Qwen3-30B-A3B Model Upgraded with Enhanced Capabilities

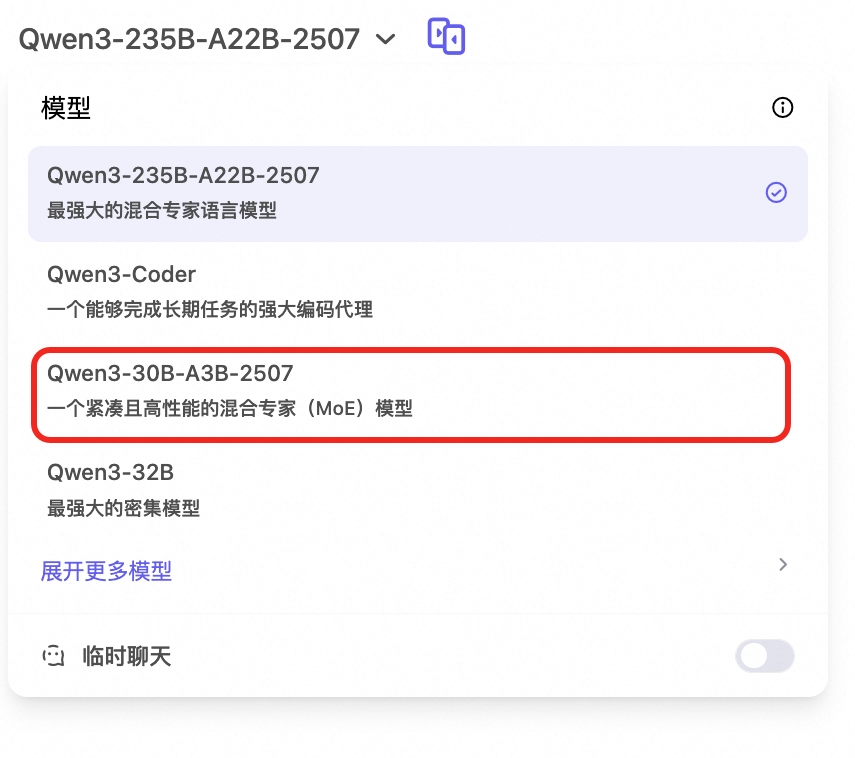

On July 29, Qwen3-30B-A3B received an update, Qwen3-30B-A3B-Instruct-2507, that delivers a substantial jump in performance. Running in non-thinking mode with only about 3 billion activated parameters per token (out of roughly 30 billion in total), the model rivals top-tier closed-source models such as Gemini 2.5 Flash (non-thinking) and GPT-4o.

Key Improvements

The new version demonstrates notable advancements in general capabilities, including:

- Instruction following

- Logical reasoning

- Text understanding

- Mathematics and science

- Programming and tool usage

Together, these gains make the model more versatile and better equipped for complex tasks.

Multilingual and Long-Tail Knowledge

The update also improves multilingual performance, with substantially broader coverage of long-tail knowledge across many languages. This matters for global applications, where users ask about less common topics in a wide range of languages.

Subjective and Open-Ended Tasks

On subjective and open-ended tasks, the new version aligns more closely with user preferences, producing higher-quality text and more helpful responses, so interactions feel more natural.

Long-Context Understanding

Long-context understanding has also been significantly upgraded: the model now handles inputs of up to 256K (262,144) tokens. This lets it keep track of information spread across long inputs, such as entire reports, when answering questions or generating text.
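The 256K figure is easy to misread as 256,000; as a token budget it is 256 × 1024 = 262,144 tokens, and the output a model generates typically has to fit in the same window as the prompt. The minimal Python sketch below checks whether a request fits, assuming that prompt and output share one window and that the prompt's token count is already known (in practice it would come from the model's own tokenizer). The names `CONTEXT_WINDOW`, `fits_in_context`, and `max_prompt_tokens` are hypothetical helpers for illustration, not part of any Qwen API.

```python
# Hypothetical constant: 256K context window expressed in tokens (256 * 1024).
CONTEXT_WINDOW = 256 * 1024  # 262,144 tokens


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the prompt plus the generation budget fits in the window.

    Assumption: prompt and generated tokens share a single window, as in
    standard decoder-only generation.
    """
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= context_window


def max_prompt_tokens(max_new_tokens: int,
                      context_window: int = CONTEXT_WINDOW) -> int:
    """Largest prompt length that still leaves room for max_new_tokens of output."""
    return max(0, context_window - max_new_tokens)


if __name__ == "__main__":
    print(CONTEXT_WINDOW)                    # 262144
    print(fits_in_context(250_000, 16_384))  # 266,384 > 262,144, so False
    print(max_prompt_tokens(16_384))         # 245760
```

Reserving the output budget first, as `max_prompt_tokens` does, is a simple way to decide how much of a long document to include before sending a request.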

Key Points

- Qwen3-30B-A3B-Instruct-2507 rivals top closed-source models with only about 3B activated parameters.

- Improvements span instruction following, reasoning, multilingual support, and long-text processing.

- Closer alignment with user preferences on subjective tasks makes responses more helpful.

- Long-text understanding now supports up to 256K tokens.