Qwen Lab Unveils OmniAudio: Spatial Sound from 360° Videos

Qwen Lab has introduced OmniAudio, a system that generates spatial audio directly from 360-degree videos. The aim is to improve virtual reality experiences by producing soundscapes that follow what is happening in the panoramic visuals.

Breaking New Ground in Spatial Audio

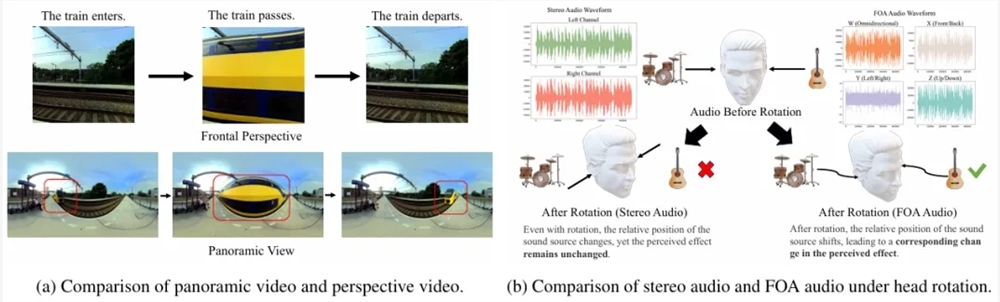

Traditional audio generation techniques have struggled to keep pace with advances in 360° video technology. Most existing solutions produce flat, non-spatial sound that fails to capture the directional richness of panoramic content. OmniAudio instead outputs First-order Ambisonics (FOA), a four-channel format in which W carries the omnidirectional signal and X, Y, and Z carry the directional components along three axes, so that sounds can be localized in 3D.
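To make the four channels concrete, here is a minimal sketch of how a single mono sample could be encoded into FOA for a known source direction. The function name `encode_foa` and the angle and gain conventions are assumptions chosen for illustration; the article does not say which Ambisonics convention OmniAudio uses.

```python
import math

def encode_foa(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into (W, X, Y, Z) for a source direction.

    Assumed convention: azimuth is measured counter-clockwise from straight
    ahead, elevation upward from the horizontal plane, and W carries the
    signal at unit gain. Other conventions scale W by 1/sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                 # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front-back axis
    y = sample * math.sin(az) * math.cos(el)   # left-right axis
    z = sample * math.sin(el)                  # up-down axis
    return w, x, y, z

# A source directly to the listener's left (azimuth 90, elevation 0)
# puts all of its directional energy on the Y channel.
w, x, y, z = encode_foa(1.0, 90.0, 0.0)
```

A flat mono or stereo track has no equivalent of the X and Z channels, which is why it cannot place a sound behind or above the listener.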

The Challenge of Data Scarcity

One major obstacle in developing this technology was the lack of paired datasets containing both 360° video and corresponding spatial audio. Qwen Lab's research team addressed this by creating the Sphere360 dataset, an extensive collection featuring:

- Over 103,000 real-world video clips

- 288 distinct audio event categories

- 288 hours of total content

The dataset underwent rigorous quality control measures to ensure precise alignment between visual and audio components.

How OmniAudio Works

The system is trained in two stages:

- Self-supervised pretraining: Uses large-scale non-spatial audio resources that have been converted into a "pseudo-FOA" format.

- Supervised fine-tuning: Combines dual-branch video representation with masked flow-matching to refine spatial accuracy.

This hybrid approach allows the model to first learn general audio patterns before specializing in precise directional sound reproduction.
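The two stages can be sketched roughly as follows. This is an illustration under stated assumptions, not Qwen Lab's implementation: the stereo-to-FOA mapping in `stereo_to_pseudo_foa` (sum and difference of the two channels, with the remaining channels zeroed) is one plausible conversion, and `masked_flow_matching_loss` uses a generic linear-path flow-matching objective with the video conditioning left out. Both function names are hypothetical.

```python
def stereo_to_pseudo_foa(left, right):
    """Map a stereo clip to four FOA-shaped channels (assumed mapping)."""
    w = [l + r for l, r in zip(left, right)]   # sum as omnidirectional proxy
    y = [l - r for l, r in zip(left, right)]   # difference as left-right proxy
    x = [0.0] * len(w)                         # stereo has no front-back cue
    z = [0.0] * len(w)                         # stereo has no height cue
    return w, x, y, z

def masked_flow_matching_loss(x0, x1, t, mask, predict_velocity):
    """Mean squared velocity error on masked positions only.

    x0: noise sample, x1: data sample, t: time in [0, 1],
    mask: 1 where the model must generate, 0 where clean data is given
    as context. predict_velocity(xt, t) stands in for the network.
    """
    # Linear path from noise to data; unmasked positions expose clean data.
    xt = [(1 - t) * a + t * b if m else b for a, b, m in zip(x0, x1, mask)]
    # Along a linear path the target velocity is constant: data minus noise.
    target = [b - a for a, b in zip(x0, x1)]
    pred = predict_velocity(xt, t)
    errs = [(p - v) ** 2 for p, v, m in zip(pred, target, mask) if m]
    return sum(errs) / max(len(errs), 1)

# Usage with a dummy predictor that always outputs zero velocity.
loss = masked_flow_matching_loss(
    x0=[0.0, 0.0, 0.0, 0.0],
    x1=[1.0, 2.0, 3.0, 4.0],
    t=0.5,
    mask=[1, 0, 1, 0],
    predict_velocity=lambda xt, t: [0.0] * len(xt),
)
```

In a real system the predictor would be a neural network that also receives the video features, and the samples would be latent audio representations rather than short lists of numbers.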

Performance That Speaks Volumes

Testing on benchmark datasets yielded impressive results:

- Significant reductions in key error metrics, where lower is better (Fréchet Distance (FD), KL divergence (KL), and the angular error ΔAngular), on the YT360-Test set

- Superior performance on Sphere360-Bench evaluations

- High scores in human assessments for spatial quality and visual-audio alignment
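The article does not define ΔAngular, but an angular error between generated and reference FOA can be illustrated with a common approach: estimate each clip's dominant direction from its intensity vector (the product of W with X, Y, and Z, accumulated over time) and measure the angle between the two directions. The function names below are hypothetical, and the benchmark's exact formula may differ.

```python
import math

def dominant_direction(w, x, y, z):
    """Unit vector along the time-summed intensity W * (X, Y, Z)."""
    ix = sum(a * b for a, b in zip(w, x))
    iy = sum(a * b for a, b in zip(w, y))
    iz = sum(a * b for a, b in zip(w, z))
    norm = math.sqrt(ix * ix + iy * iy + iz * iz)
    if norm == 0.0:
        raise ValueError("clip has no directional energy")
    return ix / norm, iy / norm, iz / norm

def angular_error_deg(foa_generated, foa_reference):
    """Angle in degrees between the dominant directions of two FOA clips."""
    u = dominant_direction(*foa_generated)
    v = dominant_direction(*foa_reference)
    # Clamp to guard against rounding slightly outside [-1, 1].
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(u, v))))
    return math.degrees(math.acos(dot))

# Reference source straight ahead, generated source to the left.
reference = ([1.0, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.0])
generated = ([1.0, 1.0], [0.0, 0.0], [1.0, 1.0], [0.0, 0.0])
error = angular_error_deg(generated, reference)
```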

The system particularly excels at maintaining accurate sound positioning during head movements, a crucial factor for VR applications.
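Head movement is where FOA is convenient: a VR player can re-orient the whole sound field with a simple rotation instead of regenerating audio. The sketch below shows this for a horizontal head turn. It assumes the convention that X points forward and Y points left, and the function name `rotate_foa_for_head_yaw` is hypothetical; it illustrates a general Ambisonics property rather than any code from OmniAudio.

```python
import math

def rotate_foa_for_head_yaw(w, x, y, z, yaw_deg):
    """Re-express an FOA clip relative to a head turned left by yaw_deg.

    Turning the head counter-clockwise makes sources appear to move
    clockwise, so X and Y are rotated by the opposite angle. W and Z
    are unaffected by a rotation about the vertical axis.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    x_rot = [c * xi + s * yi for xi, yi in zip(x, y)]
    y_rot = [-s * xi + c * yi for xi, yi in zip(x, y)]
    return list(w), x_rot, y_rot, list(z)

# A source straight ahead ends up on the listener's right (negative Y)
# after the head turns 90 degrees to the left.
w_r, x_r, y_r, z_r = rotate_foa_for_head_yaw([1.0], [1.0], [0.0], [0.0], 90.0)
```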

Resources for Developers

The research team has made their work accessible to developers.

Key Points

- OmniAudio generates realistic spatial audio from panoramic videos using FOA format.

- The Sphere360 dataset addresses critical data scarcity in this emerging field.

- Two-stage training combines self-supervised learning with supervised refinement.

- Testing shows significant improvements over existing solutions in both objective metrics and human evaluation.