Quark AI Outperforms Rivals in College Entrance Math Exam

As the 2025 national college entrance exams concluded, a parallel test unfolded: could artificial intelligence handle the challenging math paper? The results revealed clear frontrunners among the AI models tested.

In evaluations conducted by the financial outlet LanJing and the tech outlet Four Trees Relative Theory, Quark emerged as the standout performer. Competing against DouBao, YuanBao, and ChatGPT on the actual 2025 National College Entrance Exam Math Test (Volume I), Quark scored 145 and 146 out of 150 in the two evaluations respectively, the highest marks recorded.

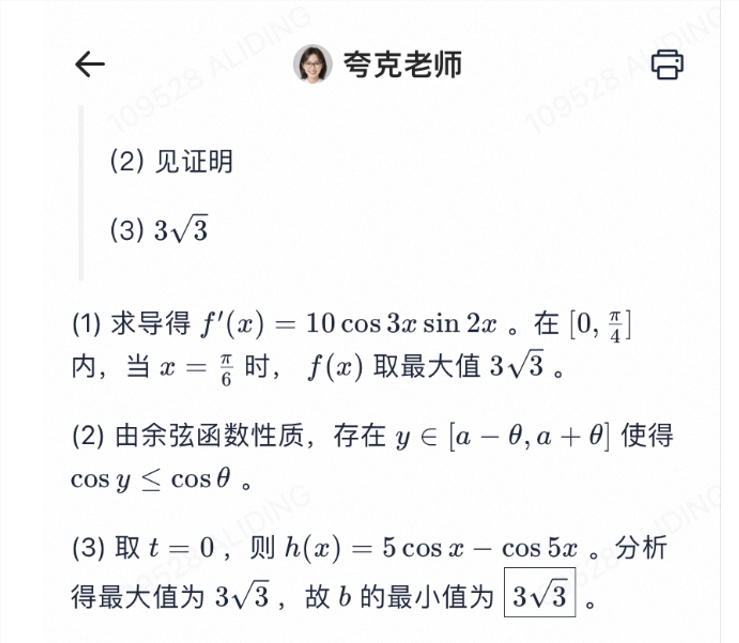

The tests were run under strict conditions: internet search was disabled, so the models relied solely on their trained knowledge and reasoning. On the multiple-choice and fill-in-the-blank sections, Quark achieved 93% accuracy, though every model stumbled on question six after misreading the vector coordinates in its diagram.

Speed was another differentiator. Quark took roughly 4 minutes per question, while its competitors averaged about 6 minutes. That gap could matter in real-world settings where students need answers quickly.

Quark's performance stems from its proprietary "Learning Spirit Big Model," built on Alibaba's Tongyi Qianwen model. The system draws on billions of learning materials and specialized post-training to handle complex science problems. Users report that the model offers an interactive learning experience, walking them through the problem-solving process rather than simply handing over answers.

Could this mark a turning point for educational AI? Where traditional tutoring often emphasizes rote memorization, Quark's approach suggests AI might eventually teach students how to think mathematically rather than simply recall formulas.

Key Points

- Quark scored highest (145 and 146 out of 150) in two independent evaluations on the 2025 college entrance math exam

- Achieved 93% accuracy on objective questions and solved problems faster than competitors (about 4 vs. 6 minutes per question)

- All models misread the vector coordinates in the diagram for question six

- Powered by the "Learning Spirit Big Model," built on Alibaba's Tongyi Qianwen and drawing on billions of learning materials

- Potential to transform how students learn mathematical reasoning