Quark AI Outperforms Rivals in College Entrance Math Exam

As the 2025 college entrance exams concluded, a parallel experiment tested whether artificial intelligence could tackle the challenging math sections. The results revealed clear leaders in China's competitive AI landscape.

In controlled evaluations by financial media outlet LanJing and tech analysts Four Trees Relative Theory, Quark emerged as the standout performer. It was tested alongside DouBao, YuanBao, and ChatGPT. All models attempted the National College Entrance Exam Math Test (Volume I) under identical conditions: no internet access, and deep thinking mode only.

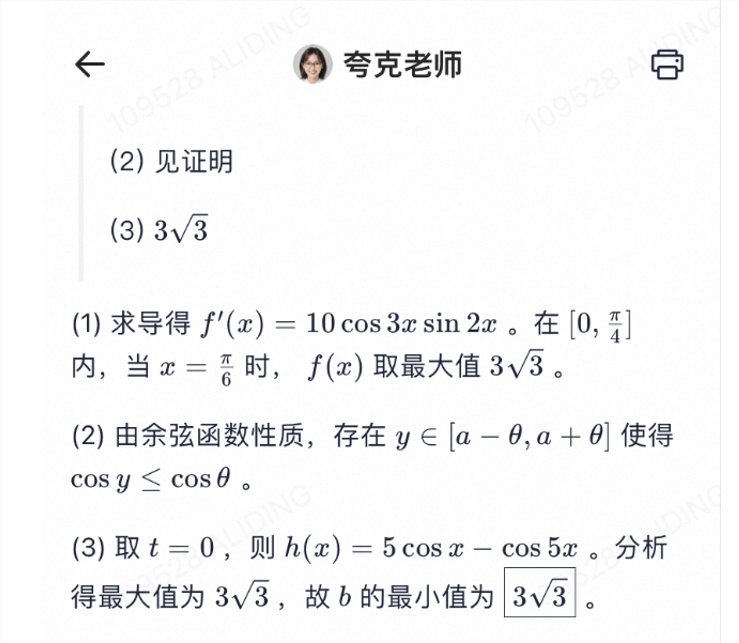

Breaking Down the Scores

Quark achieved an impressive 145 points in LanJing's assessment, demonstrating particular strength in multiple-choice and fill-in-the-blank questions with a 93% accuracy rate. Interestingly, all AI models stumbled on question six due to difficulties interpreting vector coordinates in diagrams.

The second evaluation confirmed Quark's lead with a score of 146 points. Beyond accuracy, Quark was also faster, taking about four minutes per question compared with a six-minute average for its competitors. This combination of speed and precision suggests significant advances in algorithmic processing.
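The four-minute and six-minute figures can be read two ways, and the short Python sketch below works through both. It assumes the reported times are per-question averages; the function and variable names are illustrative and do not come from either evaluation.

```python
def time_reduction(fast_minutes: float, slow_minutes: float) -> float:
    """Fraction of time saved relative to the slower solver."""
    return (slow_minutes - fast_minutes) / slow_minutes


def speedup(fast_minutes: float, slow_minutes: float) -> float:
    """How many times more questions the faster solver finishes per unit time."""
    return slow_minutes / fast_minutes


# Figures reported in the article (assumed to be per-question averages).
quark_minutes = 4.0
competitor_avg_minutes = 6.0

saved = time_reduction(quark_minutes, competitor_avg_minutes)
ratio = speedup(quark_minutes, competitor_avg_minutes)

print(f"Time saved per question: {saved:.0%}")   # 33%
print(f"Throughput ratio: {ratio:.2f}x")         # 1.50x
```

Under that assumption, Quark spends about a third less time per question, which corresponds to working through questions at 1.5 times the competitors' average rate.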

The Technology Behind the Performance

Quark's capabilities stem from its "Learning Spirit Big Model," built upon Alibaba's Tongyi Qianwen framework. The system leverages billions of learning materials and specialized post-training techniques that excel at complex scientific problem-solving. This architecture appears particularly effective at mimicking human-like heuristic learning approaches.

Could this performance mark a turning point for educational AI applications? While these results demonstrate impressive technical capabilities, they also highlight remaining challenges—like interpreting graphical data—that separate artificial from human intelligence.

Key Points

- Quark scored highest (145 & 146) in independent math exam evaluations

- Achieved 93% accuracy on objective questions but struggled with visual data interpretation

- Took about 33% less time per question than competitor AIs (4 vs. 6 minutes)

- Powered by Alibaba's Tongyi Qianwen-based learning model with billions of training materials