OpenMemory MCP Launches: Local AI Memory Sharing for Seamless Workflows

The AI development landscape has gained a new tool with the release of OpenMemory MCP, an open-source memory server built on the Model Context Protocol (MCP) that shares memory locally across AI platforms. It lets developers keep a single repository of interaction data that multiple AI tools can read and write.

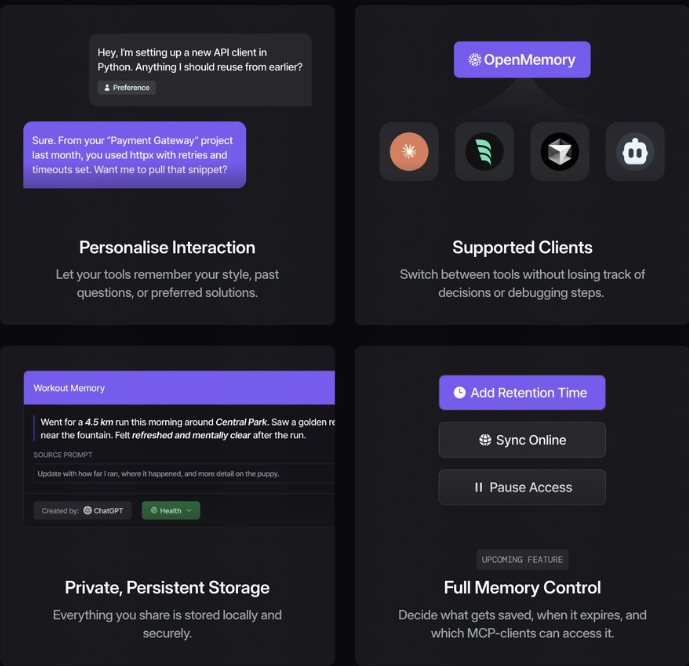

Local Storage Meets Cross-Platform Compatibility

At its core, OpenMemory MCP functions as a bridge between disparate AI tools. Unlike cloud-based alternatives, it stores all interaction data, from project requirements to coding preferences, directly on the user's device. This localized approach not only enhances privacy but also gives developers complete control over their data.

The system currently supports popular MCP-compatible clients including Claude, Cursor, and Windsurf. When a user enters information in one tool, it becomes immediately available to the others in the ecosystem, so project specifications and preferences no longer need to be repeated in each interface.
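The sharing model described above can be illustrated with a minimal sketch. It assumes nothing about OpenMemory's actual storage or API: `LocalMemoryStore`, the JSON file, and the client names are hypothetical stand-ins that only show the idea of several tools reading and writing one on-device store.

```python
import json
import tempfile
from pathlib import Path


class LocalMemoryStore:
    """Hypothetical on-disk store shared by several AI clients (illustration only)."""

    def __init__(self, path: Path) -> None:
        self.path = path
        if not self.path.exists():
            self.path.write_text("[]", encoding="utf-8")

    def add(self, client: str, text: str) -> None:
        # Append one memory and persist it immediately so other clients see it.
        memories = self.all()
        memories.append({"client": client, "text": text})
        self.path.write_text(json.dumps(memories, indent=2), encoding="utf-8")

    def all(self) -> list[dict]:
        # Always re-read from disk: the local file is the single source of truth.
        return json.loads(self.path.read_text(encoding="utf-8"))


def demo_shared_memory() -> list[dict]:
    """Write from one client handle and read from another that shares the file."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "memories.json"
        writer = LocalMemoryStore(path)  # e.g. the tool where a requirement is entered
        reader = LocalMemoryStore(path)  # e.g. a second tool opened later
        writer.add("claude-desktop", "Project uses Python 3.12 and pytest.")
        return reader.all()


if __name__ == "__main__":
    print(demo_shared_memory())
```

Because every read goes back to the same local file, the second client sees what the first one wrote without any cloud round trip. The real system exposes this through an MCP server rather than a shared file.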

Technical Architecture and Features

Behind the scenes, OpenMemory MCP combines several technologies:

- A Qdrant vector database for efficient memory storage

- Server-Sent Events (SSE) enabling real-time communication

- Metadata tagging (topics, emotions, timestamps) for organized retrieval

- Visual dashboard for memory management and access control

Developers have particularly praised the tool's ability to maintain context across the stages of a workflow. A user can define technical requirements in Claude Desktop, implement code in Cursor, and debug in Windsurf, all without losing the thread of the project.

Practical Applications Shine

Early adopters report significant efficiency gains in several scenarios:

- Game designers can modify character attributes across multiple tools with changes propagating instantly

- Development teams maintain consistent coding styles regardless of which platform members use

- Complex projects benefit from persistent context without manual transfer between applications

The fully local operation addresses growing concerns about data privacy in AI development. Unlike some competitors that rely on cloud synchronization, OpenMemory MCP keeps everything on-device while still enabling seamless collaboration.

Challenges and Future Development

Current limitations include relatively narrow client compatibility: major platforms such as GitHub Copilot in VS Code do not yet support MCP. The installation process also presents some technical hurdles for less experienced users.

The Mem0 development team has outlined an ambitious roadmap:

- Expanded dashboard functionality

- Multilingual support

- Potential mobile compatibility

- Integration with common workplace tools like Slack and Notion

With over 5,000 GitHub stars already accumulated, OpenMemory MCP appears poised to influence how developers interact with multiple AI tools. Its success may hinge on broader industry adoption of the MCP standard—a challenge the team seems ready to meet head-on.

Key Points

- OpenMemory MCP enables local storage and sharing of AI interaction data across compatible tools

- The solution uses a Qdrant vector database for storage and Server-Sent Events for real-time communication

- Privacy-focused design keeps all data on user devices rather than cloud servers

- Current limitations include narrow client support and a technically demanding installation

- Future updates aim to expand functionality and ecosystem integration