New UGMathBench Dataset Challenges AI's Math Reasoning Skills

The AI research community has gained a powerful new tool for testing mathematical reasoning capabilities in language models. ModelScope's Magenta community recently unveiled UGMathBench, a comprehensive benchmark dataset designed to push the boundaries of what we know about AI's ability to handle complex mathematical problems.

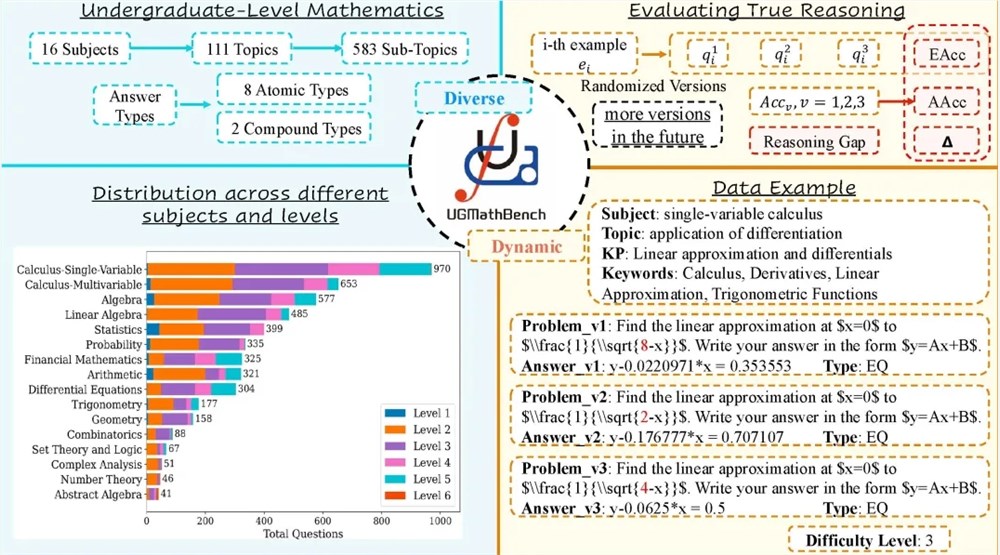

Unlike static evaluation methods that become obsolete as models improve, UGMathBench introduces dynamic testing through three randomized versions of each problem. This approach prevents models from simply memorizing answers while providing researchers with deeper insights into true reasoning capabilities.

The dataset covers 16 undergraduate mathematics subjects, from basic arithmetic to differential equations and probability theory. Its 5,062 carefully curated problems were extracted from an online assignment grading system, ensuring real-world relevance and academic rigor.

Why does this matter? As language models increasingly assist in technical fields, their mathematical reliability becomes crucial. Current benchmarks often fail to distinguish between genuine understanding and pattern recognition. UGMathBench's randomized variations force models to demonstrate flexible thinking rather than relying on rote memorization.

The research team developed three innovative evaluation metrics:

- Effective Accuracy (EAcc): Measures correct answers across all problem variations

- Reasoning Gap (Δ): Assesses consistency when solving differently phrased versions

- Robustness Efficiency (RE): Evaluates adaptability to changing problem parameters

Initial tests on 23 leading language models—including both commercial and open-source options—revealed significant challenges even for the most advanced systems. These findings highlight substantial room for improvement in AI's mathematical reasoning abilities.

"What makes UGMathBench special isn't just its size or scope," explains one researcher involved in the project. "It's how the dynamic nature of the problems forces models to show their work rather than just spit out answers. This gives us unprecedented visibility into how these systems actually think."

The dataset is now publicly available, offering researchers worldwide an opportunity to benchmark their models against this new standard. Developers can access the materials through ModelScope or Hugging Face, along with a detailed technical report on arXiv.

For educators and students, this development signals exciting possibilities. As language models become more mathematically capable, they could transform how we teach and learn complex subjects—but only if we can trust their reasoning processes.

Key Points

- UGMathBench introduces dynamic testing with randomized problem variations

- Covers 5,062 problems across 16 undergraduate mathematics subjects

- Three specialized metrics evaluate different aspects of reasoning ability

- Initial tests show even advanced models struggle with the benchmark

- Publicly available dataset aims to drive progress in mathematical AI