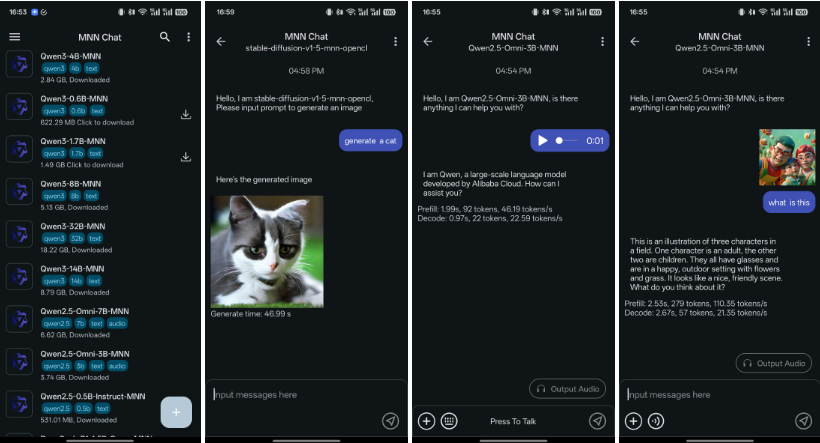

MNN-LLM Android App: Efficient AI Inference Framework

Product Introduction

MNN-LLM is a framework for running large language models efficiently on local hardware. Designed for Android devices and PCs, it reduces the high memory usage these models normally require while delivering fast inference. Because all processing happens on the device, it works completely offline, which suits privacy-conscious users.

Key Features

- Multi-modal Support: Handles text, image, and audio generation

- CPU Optimization: Speeds up inference on the CPUs of mobile devices

- Privacy Protection: All processing occurs locally, with no dependency on cloud services

- Model Compatibility: Works with various mainstream AI models

- Interactive Chat: Supports multi-turn conversations with history tracking

- Customizable Settings: Lets users choose the sampling method and adjust its parameters

- User-Friendly UI: Clean interface with convenient multi-line input
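Sampling settings control how the model picks each next token from its output scores (logits). The exact options exposed by the MNN-LLM app are not listed here, so the Python sketch below assumes three widely used ones: temperature, top-k, and top-p (nucleus) filtering. The function `sample_next_token` and its parameter names are hypothetical illustrations of the general technique, not part of MNN's API.

```python
import math
import random


def sample_next_token(logits, temperature=0.8, top_k=40, top_p=0.9, rng=None):
    """Pick a token index from raw logits using temperature, top-k, then top-p filtering.

    Hypothetical illustration: parameter names follow common conventions,
    not MNN-LLM's own configuration.
    """
    rng = rng or random.Random()
    if temperature <= 0:
        # Temperature 0 is treated as greedy decoding: take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Scale logits by temperature, then convert to probabilities with a stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(x - peak) for x in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]

    # Top-k: keep only the k most probable tokens (k <= 0 disables this filter).
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]

    # Top-p: keep the smallest prefix of ranked tokens whose cumulative
    # probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Draw one token, weighting the surviving tokens by their renormalized probabilities.
    kept_total = sum(probs[i] for i in kept)
    r = rng.random() * kept_total
    for i in kept:
        r -= probs[i]
        if r <= 0:
            return i
    return kept[-1]  # guard against floating-point round-off


logits = [2.0, 1.0, 0.5, -1.0]
print(sample_next_token(logits, temperature=0.0))  # greedy decoding → 0
```

Setting `temperature` to 0 reduces this to greedy decoding, while a higher temperature or looser `top_k`/`top_p` limits let lower-ranked tokens through and make replies more varied.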

Product Data

- Reported to lead CPU inference benchmarks (specific results not provided)

- Significant reduction in memory consumption (specific metrics not provided)

- Supports multiple model providers (specific names not listed)