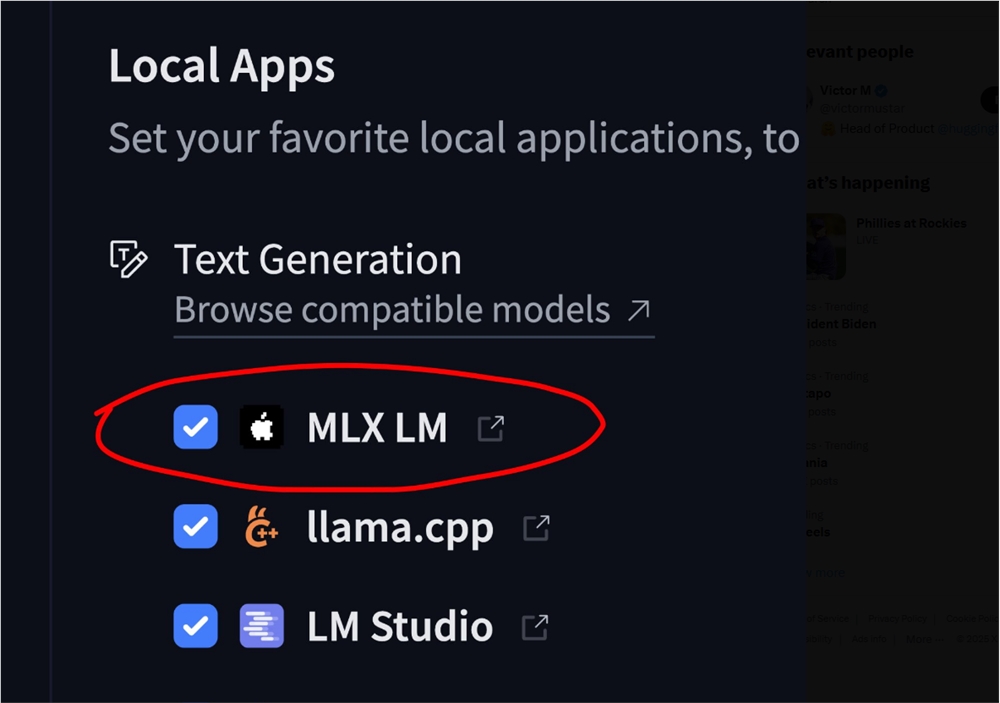

MLX-LM Joins Hugging Face to Supercharge Apple Silicon AI

In a significant advance for AI development on Apple devices, the MLX-LM framework has been integrated directly into the Hugging Face platform. Developers on Macs with M1 through M4 chips can now run more than 4,400 large language models locally with hardware-optimized performance, without relying on the cloud or working through tedious conversion steps.

The integration marks a step forward for local AI development, giving researchers and engineers a more efficient workflow. MLX, Apple's machine learning framework, is designed for Apple Silicon: it runs on the CPU and the Metal GPU and takes advantage of the chips' unified memory. As its dedicated LLM package, MLX-LM brings this optimized performance directly to language model workflows.

Simplified Model Access

Gone are the days of complex conversion steps. The Hugging Face integration means users can now pull models directly from the platform's extensive repository, with no intermediary steps required. This streamlined approach cuts setup time dramatically while maintaining optimal performance.
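As a rough illustration of what pulling a model directly from the Hub can look like, here is a minimal sketch using the `load` and `generate` functions from the `mlx_lm` Python package. The repository ID is only an example of an MLX-format model and can be swapped for any other; the helper names `is_apple_silicon` and `generate_locally` are hypothetical and not part of the library. The sketch assumes `mlx-lm` is installed (`pip install mlx-lm`) on an Apple Silicon Mac.

```python
import platform


def is_apple_silicon() -> bool:
    """Return True when running natively on an arm64 Mac."""
    return platform.system() == "Darwin" and platform.machine() == "arm64"


def generate_locally(
    user_message: str,
    repo_id: str = "mlx-community/Mistral-7B-Instruct-v0.3-4bit",  # example repo (assumption)
    max_tokens: int = 128,
) -> str:
    """Fetch a model from the Hub (or reuse the local cache) and generate a reply."""
    if not is_apple_silicon():
        raise RuntimeError("This sketch assumes an Apple Silicon Mac")

    # Imported lazily so the module can still be imported on other platforms.
    from mlx_lm import load, generate

    # load() accepts a Hugging Face repo ID and downloads the weights on first use.
    model, tokenizer = load(repo_id)

    # Format the message with the model's own chat template.
    prompt = tokenizer.apply_chat_template(
        [{"role": "user", "content": user_message}],
        add_generation_prompt=True,
    )
    return generate(model, tokenizer, prompt=prompt, max_tokens=max_tokens)
```

On a suitable machine, calling `generate_locally("Explain unified memory in one sentence.")` would download the weights once and then run entirely on the local device; later calls reuse the cached files.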

Why This Matters

For developers working on Macs, this update removes two major pain points: cloud dependency that introduces latency and privacy concerns, and the technical hurdles of model conversion. The direct compatibility means teams can prototype and deploy faster than ever before.

The framework's optimization for Apple's hardware architecture promises significant speed advantages over generic solutions. Early benchmarks show particularly strong performance gains in transformer-based models commonly used for natural language processing tasks.

Available models can be browsed at: Hugging Face MLX Library
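The same catalog can also be queried from code. The sketch below assumes the `huggingface_hub` package is installed and that MLX-format models carry the `mlx` tag on the Hub; `list_mlx_models` and `default_api` are hypothetical helper names used only for illustration.

```python
from typing import Any, List


def list_mlx_models(api: Any, limit: int = 5) -> List[str]:
    """Return up to `limit` repo IDs for models tagged `mlx` on the Hub."""
    models = api.list_models(filter="mlx", limit=limit)
    return [m.id for m in models]


def default_api() -> Any:
    """Build a real Hub client; imported lazily so the helper above works offline with a stub."""
    from huggingface_hub import HfApi

    return HfApi()
```

With network access, `list_mlx_models(default_api())` returns a handful of repository IDs, any of which can be passed to MLX-LM as the model to load.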

Key Points

- Direct Hugging Face integration eliminates model conversion needs

- Over 4,400 LLMs now run natively on M-series chips

- Optimized for Apple Silicon's Metal GPU and unified memory

- Significant performance gains for local AI development

- Enhanced privacy by reducing cloud dependency