MiroThinker v1.0: The Open-Source AI That Learns by Doing

MiroThinker v1.0 Redefines How AI Agents Learn

The artificial intelligence landscape just got more interesting with the release of MiroThinker v1.0, an open-source agent model that doesn't just think: it learns by doing.

Breaking Through Traditional Limits

While most AI models grow through parameter expansion, MiroMind's creation takes a radically different path. "Performance isn't about size alone," explains the team behind the project. "It's about interaction depth multiplied by reflection frequency."

This philosophy translates to concrete capabilities:

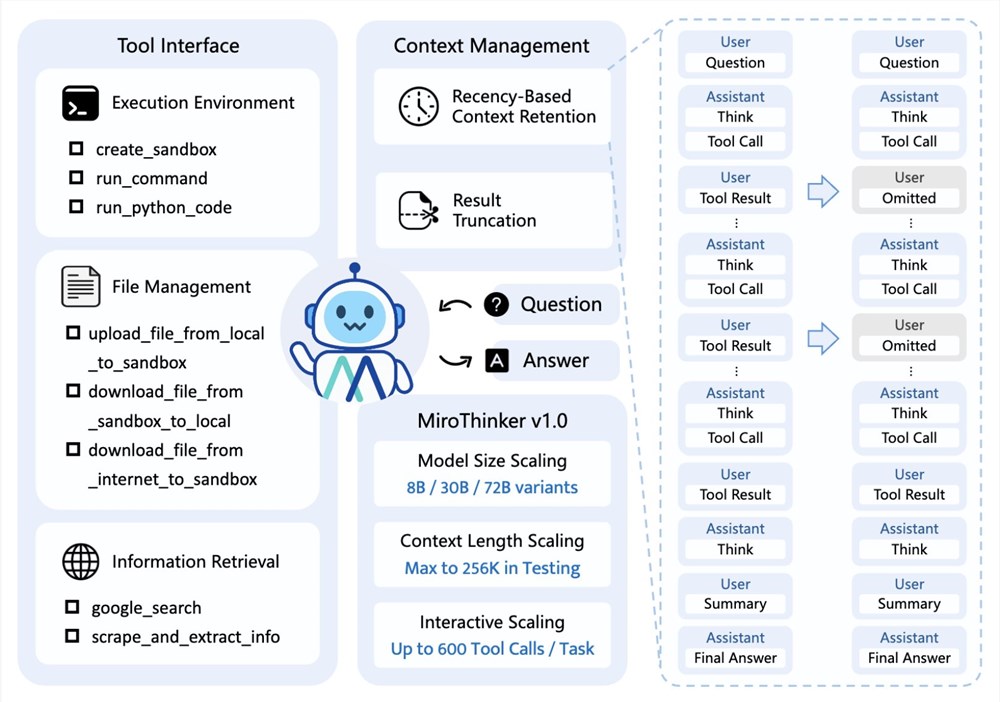

- 256K context window for handling complex, long-form tasks

- Support for 600 tool calls in a single session

- Integrated toolchain including search, Linux sandbox, code execution, and translation
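To make the tool-call budget above concrete, here is a minimal sketch of a bounded agent loop. It is an illustration only, not MiroThinker's actual code: `Session`, `run_session`, `toy_policy`, and the two stand-in tools in `TOOLS` are hypothetical names, and the only figure taken from the announcement is the 600-call limit.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

MAX_TOOL_CALLS = 600  # per-session figure quoted in the announcement

# Hypothetical stand-ins for real tools such as search or translation.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for {query!r}",
    "translate": lambda text: text.upper(),
}

Step = Tuple[str, str]  # (tool name, argument)


@dataclass
class Session:
    # Each entry is (tool name, argument, observation).
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    @property
    def calls_used(self) -> int:
        return len(self.history)


def run_session(
    policy: Callable[[Session], Optional[Step]],
    session: Session,
    budget: int = MAX_TOOL_CALLS,
) -> Session:
    """Call tools until the policy returns None or the budget is spent."""
    while session.calls_used < budget:
        step = policy(session)
        if step is None:
            break
        tool, arg = step
        observation = TOOLS[tool](arg)
        # The policy sees every earlier observation on its next turn.
        session.history.append((tool, arg, observation))
    return session


def toy_policy(session: Session) -> Optional[Step]:
    """Hypothetical policy: search three times, translate once, then stop."""
    if session.calls_used < 3:
        return ("search", f"low-sugar dessert {session.calls_used}")
    if session.calls_used == 3:
        return ("translate", session.history[-1][2])
    return None


if __name__ == "__main__":
    finished = run_session(toy_policy, Session())
    print(finished.calls_used, "tool calls used")
```

In a real agent the policy would be the model itself, choosing the next tool from the accumulated history; the budget check is what keeps a long session from running unbounded.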

From Theory to Kitchen: A Sweet Demonstration

The proof came in dessert form. In a striking demonstration of autonomous capability, MiroThinker:

- Collected hundreds of recipes through web searches

- Simulated ingredient formulations

- Calculated nutritional values

- Iterated on sweetener ratios

- Produced a complete low-sugar dessert plan with cost analysis

All of this took 600 sequential tool calls, executed flawlessly without human intervention.

Available Now for Developers

The model weights and code are currently accessible on:

- GitHub

- Hugging Face

The system requires 24 GB of VRAM for local deployment and plays nicely with popular frameworks like LangChain and LlamaIndex.

"What excites us most," shares a team member, "is how developers can customize their own toolsets to create specialized evolving agents."
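One way to picture a custom toolset is a small registry that maps tool names to plain functions. The sketch below is an assumption about what such a setup might look like, not the project's API: the `Toolset` class and the two dessert-themed tools are hypothetical and exist only for illustration.

```python
from typing import Callable, Dict, List


class Toolset:
    """Hypothetical registry mapping tool names to Python callables."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., object]] = {}

    def tool(self, name: str) -> Callable[[Callable[..., object]], Callable[..., object]]:
        """Decorator that registers a function under the given name."""
        def register(fn: Callable[..., object]) -> Callable[..., object]:
            if name in self._tools:
                raise ValueError(f"tool already registered: {name}")
            self._tools[name] = fn
            return fn
        return register

    def call(self, name: str, **kwargs: object) -> object:
        """Dispatch a call by name, as an agent would after picking a tool."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)

    def names(self) -> List[str]:
        return sorted(self._tools)


baking = Toolset()


@baking.tool("sugar_per_serving")
def sugar_per_serving(total_sugar_g: float, servings: int) -> float:
    # Plain arithmetic: grams of sugar in each serving.
    return total_sugar_g / servings


@baking.tool("scale_sweetener")
def scale_sweetener(sugar_g: float, ratio: float) -> float:
    # Scale a sugar quantity by a substitution ratio chosen by the caller.
    return sugar_g * ratio


if __name__ == "__main__":
    print(baking.names())
    print(baking.call("sugar_per_serving", total_sugar_g=120.0, servings=8))
```

Keeping tools as ordinary named functions means a specialized agent can be assembled by swapping the registry, without touching the loop that drives it.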

The Road Ahead: An Agent Arms Race?

The MiroMind team isn't resting on their laurels. Their roadmap includes:

- Expanding the tool ecosystem to support thousands of calls

- Developing "lifelong learning" versions with million-level contexts

The open-source nature of this release has industry watchers buzzing about potential ripple effects across the AI landscape.

Key Points:

- New learning paradigm: Deep Interaction Scaling emphasizes real-world feedback over parameter stacking

- Practical applications: Demonstrated ability to complete complex multi-step tasks autonomously

- Accessible technology: Available now for developers to build upon

- Future potential: Could redefine how we approach agent development