MiniCPM-V 4.0: Open-Sourced Multimodal AI Model for Edge Devices

ModelBest (Mianbi Intelligence) Open-Sources MiniCPM-V 4.0 Multimodal Model

The ModelScope community has announced the open-source release of MiniCPM-V 4.0, a multimodal AI model optimized for edge devices. With roughly 4 billion parameters, the model, nicknamed "Mianbi Xiaogangpao" ("Mianbi little steel cannon"), is reported to deliver state-of-the-art performance for its size while remaining efficient on mobile platforms.

Performance Breakthroughs

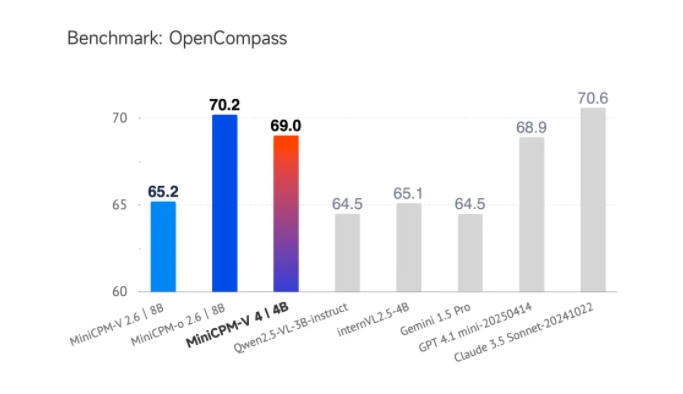

MiniCPM-V 4.0 reports top-tier results for its size class on several benchmarks, including:

- OpenCompass

- OCRBench

- MathVista

- MMVet

- MMBench V1.1

The model surpasses similarly sized competitors such as Qwen2.5-VL-3B and InternVL2.5-4B, and in comprehensive evaluations it rivals commercial offerings such as GPT-4.1-mini and Claude 3.5 Sonnet.

Mobile Optimization

The research team highlights several key advantages for edge deployment:

- 3.33 GB of GPU memory in use (measured on an Apple M4 with Metal)

- Apple Neural Engine (ANE) plus Metal acceleration for a faster first response

- Stable operation without overheating during prolonged use

- iOS app available for local deployment

"This represents a significant advancement in bringing sophisticated multimodal AI to consumer devices," noted the development team.

Technical Innovations

The model's efficiency stems from:

- An optimized architecture with half the parameters of the previous 8B version

- Enhanced throughput (13,856 tokens/s at 256 concurrent users)

- Progressive scaling of performance with input resolution
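To put the throughput and parameter figures in perspective, the following minimal sketch works through the arithmetic. It assumes the 13,856 tokens/s figure is an aggregate across all 256 concurrent users and that it is shared evenly among them; the article states neither, and the function names are illustrative only.

```python
def per_stream_throughput(total_tokens_per_s: float, concurrent_users: int) -> float:
    """Average token rate per user, assuming the aggregate is shared evenly."""
    if concurrent_users <= 0:
        raise ValueError("concurrent_users must be positive")
    return total_tokens_per_s / concurrent_users


def relative_reduction(old: float, new: float) -> float:
    """Fractional reduction going from `old` to `new`."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (old - new) / old


# Figures quoted in the article.
TOTAL_TOKENS_PER_S = 13_856
CONCURRENT_USERS = 256

per_user = per_stream_throughput(TOTAL_TOKENS_PER_S, CONCURRENT_USERS)
reduction = relative_reduction(8e9, 4e9)  # nominal 8B -> 4B parameter counts

print(f"{per_user:.1f} tokens/s per user")   # prints "54.1 tokens/s per user"
print(f"{reduction:.0%} fewer parameters")   # prints "50% fewer parameters"
```

Under these assumptions each user would see roughly 54 tokens per second on average. Real per-user rates depend on batching, prompt length, and hardware, none of which the article specifies.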

The accompanying MiniCPM-V CookBook provides developers with tools for lightweight deployment across various platforms and use cases.

Availability

Developers can access the model through:

The CookBook is available on GitHub.

Key Points:

- Open-source multimodal model optimized for phones and other edge devices

- 50% parameter reduction from previous generation with improved performance

- Reports leading results for its size on OCR and visual reasoning benchmarks

- Includes complete deployment toolkit for developers