Microsoft Paper Unintentionally Reveals AI Model Parameters

A research paper published by Microsoft on December 26 has unintentionally disclosed estimated parameter counts for several large language models, including models developed by OpenAI and Anthropic. Although the paper itself is about evaluating AI in medicine, the figures have sparked industry discussion about model architecture and technical capabilities.

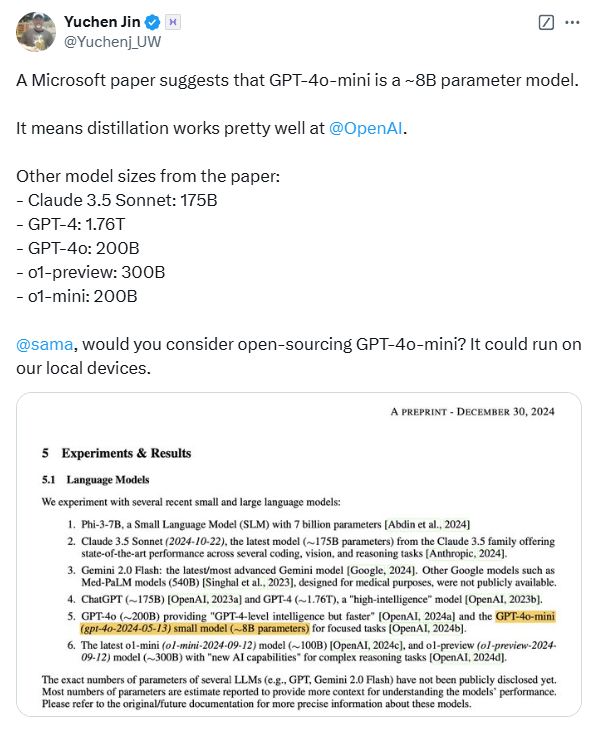

According to the paper, OpenAI's o1-preview model has approximately 300 billion parameters and its GPT-4o model about 200 billion, while the smaller GPT-4o-mini variant has only 8 billion. These figures contrast sharply with Nvidia's earlier claim that GPT-4 uses a 1.76-trillion-parameter mixture-of-experts (MoE) architecture. The paper also puts Anthropic's Claude 3.5 Sonnet at about 175 billion parameters.

This is not the first time a Microsoft publication has revealed model parameters. In October, a Microsoft paper stated that GPT-3.5-Turbo has 20 billion parameters, a figure that was later removed in an updated version. These repeated disclosures have led industry insiders to question whether the leaks are intentional or merely oversights.

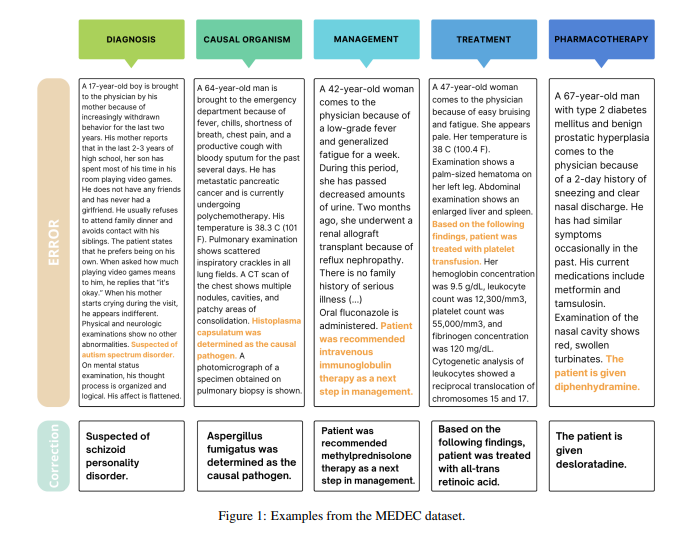

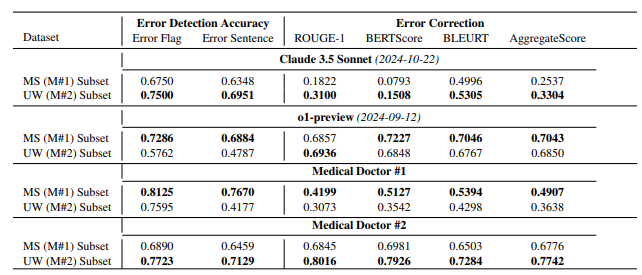

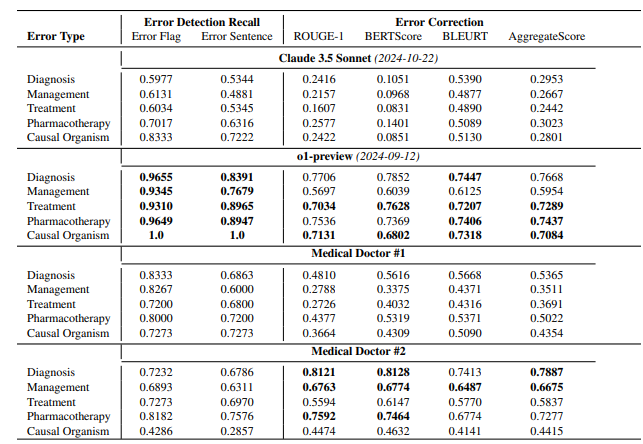

The paper's main purpose is to introduce MEDEC, a benchmark for the medical domain. The research team used 488 clinical notes from three hospitals in the United States to assess how well various models identify and correct errors in medical documents. Claude 3.5 Sonnet outperformed the other models at error detection, with a score of 70.16.
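The article does not explain how the 70.16 score is computed. As an illustration only, assuming it is an accuracy percentage for a binary "does this note contain an error?" flag, scoring could be sketched as follows. The function name `flag_accuracy` and the toy labels are hypothetical and are not taken from MEDEC or its evaluation code.

```python
from typing import Sequence


def flag_accuracy(predicted: Sequence[bool], gold: Sequence[bool]) -> float:
    """Percentage of notes whose predicted error flag matches the gold label.

    Hypothetical metric: assumes each note has a single binary label
    (True = the note contains an error) and that the benchmark score is
    plain accuracy expressed as a percentage.
    """
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("no notes to score")
    # Count notes where the model's flag agrees with the reference label.
    correct = sum(p == g for p, g in zip(predicted, gold))
    return 100.0 * correct / len(gold)


if __name__ == "__main__":
    # Toy labels for four notes, not real MEDEC data.
    gold = [True, False, True, True]
    predicted = [True, False, False, True]
    print(f"{flag_accuracy(predicted, gold):.2f}")  # 75.00
```

Under this assumption, a score of 70.16 would mean the model's flag matched the reference label on roughly 70 percent of the evaluated notes.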

The accuracy of the disclosed figures has sparked significant debate within the industry. Some experts argue that if Claude 3.5 Sonnet can outperform larger models such as GPT-4o and o1-preview with fewer parameters, it would underscore Anthropic's technical strength. Other analysts suggest that some of the parameter estimates may simply track model pricing.

Notably, while the paper estimates parameters for several mainstream models, it gives no figure for Google's Gemini. Analysts speculate that this is because Gemini runs on Google's TPUs rather than Nvidia GPUs, which makes estimates based on token generation speed harder to calibrate.
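Neither the paper nor the article describes an estimation method. One common back-of-the-envelope approach, offered here purely as an assumption, treats single-stream decoding as memory-bandwidth bound: each generated token requires reading every active weight from memory once, so tokens per second is roughly bandwidth divided by (parameters × bytes per parameter). Solving for parameters gives the sketch below. The function name and all input numbers are hypothetical. The result only makes sense when the serving hardware's bandwidth is known, which is exactly what an outside observer lacks for Gemini on TPUs.

```python
def estimate_params(bandwidth_bytes_per_s: float,
                    tokens_per_s: float,
                    bytes_per_param: float = 2.0) -> float:
    """Rough count of weights streamed per generated token.

    Assumption: decoding is memory-bandwidth bound, so every token reads all
    active weights once. Batching, multi-device sharding, KV-cache traffic and
    speculative decoding all break this model, and for an MoE model the result
    reflects active (not total) parameters.
    """
    if bandwidth_bytes_per_s <= 0 or tokens_per_s <= 0 or bytes_per_param <= 0:
        raise ValueError("all inputs must be positive")
    # params ≈ bandwidth / (tokens per second × bytes per parameter)
    return bandwidth_bytes_per_s / (tokens_per_s * bytes_per_param)


if __name__ == "__main__":
    # Hypothetical inputs: 2 TB/s of bandwidth, 50 tokens/s, 16-bit weights.
    params = estimate_params(2e12, 50)
    print(f"{params / 1e9:.0f}B")  # 20B
```

The estimate is only as good as the bandwidth figure fed into it: if the deployment's hardware, precision or degree of parallelism is unknown, the same observed token speed can imply very different model sizes.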

As OpenAI increasingly downplays its commitment to open-source initiatives, the disclosure of core information such as model parameters remains a focal point of industry interest. This unexpected leak has prompted renewed discussions regarding AI model architecture, technological pathways, and commercial competition within the sector.

Key points:

- Microsoft’s recent paper reveals estimated parameter sizes of various AI models, including OpenAI's and Anthropic's.

- The paper introduces a benchmark test for medical AI, MEDEC, using clinical notes for evaluation.

- The disclosed figures, which differ sharply from earlier claims such as Nvidia's 1.76 trillion parameters for GPT-4, have renewed debate about model performance and architectural efficiency.