Microsoft Open-Sources Phi-4, Surpassing GPT-4o and Llama-3.1 on Several Benchmarks

Microsoft has released Phi-4, a small yet capable language model with just 14 billion parameters, now available on the Hugging Face platform. Despite its compact size, Phi-4 outperforms several prominent models on a number of benchmarks, including OpenAI's GPT-4o and open-source models such as Qwen2.5 and Llama-3.1.

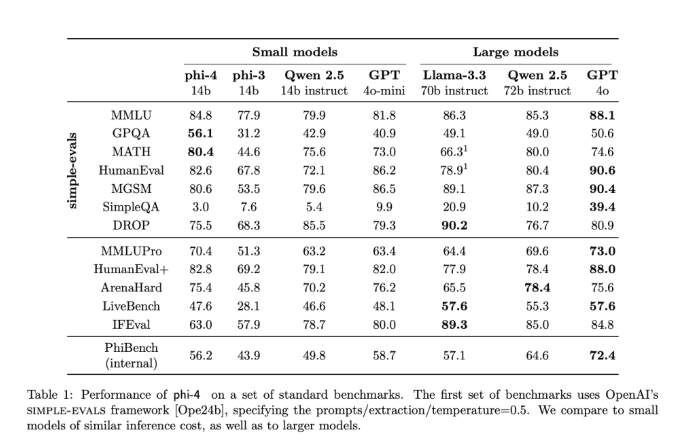

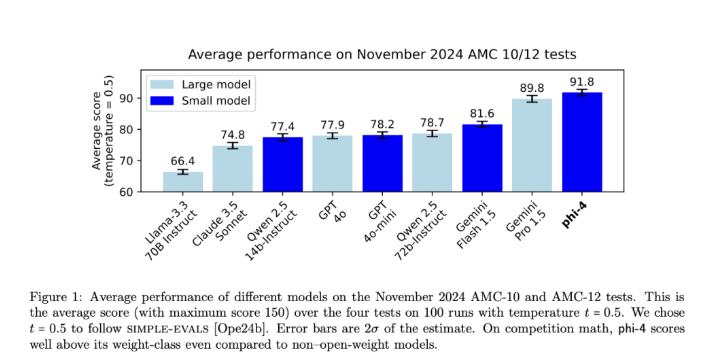

In benchmark testing, Phi-4 stood out on problems from the American Mathematics Competitions (AMC), where it scored 91.8, ahead of competitors such as Gemini Pro 1.5 and Claude 3.5 Sonnet. The model also scored 84.8 on MMLU, pointing to strong reasoning and mathematical problem-solving abilities.

Phi-4 differentiates itself through synthetic data generation techniques, including multi-agent prompting, instruction reversal, and self-correction. These techniques are aimed at strengthening the model's reasoning on complex tasks. Unlike many models that rely primarily on organic data, Phi-4 is trained largely on high-quality synthetic data generated for this purpose.
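To make instruction reversal more concrete, here is a minimal sketch of one way such a pipeline could look: start from existing code snippets, ask a model to write the instruction that would produce each snippet, and keep the resulting pair. This is an illustration, not Microsoft's pipeline. The `describe` and `regenerate` callables stand in for calls to a language model, and the round-trip similarity filter with its `min_similarity` threshold is an assumption added here for illustration.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, Iterable, List


def text_similarity(a: str, b: str) -> float:
    """Rough character-level similarity in [0, 1] (illustrative choice)."""
    return SequenceMatcher(None, a, b).ratio()


def reverse_instructions(
    snippets: Iterable[str],
    describe: Callable[[str], str],    # hypothetical: code -> instruction
    regenerate: Callable[[str], str],  # hypothetical: instruction -> code
    min_similarity: float = 0.8,       # assumed threshold, not from the source
) -> List[Dict[str, str]]:
    """Build (instruction, response) pairs from existing code snippets."""
    pairs = []
    for code in snippets:
        # Step 1: "reverse" the snippet into an instruction that describes it.
        instruction = describe(code)
        # Step 2: regenerate code from that instruction as a fidelity check.
        candidate = regenerate(instruction)
        # Step 3: keep the pair only if the round trip stays close to the original.
        if text_similarity(code, candidate) >= min_similarity:
            pairs.append({"instruction": instruction, "response": code})
    return pairs
```

In practice `describe` and `regenerate` would wrap model calls; passing them in as plain functions keeps the sketch independent of any particular API.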

The model is based on a decoder-only Transformer architecture and supports context lengths of up to 16K tokens, enough to process long inputs in a single pass. During pre-training, Phi-4 was exposed to approximately 10 trillion tokens, a combination of synthetic and carefully curated organic data, which supports its results on benchmarks such as MMLU and HumanEval.

Phi-4’s advantages extend beyond its size and performance. It is designed for efficiency, making it compatible with consumer-grade hardware. Its reasoning capabilities in STEM-related tasks, such as mathematics and science, are particularly impressive compared to both smaller and larger models. Additionally, Phi-4 can be fine-tuned using diverse synthetic datasets to tailor its abilities to specific domain needs.
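A rough back-of-the-envelope calculation shows why a 14-billion-parameter model is within reach of consumer hardware. The sketch below estimates the memory needed for the weights alone at a few precisions. The bit widths are illustrative assumptions, and the estimate ignores the KV cache, activations, and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


PHI4_PARAMS = 14e9  # 14 billion parameters, as stated in the article

# Illustrative precisions; which ones are practical depends on the runtime used.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(PHI4_PARAMS, bits):.0f} GB")
```

At 16-bit precision the weights alone come to about 28 GB, while 4-bit quantization brings them down to about 7 GB, which is where consumer GPUs become realistic.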

The technical innovations behind Phi-4 include data generation techniques such as multi-agent prompting and self-correction, which improve its ability to reason and solve problems. The model also relies on post-training methods such as rejection sampling and Direct Preference Optimization (DPO) to improve its performance on complex reasoning tasks. Pivotal Token Search (PTS) identifies the individual tokens that most affect whether a response ends up correct, so that training can focus on those critical decision points.
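The two ideas named above can be sketched in a few lines of plain Python. The first function is the standard DPO loss for a single preference pair, written from the published DPO objective rather than from Microsoft's training code. The second is a simplified illustration of the idea behind Pivotal Token Search: given an estimated probability of success after each prefix of a response, flag the tokens where that probability jumps or drops sharply. The function names, `beta`, and `threshold` are assumptions for illustration, and the sketch assumes the per-prefix probabilities have already been estimated (for example by sampling completions), which is the expensive part in practice.

```python
import math
from typing import List, Tuple


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls deviation from the reference model
) -> float:
    """Standard DPO loss for one (chosen, rejected) pair of sequence log-probs."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    x = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) computed in a numerically stable way.
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def find_pivotal_tokens(
    success_probs: List[float], threshold: float = 0.2
) -> List[Tuple[int, float]]:
    """Flag tokens whose inclusion shifts the success probability sharply.

    success_probs[i] is the estimated probability of a correct final answer
    given the first i tokens, so the list has one more entry than the response
    has tokens. Returns (token_index, probability_change) pairs.
    """
    pivotal = []
    for i in range(len(success_probs) - 1):
        change = success_probs[i + 1] - success_probs[i]
        if abs(change) >= threshold:
            pivotal.append((i, change))
    return pivotal
```

A pair in which the policy already prefers the chosen response more strongly than the reference does yields a loss below `log(2)`, the value at zero margin.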

Phi-4’s open-source release marks a significant step forward in AI development. Available for download on Hugging Face, the model is licensed under the MIT License, allowing for commercial use. This open policy has drawn considerable attention from the AI community, with developers and enthusiasts praising Phi-4 for its performance and potential. Hugging Face's official social media account even referred to it as "the best 14B model ever."

Model link: https://huggingface.co/microsoft/phi-4
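For readers who want to try the model, the following is a minimal sketch of loading it with the Hugging Face `transformers` library. It assumes `transformers`, `torch`, and `accelerate` are installed and that enough memory is available for the weights; the system prompt, `max_new_tokens` value, and helper names are illustrative choices, not recommendations from Microsoft.

```python
from typing import Dict, List

MODEL_ID = "microsoft/phi-4"  # repository name taken from the model link above


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a user question in a chat-style message list (illustrative prompt)."""
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": question},
    ]


def generate_answer(question: str, max_new_tokens: int = 256) -> str:
    """Download the model (large) and generate a reply to one question."""
    # Imported here so the helpers above work without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",  # use the dtype stored in the checkpoint
        device_map="auto",   # requires accelerate; places weights on available devices
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Example call (triggers a multi-gigabyte download):
# print(generate_answer("What is the sum of the first 10 positive integers?"))
```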

Key Points

- Microsoft’s Phi-4 model, with only 14 billion parameters, surpasses major models like GPT-4o and Llama-3.1 on several benchmarks.

- Phi-4 excels in math and reasoning, scoring high in tests like AMC and MMLU.

- The model is open-source and licensed for commercial use, attracting developers and AI enthusiasts.