Meta Unveils SPIRIT LM: An AI Model for Emotional Expression

Meta AI has announced the open-source release of SPIRIT LM, a foundational multimodal language model designed to blend text and speech seamlessly. This development opens new avenues for applications involving both audio and textual data.

Overview of SPIRIT LM

SPIRIT LM is built on a pre-trained text model with 7 billion parameters. The model was extended through continued training on both text and speech units, so it can understand and generate text like other large text models while also handling speech and mixing the two modalities. For example, SPIRIT LM can be used for:

- Speech Recognition: Converting spoken language into text.

- Speech Synthesis: Transforming written text into spoken language.

- Speech Classification: Assessing the emotional tone conveyed in speech.

Emotional Expression Capabilities

One of SPIRIT LM's standout features is its ability to convey emotional expression. The model can recognize and generate a range of speech tones and styles, so its output sounds closer to natural human speech than the flat, robotic delivery typical of many AI systems.

To optimize the emotional expressiveness of the AI, Meta's researchers have created two versions of SPIRIT LM:

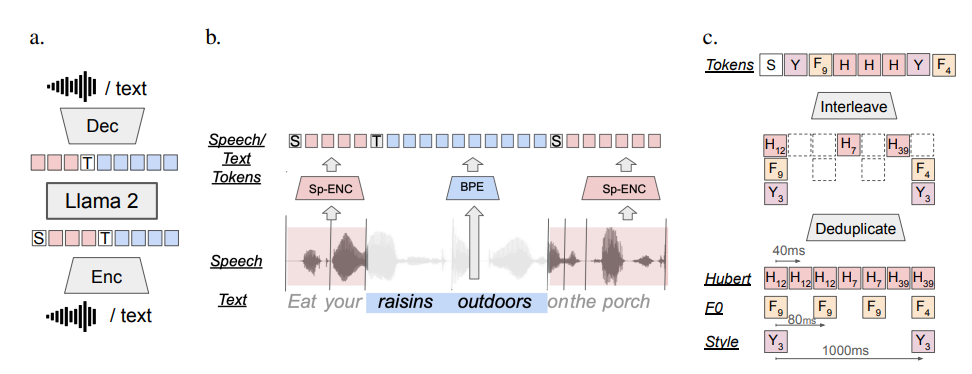

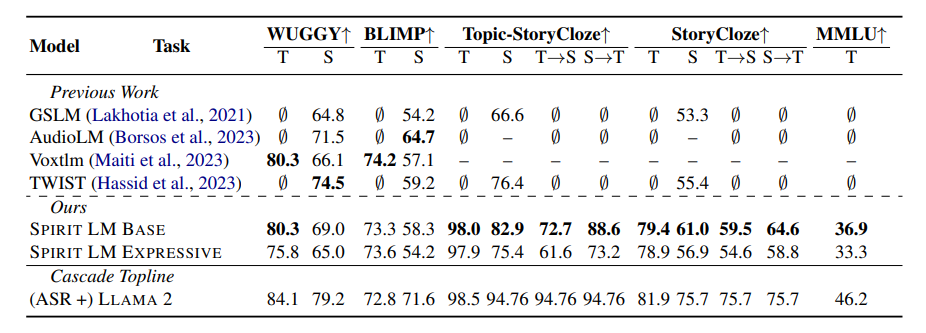

- Base Version (BASE): Focuses primarily on the phonetic aspects of speech.

- Expressive Version (EXPRESSIVE): Incorporates phonetic information along with tone and style, enabling a richer and more vivid vocal output.
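
The difference between the two versions can be pictured as a difference in the token stream the model sees. The following Python sketch is illustrative only: the token labels (`[Hu..]`, `[Pi..]`, `[St..]`), the timestamps, and the `build_stream` helper are assumptions made for this example, not Meta's released code.

```python
from typing import List, Sequence, Tuple

# Hypothetical token representation: (start time in seconds, token label).
Token = Tuple[float, str]


def build_stream(
    phonetic: Sequence[Token],
    pitch: Sequence[Token] = (),
    style: Sequence[Token] = (),
) -> List[str]:
    """Merge token streams into one sequence ordered by start time.

    With only phonetic tokens this mimics a Base-style input; adding
    pitch (tone) and style tokens mimics an Expressive-style input.
    sorted() is stable, so tokens that share a start time keep the
    order phonetic, pitch, style.
    """
    merged = sorted([*phonetic, *pitch, *style], key=lambda tok: tok[0])
    return [label for _, label in merged]


if __name__ == "__main__":
    phonetic = [(0.00, "[Hu1]"), (0.04, "[Hu2]")]
    pitch = [(0.00, "[Pi5]")]
    style = [(0.00, "[St3]")]
    print("base:      ", build_stream(phonetic))
    print("expressive:", build_stream(phonetic, pitch, style))
```

In this sketch the Expressive-style stream is a superset of the Base-style stream, which matches the description above: the same phonetic content, plus extra tokens that carry tone and style.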

Training Methodology

SPIRIT LM builds on Meta's Llama 2 text model. The researchers used an interleaved training approach, feeding the model a large dataset that mixes text and speech. This lets the model learn the patterns of both modalities at the same time, which is crucial for its multimodal capabilities.
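
One way to picture interleaved training is a single sequence that switches between text and speech tokens at word boundaries. The sketch below is a simplified illustration under assumptions: the `[TEXT]` and `[SPEECH]` marker tokens, the speech-unit labels, the random switching rule, and the `interleave` function are hypothetical and not taken from the SPIRIT LM codebase.

```python
import random
from typing import List, Sequence


def interleave(
    words: Sequence[str],
    speech_units: Sequence[Sequence[str]],
    switch_prob: float = 0.3,
    seed: int = 0,
) -> List[str]:
    """Build one mixed sequence from word-aligned text and speech units.

    words[i] and speech_units[i] are assumed to describe the same word.
    At each word boundary the modality flips with probability
    switch_prob; a marker token is emitted whenever the modality changes.
    """
    if len(words) != len(speech_units):
        raise ValueError("words and speech_units must be aligned one-to-one")

    rng = random.Random(seed)
    tokens: List[str] = []
    use_speech = False      # start in the text modality
    last_marker = None

    for i, (word, units) in enumerate(zip(words, speech_units)):
        if i > 0 and rng.random() < switch_prob:
            use_speech = not use_speech
        marker = "[SPEECH]" if use_speech else "[TEXT]"
        if marker != last_marker:
            tokens.append(marker)
            last_marker = marker
        if use_speech:
            tokens.extend(units)
        else:
            tokens.append(word)
    return tokens


if __name__ == "__main__":
    words = ["the", "cat", "sat"]
    units = [["[Hu1]", "[Hu2]"], ["[Hu3]"], ["[Hu4]", "[Hu5]"]]
    print(interleave(words, units, switch_prob=0.5, seed=1))
```

Because each training sequence contains both modalities, a single next-token objective covers text, speech, and the transitions between them.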

Benchmarking Emotional Expression

To evaluate SPIRIT LM's proficiency in emotional expression, the researchers introduced a new benchmark, the Speech-Text Sentiment Preservation benchmark (STSP). It consists of prompts that express different emotions and assesses whether the text and speech the model generates accurately reflect the emotion of the prompt. According to the researchers, the Expressive version of SPIRIT LM retains emotion well, and they describe it as the first language model able to preserve emotion across modalities.
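
A benchmark of this kind can be scored by checking whether the emotion label of the model's output matches that of the prompt, separately for each modality direction. The sketch below assumes the output labels come from some external classifier, which is not shown; the `Trial` type, the direction names, and the `preservation_accuracy` function are hypothetical and do not reproduce the benchmark's actual scoring code.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Trial(NamedTuple):
    direction: str      # e.g. "text->speech" (hypothetical naming)
    prompt_label: str   # emotion expressed by the prompt
    output_label: str   # emotion a classifier assigned to the model output


def preservation_accuracy(trials: Iterable[Trial]) -> Dict[str, float]:
    """Return, per direction, the fraction of trials whose output
    carries the same emotion label as the prompt."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for trial in trials:
        totals[trial.direction] += 1
        if trial.prompt_label == trial.output_label:
            hits[trial.direction] += 1
    return {direction: hits[direction] / totals[direction] for direction in totals}


if __name__ == "__main__":
    trials = [
        Trial("text->speech", "positive", "positive"),
        Trial("text->speech", "negative", "positive"),
        Trial("speech->text", "neutral", "neutral"),
    ]
    for direction, accuracy in preservation_accuracy(trials).items():
        print(f"{direction}: {accuracy:.2f}")
```

Reporting accuracy per direction keeps same-modality and cross-modality results apart, which is the distinction the benchmark is meant to expose.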

Future Improvements

Despite these advances, Meta's researchers acknowledge that SPIRIT LM has several areas for improvement. The model currently supports only English and will need to be extended to other languages. The researchers also consider the current model size suboptimal and expect that scaling it up will improve performance.

Conclusion

SPIRIT LM marks a significant step for Meta in artificial intelligence, pointing toward AI that can engage in emotionally expressive interactions. As development continues, SPIRIT LM is expected to inspire new applications in which AI converses in a more relatable, human-like manner, making interactions between people and AI systems more natural.

For more information, see the SPIRIT LM project page and the accompanying research paper.

Key Points

- SPIRIT LM is an open-source multimodal language model from Meta AI.

- The model excels in emotional expression, simulating human-like speech.

- Two versions of SPIRIT LM focus on different aspects of speech.

- Future improvements include expanding language support and model size.