Meta's MobileLLM-R1 Fuels Enterprise Shift to Small AI

Meta Introduces MobileLLM-R1 Amid Enterprise AI Downsizing Trend

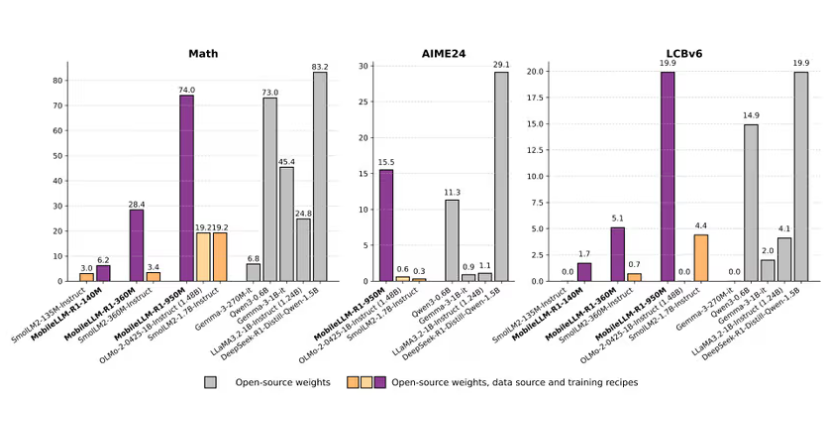

Meta has unveiled its MobileLLM-R1 series, a notable entry in the growing "small AI" movement aimed at enterprise applications. The models come in three sizes (140M, 360M, and 950M parameters) and are optimized for mathematics, coding, and scientific reasoning.
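To put these sizes in perspective for on-device use, the sketch below estimates how much memory the weights alone would occupy at common numeric precisions. It assumes memory is roughly parameter count times bytes per parameter and uses the nominal sizes from the model names. It ignores activations, the KV cache, and runtime overhead, so real usage will be higher.

```python
# Rough, weight-only memory estimate for the three MobileLLM-R1 sizes.
# Assumption: memory ~= parameter count x bytes per parameter, using the
# nominal sizes from the model names. Activations, KV cache, and runtime
# overhead are ignored, so actual memory use will be higher.

PARAM_COUNTS = {
    "MobileLLM-R1 140M": 140_000_000,
    "MobileLLM-R1 360M": 360_000_000,
    "MobileLLM-R1 950M": 950_000_000,
}

# Bytes per parameter for common storage precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mb(params: int, precision: str) -> float:
    """Approximate size of the weights in megabytes (10**6 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1_000_000


if __name__ == "__main__":
    for name, params in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_mb(params, p):,.0f} MB" for p in BYTES_PER_PARAM
        )
        print(f"{name}: {sizes}")
```

By this estimate, even the largest variant needs about 1.9 GB for its weights in 16-bit precision and roughly 475 MB at 4 bits, which is within reach of many laptops and phones.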

The Rise of Small Language Models

Traditionally, AI capability has been associated with massive models reaching hundreds of billions of parameters. However, enterprises increasingly recognize the practical limitations of relying on such models:

- Unpredictable costs from cloud-based large models

- Limited control over underlying systems

- Privacy concerns with third-party dependencies

The MobileLLM-R1 series addresses these challenges with a "deep but thin" architecture that stacks many relatively narrow layers. This design lets the models handle complex tasks on resource-constrained devices while remaining competitive on benchmarks.
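The "deep but thin" idea is essentially a trade-off between depth and width under a fixed parameter budget. The hypothetical sketch below makes that trade-off concrete. It assumes a plain transformer block with about 12·d² weights per layer (4·d² for attention projections, 8·d² for an MLP with hidden size 4·d) and ignores biases, norms, and embeddings. The budget and widths are illustrative and are not MobileLLM-R1's actual configurations.

```python
# Hypothetical illustration of the depth/width trade-off under a fixed
# parameter budget. Assumes a plain transformer block: 4*d^2 attention
# weights plus an MLP with hidden size 4*d (8*d^2 weights), i.e. ~12*d^2
# per layer. Biases, norms, and embeddings are ignored. These are NOT
# MobileLLM-R1's real configurations.


def params_per_layer(d_model: int) -> int:
    attention = 4 * d_model * d_model   # Q, K, V, and output projections
    mlp = 2 * d_model * (4 * d_model)   # up- and down-projection
    return attention + mlp


def layers_for_budget(budget: int, d_model: int) -> int:
    """Number of whole layers that fit in `budget` non-embedding parameters."""
    return budget // params_per_layer(d_model)


if __name__ == "__main__":
    budget = 300_000_000  # hypothetical non-embedding parameter budget
    for d_model in (2048, 1536, 1024, 768):
        n = layers_for_budget(budget, d_model)
        print(f"d_model={d_model:5d} -> {n:3d} layers")
```

Under these assumptions, shrinking the width from 2048 to 768 raises the depth from 5 to 42 layers for the same budget. A "deep but thin" design deliberately chooses the narrow, many-layer end of this range.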

Performance and Licensing Considerations

The 950M-parameter version slightly outperforms Alibaba's Qwen3-0.6B on the MATH benchmark and performs strongly on the LiveCodeBench coding evaluation. These results make MobileLLM-R1 a good fit for local code assistants integrated into development tools.

However, potential adopters should note that Meta currently releases MobileLLM-R1 under a non-commercial FAIR license, which restricts direct commercial deployment. This positions the models primarily as:

- A research reference model

- An internal tool framework

- A blueprint for customized implementations

Competitive Landscape in Small AI

The small language model market features several notable alternatives:

| Model | Parameter Count | Key Advantage |
|-------|-----------------|---------------|
| Google Gemma3 | 270M | Ultra-efficient performance |
| Alibaba Qwen3-0.6B | 600M | Unrestricted commercial use |
| Nvidia Nemotron-Nano | Varies | Customizable reasoning controls |

This industry shift mirrors a broader technological trend toward modular solutions, much like software's migration from monolithic architectures to microservices.

Future Outlook: Balanced AI Ecosystems

Industry analysts observe that small models don't replace their larger counterparts but rather complement them:

- Large models continue to play a role in refining training datasets

- Small models handle specialized enterprise applications

- Hybrid approaches are emerging that combine both paradigms

This evolution suggests a more sustainable and practical trajectory for applied artificial intelligence.
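One way such a hybrid setup might look in practice is a simple router that sends specialized or sensitive requests to a small local model and everything else to a large hosted one. The sketch below is purely hypothetical: the task labels, the `Request` type, and the backend names are illustrative placeholders, not part of MobileLLM-R1 or any real API.

```python
from dataclasses import dataclass

# Hypothetical hybrid routing policy. Task labels and backend names are
# illustrative placeholders, not a real product or library API.

LOCAL_TASKS = frozenset({"math", "code", "science"})


@dataclass(frozen=True)
class Request:
    task: str                           # e.g. "code", "summarize"
    contains_private_data: bool = False


def route(request: Request) -> str:
    """Return the name of the backend that should serve this request."""
    # Policy choice: private data never leaves the device, whatever the task.
    if request.contains_private_data:
        return "local-small-model"
    # Specialized tasks the small model is tuned for stay local.
    if request.task in LOCAL_TASKS:
        return "local-small-model"
    # Everything else falls back to the large hosted model.
    return "hosted-large-model"
```

For example, `route(Request("code"))` returns `"local-small-model"` and `route(Request("summarize"))` returns `"hosted-large-model"`. Checking privacy before task type is one possible design. A real deployment might weigh cost, latency, or model confidence instead.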

MobileLLM-R1 is available on Hugging Face.

Key Points:

- 🌟 Specialized Optimization: MobileLLM-R1 targets math, coding, and scientific applications with three parameter variants

- 🔍 Enterprise Advantages: Offers improved cost predictability, privacy controls, and system integration compared to large LLMs

- 🚀 Industry Shift: Reflects a growing preference for modular small-model solutions over monolithic large-model approaches