Meta's AU-Nets: A New Era in Text Processing

Meta Introduces AU-Nets to Transform Text Processing

In the rapidly evolving field of large language models (LLMs), how text is segmented into units (tokenization) has become a critical area of research. Traditional methods such as Byte Pair Encoding (BPE) rely on fixed units and a static vocabulary, and they often struggle with low-resource languages or scripts with complex character structures. Meta's research team has now introduced AU-Nets (Autoregressive U-Nets), a new architecture designed to overcome these limitations.

The AU-Net Architecture

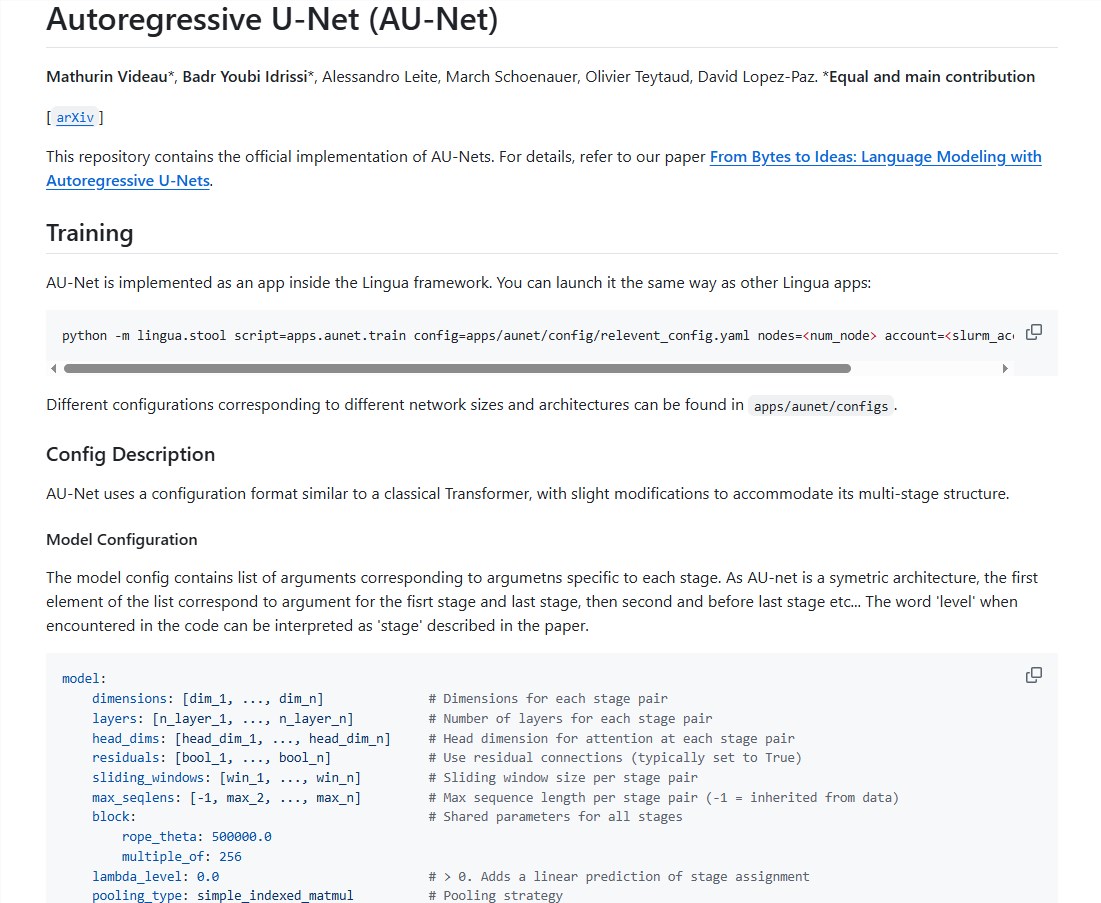

AU-Nets use an autoregressive U-Net structure that learns directly from raw bytes. This lets the model flexibly combine bytes into words, phrases, and even multi-word sequences, building dynamic representations at multiple levels. The design borrows from the U-Net architecture originally developed for medical image segmentation, including its two characteristic halves: a contraction path and an expansion path.
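To make "learning from raw bytes" concrete, the sketch below covers only the input side: text becomes UTF-8 byte ids, and word boundaries are located so that later stages know where to pool. Treating the space byte as the word delimiter is an assumption for illustration; the splitting rule AU-Nets actually use may differ, and `to_bytes` and `word_end_positions` are hypothetical helper names.

```python
SPACE = 0x20  # ASCII space, used here as the (assumed) word delimiter


def to_bytes(text: str) -> list[int]:
    """Encode text as UTF-8; every id lies in 0..255, so no learned vocabulary is required."""
    return list(text.encode("utf-8"))


def word_end_positions(byte_ids: list[int]) -> list[int]:
    """Index of the last byte of every word, treating spaces as word boundaries."""
    return [
        i
        for i, b in enumerate(byte_ids)
        if b != SPACE and (i + 1 == len(byte_ids) or byte_ids[i + 1] == SPACE)
    ]


ids = to_bytes("AU-Net learns 字节")
print(len(ids))                 # 20: each CJK character takes 3 bytes
print(word_end_positions(ids))  # [5, 12, 19]
```

Any string in any script maps onto the same 256 possible ids, which is why a byte-level model never meets an out-of-vocabulary symbol; the cost is longer sequences, which the contraction path then shortens.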

Contraction Path: Compressing Information

The contraction path compresses input byte sequences into higher-level semantic units. This process occurs in stages:

- First Stage: Raw bytes are processed directly, with attention restricted to a limited span.

- Second Stage: Byte representations are pooled at word boundaries, abstracting them into word-level semantics.

- Third Stage: Representations are pooled every two words to capture broader semantic context.
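The three stages can be traced with a toy sketch. It assumes that pooling works by selecting the hidden state at each split point, reads "limited attention" as a sliding causal window (one possible interpretation), and replaces the learned Transformer layers with a uniform average so the data flow stays visible. All function names and the window size are hypothetical.

```python
from typing import List

SPACE = 0x20
Vector = List[float]


def local_causal_mask(n: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to j: j <= i and i - j < window."""
    return [[0 <= i - j < window for j in range(n)] for i in range(n)]


def stage1_states(byte_ids: List[int], window: int) -> List[Vector]:
    """Stage 1 stand-in: uniform attention (a plain mean) over each byte's allowed window."""
    mask = local_causal_mask(len(byte_ids), window)
    states = []
    for row in mask:
        visible = [byte_ids[j] for j, allowed in enumerate(row) if allowed]
        states.append([sum(visible) / len(visible)])
    return states


def word_end_positions(byte_ids: List[int]) -> List[int]:
    """Split points for stage 2: the last byte of each space-delimited word."""
    return [
        i
        for i, b in enumerate(byte_ids)
        if b != SPACE and (i + 1 == len(byte_ids) or byte_ids[i + 1] == SPACE)
    ]


def pool_by_selection(states: List[Vector], positions: List[int]) -> List[Vector]:
    """Keep only the states at the split points; everything else is dropped."""
    return [states[p] for p in positions]


byte_ids = list("the cat sat down".encode("utf-8"))
s1 = stage1_states(byte_ids, window=4)     # stage 1: one vector per byte (16)
word_ends = word_end_positions(byte_ids)   # [2, 6, 10, 15]
s2 = pool_by_selection(s1, word_ends)      # stage 2: one vector per word (4)
s3 = pool_by_selection(s2, list(range(1, len(s2), 2)))  # stage 3: one per word pair (2)
print(len(s1), len(s2), len(s3))           # 16 4 2
```

In a real model each stage would run its own layers on the pooled sequence before pooling again; the sketch only shows how the sequence shrinks from bytes to words to word pairs.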

Expansion Path: Restoring Details

The expansion path progressively restores the compressed representations to byte resolution using a multilinear upsampling strategy, so that high-level information is merged with local detail. Skip connections from the contraction path carry forward fine-grained details that would otherwise be lost during compression.
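The upsampling step can be sketched under a few stated assumptions: "multilinear" is read here as applying a different linear map to each position within a segment, each coarse vector is broadcast only to the positions after its split point so that generation stays causal, and the skip connection is a plain element-wise addition. Names such as `multilinear_upsample` are hypothetical, and the weights are hand-picked rather than learned.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def multilinear_upsample(coarse: List[Vector], split_points: List[int],
                         n_fine: int, weights: List[Matrix]) -> List[Vector]:
    """Spread each coarse vector over the fine positions that follow its split point.

    The k-th position after a split uses its own matrix weights[k] (clamped to the
    last matrix for long segments). Positions before the first split get zeros, so
    no position sees a summary of bytes that come after it.
    """
    dim = len(coarse[0])
    out = [[0.0] * dim for _ in range(n_fine)]
    for seg, p in enumerate(split_points):
        end = split_points[seg + 1] if seg + 1 < len(split_points) else n_fine - 1
        for offset, pos in enumerate(range(p + 1, end + 1)):
            w = weights[min(offset, len(weights) - 1)]
            out[pos] = matvec(w, coarse[seg])
    return out


def add_skip(upsampled: List[Vector], skip: List[Vector]) -> List[Vector]:
    """Skip connection: add the contraction-path state at the same position."""
    return [[u + s for u, s in zip(uv, sv)] for uv, sv in zip(upsampled, skip)]


identity = [[1.0, 0.0], [0.0, 1.0]]
double = [[2.0, 0.0], [0.0, 2.0]]
up = multilinear_upsample([[1.0, 0.0], [0.0, 1.0]], split_points=[2, 5],
                          n_fine=8, weights=[identity, double])
fused = add_skip(up, [[0.5, 0.5]] * 8)
print(up[3], up[4], up[6])  # [1.0, 0.0] [2.0, 0.0] [0.0, 1.0]
print(fused[0], fused[7])   # [0.5, 0.5] [0.5, 2.5]
```

Using a separate matrix per offset lets a single word-level vector produce different signals for the first, second, and later bytes of the next segment, which plain copying could not.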

Inference and Efficiency

During inference, AU-Nets generate text autoregressively: each new byte is predicted from the bytes produced so far and then fed back as input. The aim is coherent, accurate output at a reasonable computational cost, and the byte-level design gives the model more flexibility across languages and tasks than a fixed vocabulary allows.
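The autoregressive loop itself is simple. The sketch below uses a hypothetical oracle `next_byte` in place of a trained AU-Net and counts how often a word-level stage would need to run, assuming (as in the contraction path) that deeper stages only receive a new input when a word boundary is produced. The actual inference schedule may differ.

```python
from typing import Callable, List, Tuple

SPACE = 0x20   # word boundary (assumed)
PERIOD = 0x2E  # byte that ends generation in this toy example


def generate(prompt: bytes, next_byte: Callable[[List[int]], int],
             max_new: int) -> Tuple[bytes, int]:
    """Greedy autoregressive decoding over bytes.

    Returns the generated text and the number of word boundaries emitted, i.e. how
    many times a word-level stage would receive a new pooled input.
    """
    seq = list(prompt)
    word_stage_calls = 0
    for _ in range(max_new):
        b = next_byte(seq)  # predict from everything generated so far
        seq.append(b)       # feed the prediction back in as input
        if b == SPACE:
            word_stage_calls += 1
        if b == PERIOD:
            break
    return bytes(seq), word_stage_calls


TARGET = b"the cat sat."


def oracle(seq: List[int]) -> int:
    """Stand-in for a trained model: simply spells out TARGET."""
    return TARGET[len(seq)]


text, calls = generate(b"the", oracle, max_new=20)
print(text, calls)  # b'the cat sat.' 2
```

Byte-level steps run on every iteration, while the word-level stage runs only twice here, once per completed word; under the stated assumption, that asymmetry is one plausible source of efficiency in a hierarchical byte model.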

Key Points

- Dynamic Representation: AU-Nets dynamically combine bytes into multi-level sequences.

- Semantic Integration: The contraction and expansion paths combine high-level context with local detail.

- Efficient Generation: Autoregressive byte-level generation aims to deliver accurate output without sacrificing inference speed.

For more details, visit the open-source repository.