Meta Open-Sources DINOv3: A Leap in AI Vision Without Human Labels

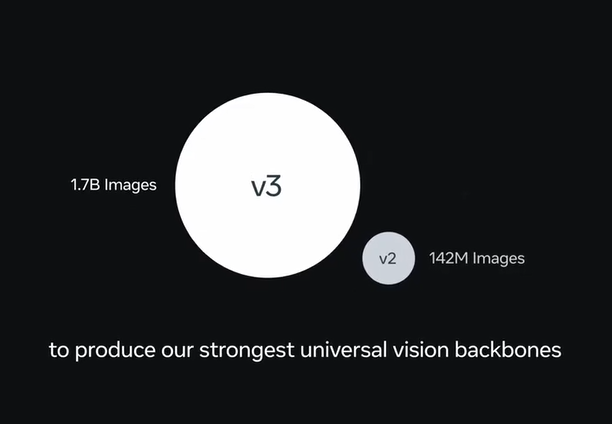

Meta AI has open-sourced DINOv3, its next-generation computer vision model, marking a significant advance in self-supervised learning. Unlike traditional models that require extensive labeled datasets, DINOv3 learns visual features directly from unannotated images. Early adopters estimate that this cuts data preparation costs by 40-60%.

Self-Supervised Learning Breakthrough

DINOv3's framework eliminates manual annotation through self-distillation: a student network learns to match the output of a teacher network across different views and patches of the same image, so no labels are needed. Benchmarks show it matches or exceeds the performance of weakly supervised, image-text-trained models such as SigLIP 2 and Perception Encoder across 12 standard datasets.
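The DINO family's training signal is usually described as self-distillation between a student and a teacher network. The following is a minimal sketch of that idea in plain Python, not DINOv3's actual training code: the function names, temperatures, and momentum value are illustrative assumptions, and the released recipe combines further loss terms that are omitted here.

```python
import math

def log_softmax(logits, temperature):
    """Temperature-scaled log-softmax, stabilised by subtracting the max."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]

def distillation_loss(student_logits, teacher_logits, center,
                      student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between teacher and student output distributions.

    The teacher output is centered and sharpened with a low temperature.
    In a real training loop no gradient flows through the teacher.
    Temperatures here are illustrative assumptions.
    """
    centered = [t - c for t, c in zip(teacher_logits, center)]
    p_teacher = [math.exp(v) for v in log_softmax(centered, teacher_temp)]
    log_p_student = log_softmax(student_logits, student_temp)
    return -sum(pt * ls for pt, ls in zip(p_teacher, log_p_student))

def ema_update(teacher_weights, student_weights, momentum=0.996):
    """Teacher weights follow the student as an exponential moving average."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_weights, student_weights)]

if __name__ == "__main__":
    center = [0.0, 0.0, 0.0]
    teacher_view = [1.0, 0.0, -0.5]      # teacher output for one view of an image
    agreeing = [2.0, 0.0, -1.0]          # student output for another view, same ranking
    disagreeing = [-1.0, 0.0, 2.0]       # student output with the ranking reversed
    print("agreeing loss:   ", distillation_loss(agreeing, teacher_view, center))
    print("disagreeing loss:", distillation_loss(disagreeing, teacher_view, center))
```

The loss is small when the student ranks the output dimensions the same way the teacher does and large when it does not, which is what pushes the student toward view-invariant features without any labels.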

High-Resolution Feature Mastery

The model captures both global context and pixel-level details, enabling:

- Dense feature extraction for satellite/medical imagery

- Unified performance across classification, segmentation, and depth estimation

- Cross-domain adaptability with 21M to 7B parameter variants
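Dense features from a Vision Transformer backbone are typically one vector per image patch, which can be reshaped into a spatial map and compared patch to patch. The sketch below shows that bookkeeping in plain Python. The patch size of 16 and the `num_prefix_tokens` parameter (for class or other non-patch tokens) are assumptions for illustration, not values confirmed by the article, and the helper names are hypothetical.

```python
import math

def patch_grid(height, width, patch_size=16):
    """Return (rows, cols) of the patch grid for an image of the given size."""
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of the patch size")
    return height // patch_size, width // patch_size

def tokens_to_feature_map(tokens, height, width, patch_size=16, num_prefix_tokens=0):
    """Drop non-patch prefix tokens and reshape the rest into rows x cols."""
    rows, cols = patch_grid(height, width, patch_size)
    patches = tokens[num_prefix_tokens:]
    if len(patches) != rows * cols:
        raise ValueError("token count does not match the patch grid")
    return [patches[r * cols:(r + 1) * cols] for r in range(rows)]

def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

if __name__ == "__main__":
    # A 32x48 image with 16-pixel patches gives a 2x3 grid of patch tokens.
    # The vectors are made-up 2-D stand-ins for real backbone features.
    prefix = [[0.0, 0.0]]
    patch_tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0],
                    [1.0, 0.1], [0.1, 1.0], [0.0, 0.9]]
    fmap = tokens_to_feature_map(prefix + patch_tokens, 32, 48, num_prefix_tokens=1)
    print(len(fmap), "rows x", len(fmap[0]), "cols")
    print(cosine_similarity(fmap[0][0], fmap[0][1]))
```

A feature map in this shape is what a lightweight segmentation or depth head would consume, while the patch-to-patch similarity is a common way to inspect how well the features separate regions.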

Industry Applications

Key use cases include:

- Environmental monitoring: Tracking deforestation via satellite data (85% accuracy in pilot tests)

- Healthcare: Reducing radiology annotation time by 70% in tumor detection

- Autonomous vehicles: Reducing object recognition latency by 30 ms

Open-Source Ecosystem Impact

Released under a commercial-friendly license, the DINOv3 release includes:

- Integration with PyTorch Hub and Hugging Face Transformers

- Pre-trained models for varying computational resources

- Evaluation tools for downstream tasks

The Hugging Face community reports 3,500+ downloads within 48 hours of release.

Key Points

- Eliminates manual annotation through self-supervised learning

- Processes high-resolution images with global/local detail retention

- Available in parameter sizes from 21M to 7B

- Potential ethical concerns around surveillance applications

- GitHub: facebookresearch/dinov3