Meta Open-Sources DINOv3: A Game-Changer in AI Vision

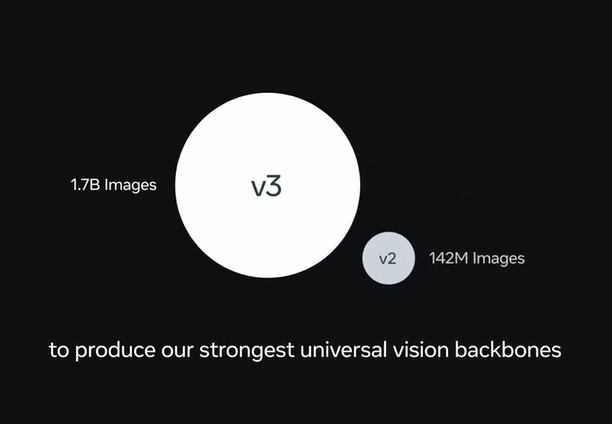

Meta AI has open-sourced DINOv3, the latest generation of its general-purpose vision model. Unlike models trained on manually annotated data, DINOv3 uses self-supervised learning to learn features from unlabeled images, which cuts data preparation costs and widens the range of domains where it can be applied.

Self-Supervised Learning: A Paradigm Shift

DINOv3's defining trait is that it trains without manual annotations. Supervised models need large labeled datasets, whereas DINOv3 learns directly from raw images and is reported to match or exceed leading models such as SigLIP 2 and Perception Encoder. This is particularly valuable where labeled data is scarce or annotation is prohibitively expensive.
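To make label-free training more concrete, here is a toy sketch of the self-distillation idea introduced by the original DINO method: a student network is trained to match the output of a teacher whose weights are a moving average of the student's. This is an illustration only and an assumption about what is useful to show here. DINOv3's actual training recipe is more elaborate and is not reproduced, and the function names, temperatures, and momentum value are illustrative choices.

```python
import math

def softmax(logits, temperature):
    """Numerically stable softmax with a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def log_softmax(logits, temperature):
    """Numerically stable log-softmax with a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_total for x in scaled]

def distillation_loss(student_logits, teacher_logits, center,
                      student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between teacher and student distributions.

    The teacher output is centered and sharpened (low temperature).
    No labels appear anywhere: the target comes from the teacher itself.
    Temperatures are illustrative values, not DINOv3 settings.
    """
    centered = [t - c for t, c in zip(teacher_logits, center)]
    teacher_probs = softmax(centered, teacher_temp)
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lq for p, lq in zip(teacher_probs, student_log_probs))

def ema_update(teacher_weights, student_weights, momentum=0.996):
    """Move teacher weights slowly toward the student (exponential moving average)."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_weights, student_weights)]

# Two augmented views of the same image should yield similar outputs.
teacher_out = [2.0, 0.5, -1.0]   # hypothetical teacher logits for view A
center = [0.0, 0.0, 0.0]         # running mean of teacher outputs (zero here)
agreeing_student = [2.0, 0.5, -1.0]
disagreeing_student = [-1.0, 0.5, 2.0]

loss_agree = distillation_loss(agreeing_student, teacher_out, center)
loss_disagree = distillation_loss(disagreeing_student, teacher_out, center)
```

In this toy setup the loss is small when the student agrees with the teacher and large when it does not, so minimizing it pushes the two views of an image toward the same representation without any human-provided label.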

High-Resolution Feature Extraction

DINOv3 excels at capturing both global context and local detail within an image, producing high-quality dense feature representations. This capability supports a wide range of visual tasks, including:

- Image classification

- Object detection

- Semantic segmentation

- Image retrieval

- Depth estimation

The model’s versatility extends beyond standard photos to complex data types like satellite and medical images, making it a powerful tool for cross-domain applications.
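As an illustration of how such features are typically used for one of the tasks above, image retrieval, the sketch below ranks a small gallery by cosine similarity to a query embedding. The three-dimensional vectors and tile names are hypothetical placeholders standing in for real DINOv3 embeddings, which have hundreds or thousands of dimensions. The `retrieve` helper is not part of any DINOv3 API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query, gallery, top_k=2):
    """Return the top_k (name, score) pairs most similar to the query."""
    scored = [(name, cosine_similarity(query, vec))
              for name, vec in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings; in practice these would come from a frozen backbone.
gallery = {
    "forest_tile": [0.9, 0.1, 0.0],
    "urban_tile": [0.1, 0.9, 0.2],
    "water_tile": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

results = retrieve(query, gallery, top_k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because the backbone stays frozen, the same embeddings can be reused for other tasks, with only a lightweight component such as this similarity search or a small classifier built on top.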

Broad Industry Applications

DINOv3’s adaptability opens doors to transformative use cases across industries:

- Environmental Monitoring: Analyzing satellite imagery for forest coverage and land-use changes.

- Autonomous Driving: Enhancing object detection and scene understanding for safer navigation.

- Healthcare: Assisting in lesion detection and organ segmentation for improved diagnostics.

- Security Surveillance: Enabling advanced behavior analysis and person identification.

The open-source release empowers small businesses and research institutions to leverage state-of-the-art AI without prohibitive costs.

Open-Source Ecosystem Integration

Meta has made DINOv3 accessible under a commercial-friendly license, providing:

- Complete training code and pre-trained models (21M to 7B parameters).

- Support for PyTorch Hub and Hugging Face Transformers.

- Evaluation code and example notebooks for rapid adoption.

Developers have praised the model’s ease of integration and performance within the Hugging Face ecosystem.
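For readers who want to try the Hugging Face route, the following is a minimal sketch of loading a checkpoint and extracting features with the generic `AutoModel` and `AutoImageProcessor` classes. Several things here are assumptions to verify: the model ID is believed to be the smallest ViT checkpoint on the Hub but should be checked against the official model cards, access may require accepting the license on the Hub, and a recent `transformers` release is needed. The helper names `load_backbone` and `embed_image` are hypothetical.

```python
# Assumed Hub ID for the smallest ViT checkpoint; verify on the model card.
DEFAULT_MODEL_ID = "facebook/dinov3-vits16-pretrain-lvd1689m"

def load_backbone(model_id=DEFAULT_MODEL_ID):
    """Load the image processor and frozen backbone from the Hugging Face Hub."""
    # Imported lazily so this file can be read without transformers installed.
    from transformers import AutoImageProcessor, AutoModel

    processor = AutoImageProcessor.from_pretrained(model_id)
    model = AutoModel.from_pretrained(model_id)
    model.eval()  # inference only; the backbone is used frozen
    return processor, model

def embed_image(image, processor, model):
    """Return (global_embedding, token_embeddings) for one PIL image."""
    import torch

    inputs = processor(images=image, return_tensors="pt")
    with torch.inference_mode():
        outputs = model(**inputs)
    # pooler_output: one vector per image (global feature).
    # last_hidden_state: per-token features, including patch tokens
    # that serve as dense features for segmentation or depth.
    return outputs.pooler_output, outputs.last_hidden_state
```

A typical call would open an image with Pillow, run `processor, model = load_backbone()`, and then pass the image to `embed_image`. The global vector suits classification or retrieval, while the per-token output suits dense tasks.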

Ethical Considerations

While DINOv3’s potential is vast, experts caution about risks such as privacy violations and algorithmic bias. Addressing these ethical challenges will be critical as the technology proliferates.

Key Points:

- No Manual Annotations Needed: DINOv3 trains via self-supervised learning, reducing reliance on labeled data.

- High-Resolution Features: Captures both global context and fine-grained details.

- Cross-Domain Versatility: Applicable to medical imaging, autonomous driving, and more.

- Open-Source Access: Lowers barriers for developers with pre-trained models and tutorials.

- Ethical Vigilance: Requires careful deployment to mitigate privacy and bias concerns.

The release of DINOv3 underscores Meta’s commitment to advancing open-source AI while setting a new standard for visual intelligence.