MemSET Unveils MiniCPM 4.0: 220x Faster Edge AI Model

MemSET Intelligence has made waves in the AI industry with the launch of its MiniCPM 4.0 series, a groundbreaking advancement in edge-side large language models. Released on June 6, these models promise to redefine what's possible for on-device AI applications.

The series introduces two standout variants: an 8B Lightning Sparse Edition that leverages innovative sparse architecture, and a compact 0.5B version that punches above its weight class. Together, they represent significant progress in speed, efficiency, and practical deployment capabilities.

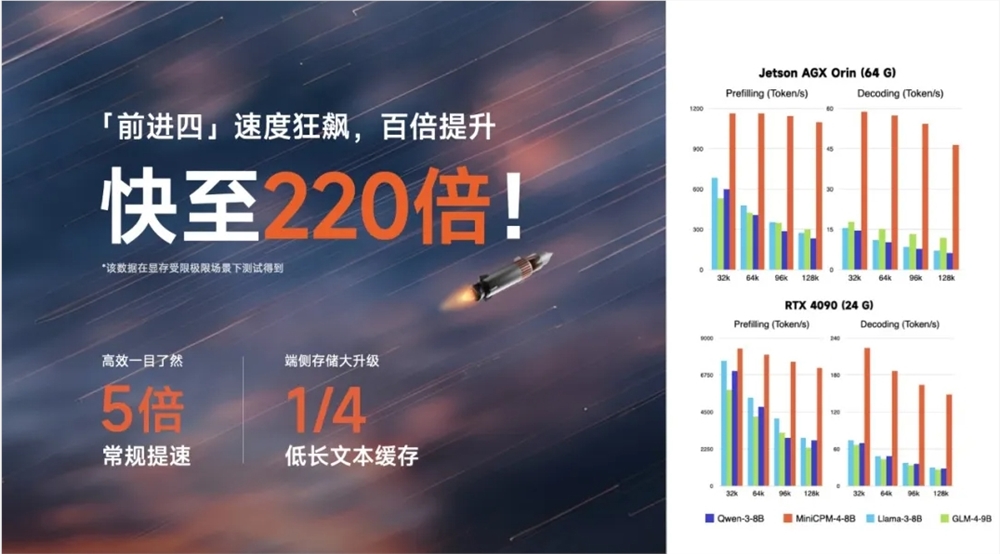

Performance Breakthroughs

The most eye-catching improvement is processing speed. MiniCPM 4.0 runs up to 220 times faster in extreme cases and about five times faster in more typical scenarios. MemSET attributes the gain to system-level sparsity innovations applied at each layer of the stack.

A mechanism MemSET calls dual-frequency shifting lets the model switch dynamically between sparse and dense attention depending on text length. This speeds up long-text processing and also cuts storage needs: the model requires just 25% of the cache space used by comparable models such as Qwen3-8B.
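The article does not say how the switch is implemented. Below is a minimal sketch of the idea, assuming a single length threshold: short inputs use ordinary dense attention, and long inputs attend to only a small share of key positions. The names `DENSE_MAX_TOKENS`, `SPARSE_KEEP_PERCENT`, and both functions are hypothetical, and the threshold value is illustrative rather than MiniCPM 4.0's actual setting.

```python
# Hypothetical sketch of length-based switching between dense and sparse attention.
# The threshold and keep percentage are illustrative assumptions, not MiniCPM 4.0 settings.

DENSE_MAX_TOKENS = 8192   # assumed switch-over point between the two modes
SPARSE_KEEP_PERCENT = 5   # assumed share of key positions kept in sparse mode


def choose_attention_mode(seq_len: int) -> str:
    """Return 'dense' for short inputs and 'sparse' for long ones."""
    return "dense" if seq_len <= DENSE_MAX_TOKENS else "sparse"


def keys_attended_per_query(seq_len: int) -> int:
    """Number of key positions each query attends to under the chosen mode."""
    if choose_attention_mode(seq_len) == "dense":
        return seq_len
    # Integer ceiling of seq_len * 5 / 100, avoiding floating-point rounding.
    return max(1, (seq_len * SPARSE_KEEP_PERCENT + 99) // 100)
```

For a 1,000-token prompt this picks dense attention over all 1,000 positions; for a 128,000-token input it switches to sparse mode and each query attends to 6,400 positions.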

Efficiency Innovations

MemSET has delivered what it calls "industry-first full open-source system-level context sparsity" technology. The models achieve extreme acceleration even at a sparsity level of just 5%, thanks to optimizations spanning architecture, system design, inference, and data handling.
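To make the 5% figure concrete, here is a generic top-k block-sparse attention sketch in plain Python, assuming the figure refers to the share of key blocks each query attends to. Keys are grouped into fixed-size blocks, each block is scored against the query using its mean key, and softmax attention runs only over the top-scoring blocks. The function name, `block_size`, and the mean-key scoring rule are assumptions for illustration; this is not MemSET's kernel.

```python
import math


def block_sparse_attention(query, keys, values, block_size=4, keep_ratio_pct=5):
    """Generic top-k block-sparse attention for a single query (illustrative only)."""
    n = len(keys)
    dim = len(query)
    # Group key positions into contiguous blocks.
    blocks = [list(range(s, min(s + block_size, n))) for s in range(0, n, block_size)]

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    def block_score(idx):
        # Score a block by the query's dot product with the block's mean key.
        mean_key = [sum(keys[i][d] for i in idx) / len(idx) for d in range(dim)]
        return dot(query, mean_key)

    # Keep roughly keep_ratio_pct percent of blocks (integer ceiling, at least one).
    n_keep = max(1, (len(blocks) * keep_ratio_pct + 99) // 100)
    top_blocks = sorted(blocks, key=block_score, reverse=True)[:n_keep]
    selected = sorted(i for blk in top_blocks for i in blk)

    # Scaled dot-product softmax attention restricted to the selected keys.
    scores = [dot(query, keys[i]) / math.sqrt(dim) for i in selected]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    out = [sum(w * values[i][d] for w, i in zip(weights, selected)) / total
           for d in range(len(values[0]))]
    return out, selected
```

With 80 keys in blocks of 4 (20 blocks), a 5% budget keeps a single block, so each query computes attention over 4 keys instead of 80.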

Performance metrics tell an impressive story. According to MemSET, the tiny 0.5B version delivers double the performance of larger models while requiring just 2.7% of their training cost. Meanwhile, the 8B sparse variant matches or surpasses competitors such as Qwen3 and Gemma 3 12B with only 22% of their training expenditure.

Practical Deployment Advantages

For developers implementing edge AI solutions, MiniCPM 4.0 offers compelling advantages:

- Integration with MemSET's in-house CPM.cu ultra-fast inference framework

- Advanced speculative sampling techniques

- Innovative model compression and quantization

- Optimized endpoint deployment architecture

Together, these reduce model size by 90% while keeping inference fast from end to end.
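Speculative sampling is a general technique: a small draft model proposes several tokens cheaply, and the large target model checks them, keeping the longest prefix it agrees with. The sketch below shows the greedy variant with toy models represented as plain functions; `speculative_decode`, the `Model` type, and the draft length `k` are illustrative assumptions, not the CPM.cu API. For clarity the target is called once per position here, whereas real implementations verify all proposed tokens in one batched forward pass, which is where the speedup comes from.

```python
from typing import Callable, List

# A toy "model": given a token prefix, return its greedy next token.
Model = Callable[[List[int]], int]


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding sketch (illustrative, not a real framework API)."""
    tokens = list(prompt)
    while len(tokens) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target model verifies them in order and keeps the agreeing prefix.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # use the target's token at the first mismatch
                break
            accepted.append(tok)
        tokens.extend(accepted)  # always at least one new token per round
    return tokens[:len(prompt) + max_new]
```

Because every kept token is one the target model would have chosen itself, the output matches plain greedy decoding with the target alone; the draft model only reduces how many target passes are needed.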

The models already support major chip platforms including Intel, Qualcomm, MediaTek, and Huawei Ascend processors. Compatibility with multiple open-source frameworks further extends their potential applications across devices.

Developers can access the models through:

Key Points

- MiniCPM 4.0 delivers up to 220x faster processing in edge deployments

- Dual-frequency shifting enables dynamic optimization for different text lengths

- Requires just 25% of the cache storage used by similar models

- Tiny 0.5B version outperforms larger models at a fraction of the training cost

- Already compatible with major chip platforms including Intel and Qualcomm