Japan's Shisa V2 405B AI Model Outperforms GPT-4 in Japanese Language Tasks

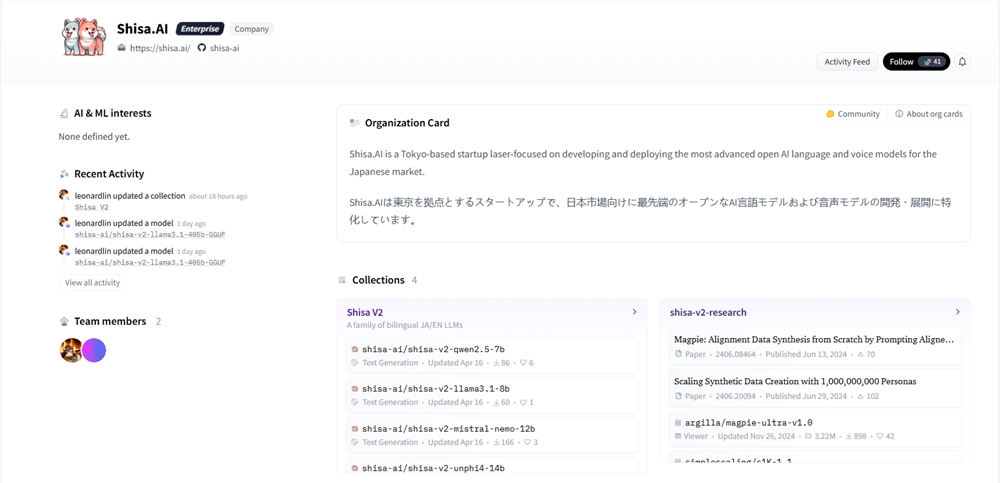

Tokyo-based AI startup Shisa.AI has made waves in the artificial intelligence community with the release of Shisa V2 405B, an open-source large language model that the company says outperforms GPT-4 on Japanese-language tasks while retaining strong English capabilities.

Breakthrough Performance in Japanese AI

Built on the Llama 3.1 architecture, Shisa V2 405B is billed by Shisa.AI as "the strongest large language model ever trained in Japan." According to the company's benchmark results, it outperforms GPT-4 and GPT-4 Turbo on Japanese-language tasks and performs on par with frontier models such as GPT-4o and DeepSeek-V3 on Japanese applications.

Optimized for Japanese Processing

Unlike earlier approaches built on costly continued pre-training, Shisa.AI concentrated on refining the post-training process with synthetic data. Its bilingual dataset, ultra-orca-boros-en-ja-v1, is described by the company as one of the strongest Japanese-English resources available. Shisa.AI has released the dataset for free under the Apache 2.0 license, giving developers worldwide a resource for building Japanese language models.

Scalable Model Architecture

The Shisa V2 series spans 7B to 405B parameters, targeting deployments from mobile devices to high-performance computing systems. The models are tuned for specialized tasks including Japanese grammar, role-playing, and translation. The 405B version also incorporates a limited amount of Korean and Traditional Chinese data in training, broadening its multilingual capabilities.
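
As a rough illustration of why the lineup spans such different hardware, the sketch below estimates weight-only memory for the smallest and largest model sizes. It assumes dense models stored at a uniform precision and uses common bytes-per-parameter figures for bf16, int8, and int4; it ignores the KV cache, activations, and runtime overhead. The function name is hypothetical, and the numbers are simple arithmetic, not figures published by Shisa.AI.

```python
# Back-of-envelope weight memory for dense LLMs (illustrative sketch).
# Assumptions: all parameters are dense and stored at a uniform precision;
# KV cache, activations, and framework overhead are NOT included.

# Approximate storage cost per parameter for common precisions.
BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit brain floating point
    "int8": 1.0,  # 8-bit quantized weights
    "int4": 0.5,  # 4-bit quantized weights
}


def weight_memory_gb(params_billions: float, dtype: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return params_billions * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    # Smallest and largest sizes in the Shisa V2 lineup, per the article.
    for size in (7, 405):
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(size, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{size}B -> {row}")
```

Under these assumptions, the 405B weights alone need about 810 GB at bf16, more than a single node of eight 80 GB H100 GPUs (640 GB) holds, while a 7B model quantized to 4 bits needs roughly 3.5 GB.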

Open-Source Commitment Drives Innovation

Shisa.AI has taken an unusually transparent approach, publishing its training logs on the Weights & Biases platform. The models were trained on a four-node H100 cluster on AWS SageMaker, using tools including Axolotl, DeepSpeed, and Liger Kernel. The company also plans to contribute further to the AI community by open-sourcing its Japanese-specific benchmarking tools.

This development marks a significant milestone for Japan's position in global AI research. For developers working with complex Japanese language applications, the Shisa V2 series presents a compelling alternative to mainstream models.

Key Points

- Shisa V2 405B outperforms GPT-4 in Japanese language benchmarks while maintaining strong English capabilities

- The model relies on post-training with synthetic data rather than costly continued pre-training

- Available in parameter sizes from 7B to 405B for diverse application needs

- The 405B model's training data includes limited Korean and Traditional Chinese content for broader multilingual support

- Training logs and the bilingual dataset are publicly available, with Japanese benchmarking tools slated for open-source release