Jan-v1 AI Model Challenges Perplexity Pro with Local Processing

Open-Source Breakthrough: Jan-v1 Emerges as Perplexity Pro Competitor

The AI development community has a significant new contender in Jan-v1, an open-source model fine-tuned from Alibaba Cloud's Qwen3-4B-Thinking model. With a reported 91% accuracy on the SimpleQA benchmark, this locally operable model presents a compelling alternative to commercial offerings like Perplexity Pro.

Performance Benchmarks and Technical Specifications

Key advantages of Jan-v1 include:

- 256K token context window (expandable to 1M via YaRN technology)

- 4GB VRAM requirement for local operation

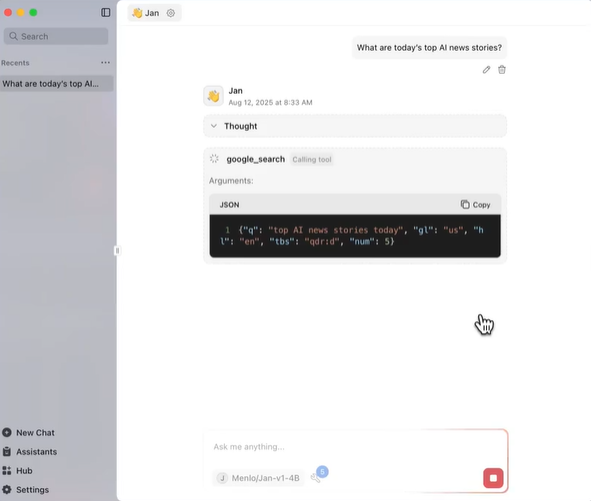

- Specialized optimization for multi-step reasoning and tool integration
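
As a rough sanity check on the 4GB VRAM figure, the sketch below estimates the memory taken by the model weights alone at different precisions. It assumes the "4B" in the base model's name means roughly 4 billion parameters, and it ignores the KV cache and activations, which add to the total. The function name and the nominal parameter count are illustrative, not taken from the Jan-v1 release.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


# Assumption: "4B" is read as roughly 4 billion parameters.
NOMINAL_PARAMS = 4e9

for bits in (16, 8, 4):
    gib = weight_memory_gib(NOMINAL_PARAMS, bits)
    print(f"{bits}-bit weights: {gib:.2f} GiB")
```

Under these assumptions, 16-bit weights come to about 7.5 GiB, 8-bit to about 3.7 GiB, and 4-bit to about 1.9 GiB, which suggests the 4GB figure most plausibly refers to a quantized build.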

The model's dual-mode architecture features distinct "thinking" and "non-thinking" modes, with the former generating structured reasoning traces for analytical transparency. This proves particularly valuable for academic research and complex problem-solving scenarios.

Privacy-Focused Architecture

Unlike cloud-dependent alternatives, Jan-v1 runs entirely on local hardware while maintaining competitive performance. Developers highlight several benefits of this design:

- No data transmission to external servers

- Reduced latency and outage risks

- Flexible deployment options including vLLM and llama.cpp

The recommended configuration uses temperature 0.6 and top_p 0.95 for optimal output quality.
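
One way these sampling settings might be applied is through the OpenAI-compatible chat endpoint that both vLLM and llama.cpp's server can expose. The sketch below is illustrative only: the model name `jan-v1`, the base URL, the `max_tokens` default, and the helper function names are assumptions, not values from the Jan-v1 release, and should be adjusted to match the local server.

```python
import json
import urllib.request

# Sampling values quoted in the article.
RECOMMENDED_SAMPLING = {"temperature": 0.6, "top_p": 0.95}


def build_chat_request(prompt, model="jan-v1", max_tokens=1024):
    """Build an OpenAI-style chat payload using the recommended sampling.

    The model name and max_tokens defaults are hypothetical placeholders.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        **RECOMMENDED_SAMPLING,
    }


def send_chat_request(payload, base_url="http://localhost:8000/v1"):
    """POST the payload to a local OpenAI-compatible server.

    The base URL is an assumption; use whatever address the server reports.
    """
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `send_chat_request(build_chat_request("What is YaRN?"))` would return the model's reply as a string. Keeping the payload builder separate from the network call makes the sampling settings easy to inspect or reuse with a different client.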

Community Response and Future Development

Released under the Apache 2.0 license, Jan-v1 has sparked enthusiastic discussion within developer circles. Early adopters praise its:

- Efficiency in low-resource environments

- Transparent reasoning processes

- Integration with the Jan App ecosystem

Some community members note potential limitations with extremely complex tasks, though the open-source nature allows for ongoing improvements through community contributions.

Key Points

- 🚀 91% SimpleQA accuracy surpasses many commercial alternatives

- 🔒 Full local operation enhances data privacy and reduces costs

- 🧠 Dual-mode reasoning enables transparent analytical processes

- 🌐 Apache 2.0 license fosters community-driven development

The model is available on Hugging Face.