Introducing HelloMeme: A Leap in Expression Transfer Technology

Introduction to HelloMeme

A research team recently released HelloMeme, an AI framework that transfers facial expressions with high fidelity from a person in one scene to a different person in another.

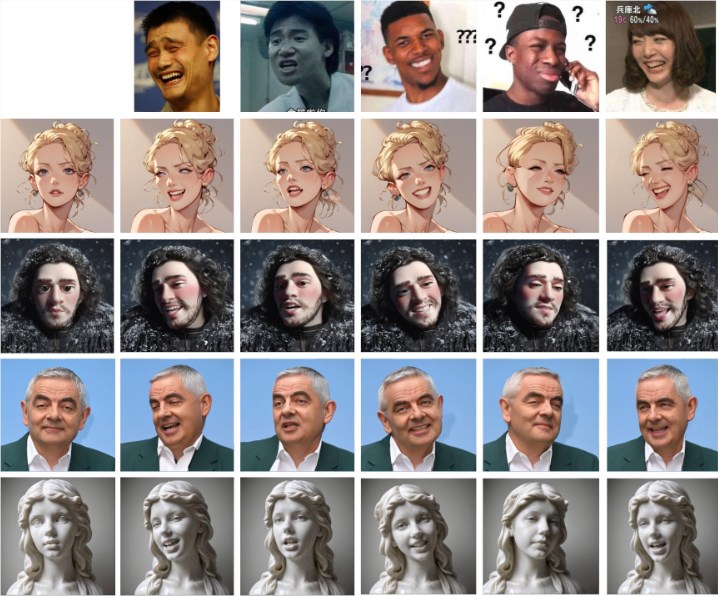

As the image above shows, users supply an expression image (first row), and its detailed facial expression is transferred to images of other people.

Unique Network Structure

HelloMeme's core functionality rests on its network structure. The framework extracts features from each frame of a driving video and passes them through the HMControlModule, which makes video generation possible. Early outputs, however, flickered between frames, which detracted from the viewing experience. To address this, the team introduced the AnimateDiff module, which improved video continuity at a slight cost to fidelity.

To recover that fidelity, the researchers further optimized the AnimateDiff module, ultimately balancing improved video continuity with high-quality image output.
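The trade-off between continuity and fidelity described above can be made concrete with a toy example. The sketch below is not AnimateDiff or any part of HelloMeme: it swaps in a plain exponential moving average as a stand-in for a temporal module, and the frame data and function names (`flicker`, `error`, `temporal_smooth`) are hypothetical. It only shows how smoothing across frames can lower frame-to-frame flicker while pulling each frame away from its target.

```python
# Toy illustration only: an exponential moving average stands in for a
# learned temporal module. It is NOT how AnimateDiff or HelloMeme works.

def flicker(frames):
    """Mean absolute change between consecutive frames (lower = smoother)."""
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for a, b in zip(prev, cur):
            total += abs(a - b)
            count += 1
    return total / count


def error(frames, reference):
    """Mean absolute error against per-frame targets (lower = more faithful)."""
    total, count = 0.0, 0
    for frame, ref in zip(frames, reference):
        for a, b in zip(frame, ref):
            total += abs(a - b)
            count += 1
    return total / count


def temporal_smooth(frames, alpha):
    """Blend each frame with the previous smoothed frame.

    alpha = 1.0 keeps frames unchanged; smaller values smooth more.
    """
    smoothed = [list(frames[0])]
    for frame in frames[1:]:
        prev = smoothed[-1]
        smoothed.append([alpha * x + (1 - alpha) * p for x, p in zip(frame, prev)])
    return smoothed


# Hypothetical data: each "frame" is a flat list of pixel intensities.
# The target brightens steadily; the raw output adds alternating jitter.
target = [[0.1 * t] * 4 for t in range(6)]
raw = [[v + (0.05 if t % 2 else -0.05) for v in frame] for t, frame in enumerate(target)]
smooth = temporal_smooth(raw, alpha=0.5)

print(f"flicker raw={flicker(raw):.3f} smoothed={flicker(smooth):.3f}")
print(f"error   raw={error(raw, target):.3f} smoothed={error(smooth, target):.3f}")
```

On this data the smoothed sequence flickers less than the raw one but sits further from the target frames, which is the kind of fidelity loss a temporal module then has to be tuned to avoid.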

Enhanced Video Editing Capabilities

Beyond expression transfer, HelloMeme also supports facial expression editing. By integrating ARKit Face Blendshapes, it lets users directly control the facial expressions of characters in the generated videos, so creators can produce videos that convey specific emotions and expressions.
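As a rough illustration of what blendshape-driven control involves, ARKit describes a face as a set of named coefficients, each between 0 and 1. The sketch below builds a per-frame coefficient track that eases from a neutral face to a smile. The coefficient names are standard ARKit blendshape locations, but the helper functions (`blend`, `expression_track`) and the way such a track would be fed into HelloMeme are assumptions, not something the framework's interface is confirmed to expose.

```python
# ARKit blendshape coefficients are floats in [0, 1], keyed by location name.
# The keys below are real ARKit names; the helper functions are hypothetical.

NEUTRAL = {"jawOpen": 0.0, "mouthSmileLeft": 0.0, "mouthSmileRight": 0.0, "browInnerUp": 0.0}
SMILE = {"jawOpen": 0.2, "mouthSmileLeft": 0.9, "mouthSmileRight": 0.9, "browInnerUp": 0.1}


def clamp01(value):
    """Keep a coefficient inside the valid [0, 1] range."""
    return max(0.0, min(1.0, value))


def blend(start, end, t):
    """Linearly interpolate two coefficient sets; t=0 gives start, t=1 gives end."""
    return {name: clamp01((1 - t) * start[name] + t * end[name]) for name in start}


def expression_track(start, end, num_frames):
    """Return one coefficient dict per frame, moving from start to end."""
    if num_frames < 2:
        return [dict(end)]
    return [blend(start, end, i / (num_frames - 1)) for i in range(num_frames)]


track = expression_track(NEUTRAL, SMILE, num_frames=5)
for i, coeffs in enumerate(track):
    print(i, {k: round(v, 2) for k, v in coeffs.items()})
```

Editing an expression then amounts to changing a few numbers per frame, which is what makes this representation convenient for creators.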

Technical Compatibility

Technically, HelloMeme uses a hot-swappable adapter design built on Stable Diffusion 1.5 (SD1.5). The main advantage of this design is that it preserves the generalization capability of the underlying T2I (text-to-image) model, so stylized models derived from SD1.5 can be integrated with HelloMeme easily. This opens up a wide range of creative applications.
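The idea of a hot-swappable adapter can be sketched conceptually, independent of any real diffusion library. In the toy code below, `BaseModel` and `ExpressionAdapter` are hypothetical stand-ins: the base weights are never modified, the adapter only adds a residual to the output, and the same adapter attaches to a second "stylized" model with the same layout. This is an assumption-laden illustration of the design principle, not HelloMeme's actual implementation.

```python
class BaseModel:
    """Stand-in for a pretrained T2I backbone; its weights are never modified."""

    def __init__(self, weights):
        self.weights = tuple(weights)  # immutable, so adapters cannot alter it
        self.adapter = None

    def attach(self, adapter):
        self.adapter = adapter

    def detach(self):
        self.adapter = None

    def forward(self, x):
        out = [w * x for w in self.weights]
        if self.adapter is not None:
            # The adapter adds a residual on top of the frozen base output.
            out = [o + r for o, r in zip(out, self.adapter(x))]
        return out


class ExpressionAdapter:
    """Hypothetical adapter contributing a scaled residual."""

    def __init__(self, deltas, scale=1.0):
        self.deltas = list(deltas)
        self.scale = scale

    def __call__(self, x):
        return [self.scale * d * x for d in self.deltas]


adapter = ExpressionAdapter([0.5, -0.5])
base = BaseModel([1.0, 2.0])      # the original checkpoint
stylized = BaseModel([1.5, 2.5])  # a fine-tuned variant with the same layout

for model in (base, stylized):
    plain = model.forward(2.0)
    model.attach(adapter)
    adapted = model.forward(2.0)
    model.detach()
    assert model.forward(2.0) == plain  # detaching restores base behaviour
    print(plain, adapted)
```

Because the adapter never writes to the base weights, removing it restores the original model exactly, and any model sharing the base layout can reuse it.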

The research team also discovered that the integration of the HMReferenceModule significantly enhances fidelity during video generation. This advancement allows for the production of high-quality videos with fewer sampling steps, thereby improving generation efficiency and paving the way for real-time video creation.

Comparative Analysis

In the team's comparisons with other methods, HelloMeme's transferred expressions look more natural and stay closer to the original expressions.

Key Points

- HelloMeme achieves both smooth video and high image quality through its network structure and an optimized AnimateDiff module.

- The framework supports ARKit Face Blendshapes, allowing users to flexibly control character facial expressions and enrich video content.

- It employs a hot-swappable adapter design that keeps it compatible with other SD1.5-based models, giving creators greater flexibility.