InternLM Unveils Lightweight Multimodal AI Model with 8B Parameters

InternLM's New Multimodal AI Model Breaks Barriers in Scientific Research

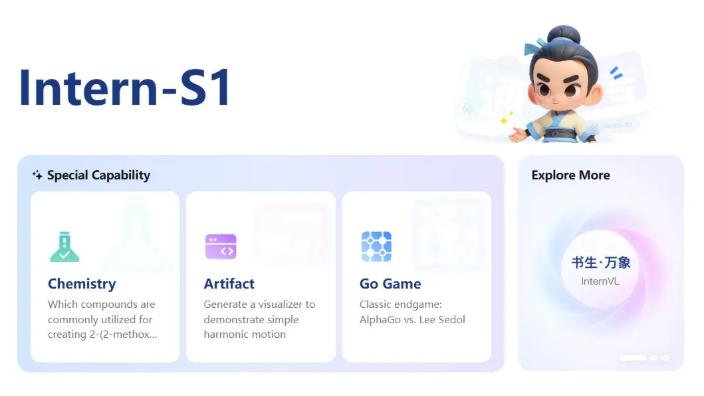

The InternLM team has officially released Intern-S1-mini, an open-source multimodal reasoning model with just 8 billion parameters. This lightweight system pairs the Qwen3-8B language model with the 0.3B-parameter InternViT visual encoder, creating a versatile tool for complex data processing.

Scientific Breakthrough Capabilities

Trained on over 5 trillion tokens of data, including 2.5 trillion tokens from specialized scientific fields, Intern-S1-mini demonstrates the following abilities:

- Interpretation of complex molecular formulas and protein sequences

- Effective synthesis path planning

- Processing of text, image, and video inputs simultaneously

The model's architecture enables seamless integration between linguistic understanding and visual processing, making it particularly valuable for interdisciplinary research applications.

Benchmark Dominance

Official test results report the following scores for Intern-S1-mini on scientific benchmarks:

- ChemBench: 76.47 score (chemistry applications)

- MatBench: 61.55 score (materials science)

- ProteinLMBench: 58.47 score (biological research)

According to the official results, the model also outperforms competitors on general evaluations including MMLU-Pro, MMMU, GPQA, and AIME2024/2025.

Unique User Experience Features

Intern-S1-mini ships with a "thinking mode" that is enabled by default. Users can turn it on or off with the enable_thinking switch, which allows for:

- Enhanced interactivity during complex problem-solving

- More transparent reasoning processes

- Flexible adaptation to different use cases

This design philosophy emphasizes both performance and user accessibility in scientific applications.
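As a rough illustration of toggling thinking mode, the sketch below builds a chat request body with the enable_thinking flag turned off. It assumes the model is served behind an OpenAI-compatible endpoint that forwards chat_template_kwargs to the model's chat template, as some inference servers do. The build_chat_request helper and the model identifier are hypothetical names chosen for this example, not part of the official release.

```python
import json


def build_chat_request(prompt, enable_thinking=True, model="internlm/Intern-S1-mini"):
    """Build a chat-completion request body (illustrative helper, not an official API).

    Assumption: the serving stack passes `chat_template_kwargs` through to the
    model's chat template, where `enable_thinking` switches thinking mode.
    """
    return {
        "model": model,  # assumed model identifier
        "messages": [{"role": "user", "content": prompt}],
        "chat_template_kwargs": {"enable_thinking": enable_thinking},
    }


if __name__ == "__main__":
    # Thinking mode is on by default; pass False to request a direct answer.
    request = build_chat_request("Describe the structure of caffeine.", enable_thinking=False)
    print(json.dumps(request, indent=2))
```

Leaving enable_thinking at its default keeps the reasoning trace in the response. How the flag is actually passed depends on the inference framework in use, so the field names above should be checked against that framework's documentation.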

Future Implications for AI Research

The release positions Intern-S1-mini as a potentially transformative tool for:

- Academic researchers needing multimodal analysis capabilities

- Pharmaceutical companies developing new compounds

- Materials scientists exploring novel substances

Its lightweight architecture makes it particularly suitable for deployment scenarios where computational resources are limited.

Key Points:

- Combines the Qwen3-8B language model with the InternViT visual encoder in a compact 8B-parameter package

- Specialized training on 2.5T scientific tokens enables breakthrough performance in chemistry, biology, and materials science

- Outperforms competitors across multiple benchmark tests including ChemBench (76.47) and ProteinLMBench (58.47)

- Features innovative "thinking mode" for enhanced interactivity and transparent reasoning

- Open-source availability accelerates research in multimodal AI applications