Hume AI Launches EVI 3: A Breakthrough in Emotion-Sensing Voice Technology

Hume AI has launched EVI 3, its third-generation voice interaction model, which the company positions as a new benchmark for emotional intelligence in AI. The system detects subtle emotional cues in human speech and tailors its responses to the individual speaker.

Experience the technology firsthand: https://demo.hume.ai/

Emotional Intelligence Meets Voice Technology

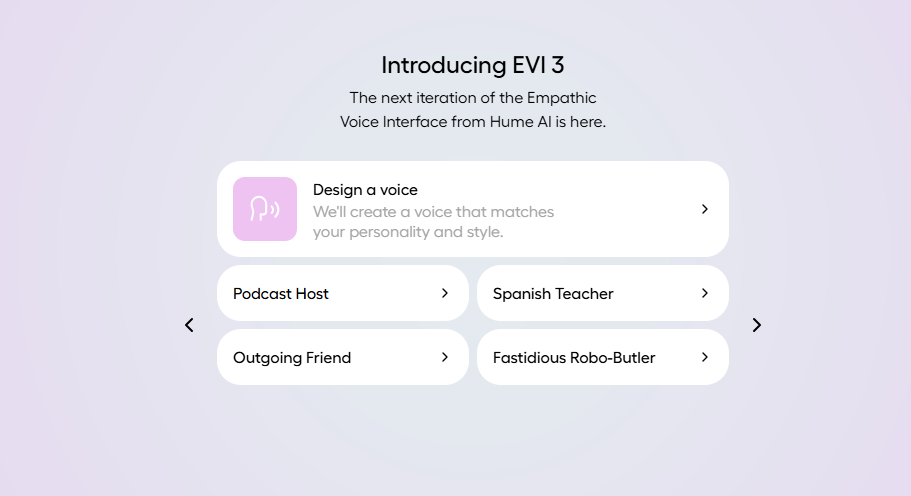

EVI 3 represents a significant leap forward in voice AI capabilities. Built on multimodal datasets, it combines speech transcription, reasoning, and synthesis into a seamless experience. The model generates unique voices and personalities in under a second based on simple text prompts, supporting more than 30 distinct vocal styles.

Ask for an "old-school comedian" or a "wise wizard" persona, and EVI 3 not only delivers the characterization but also adapts its delivery to the context of the conversation. This level of customization opens new possibilities for customer service interactions, virtual assistants, and creative content production.

Technical Superiority in Speed and Response

With inference latency as low as 300 milliseconds, EVI 3 responds faster than OpenAI's GPT-4o and Google's Gemini. In blind tests with more than 1,700 participants, EVI 3 came out ahead on seven key metrics, including emotional expression and interruption handling.

The system's real-time processing capabilities are particularly impressive. While listening to user input, EVI 3 can simultaneously retrieve information from external sources and incorporate relevant data into its responses. This end-to-end processing creates remarkably fluid conversations that feel genuinely human.
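To make the idea of overlapping listening with retrieval concrete, here is a minimal sketch in Python using `asyncio`. It is not Hume AI's implementation: the functions `transcribe`, `retrieve`, and `respond` are hypothetical stand-ins, and the delays are simulated. It only illustrates how a lookup can start before the speaker has finished, so the two waits overlap instead of adding up.

```python
import asyncio


async def transcribe(audio_chunks: list[str]) -> str:
    """Hypothetical stand-in for streaming speech recognition."""
    words = []
    for chunk in audio_chunks:
        await asyncio.sleep(0.01)  # simulate each chunk arriving over time
        words.append(chunk)
    return " ".join(words)


async def retrieve(query: str) -> str:
    """Hypothetical stand-in for an external lookup (search, database, tool)."""
    await asyncio.sleep(0.03)  # simulate network latency
    return f"facts about {query}"


async def respond(audio_chunks: list[str], early_query: str) -> str:
    # Start the lookup as a background task before listening has finished,
    # so retrieval time overlaps with listening time.
    lookup = asyncio.create_task(retrieve(early_query))
    transcript = await transcribe(audio_chunks)
    context = await lookup  # usually already done by the time we get here
    return f"heard: {transcript!r}; using: {context}"


if __name__ == "__main__":
    print(asyncio.run(respond(["what", "is", "the", "weather"], "weather")))
```

In this toy version the query is known up front; a real system would have to guess what to retrieve from a partial transcript, which is the harder part of the problem.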

Understanding the Human Touch

What truly sets EVI 3 apart is its nuanced emotional recognition. By analyzing vocal pitch, rhythm, and tone, the AI detects subtle emotional states and adjusts its responses accordingly. The system even replicates natural speech patterns like thoughtful pauses or conversational fillers ("umm"), creating interactions that feel authentic rather than robotic.
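For readers curious what "analyzing vocal pitch" can mean in practice, the sketch below estimates the pitch of a signal with autocorrelation, a classic signal-processing technique. This is an illustrative assumption, not a description of how EVI 3 works: the function names, the 80 to 400 Hz search range, and the synthetic test tone are all hypothetical choices made for the example.

```python
import math


def sine_wave(freq_hz: float, sample_rate: int, num_samples: int) -> list[float]:
    """Generate a pure tone to stand in for a voiced speech segment."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(num_samples)]


def estimate_pitch_hz(samples: list[float], sample_rate: int,
                      fmin: float = 80.0, fmax: float = 400.0) -> float:
    """Estimate the fundamental frequency via autocorrelation.

    The signal is compared with shifted copies of itself; the shift (lag)
    where the match is strongest approximates one pitch period.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    tone = sine_wave(200.0, 8000, 800)  # 0.1 s of a 200 Hz tone at 8 kHz
    print(f"estimated pitch: {estimate_pitch_hz(tone, 8000):.1f} Hz")
```

Pitch is only one of the cues named above. Rhythm and tone would need additional features, and mapping any of them to an emotional state is a learned modeling problem rather than a formula.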

Hume AI trained EVI 3 using reinforcement learning on over 100,000 voice samples. This extensive training allows the model to capture the rich complexity of human communication—something most voice assistants struggle to achieve.

Practical Applications Across Industries

The technology is currently available through Hume AI's iOS app and demo platform, with API access coming soon for developers. Potential uses span multiple sectors:

- Customer service: Agents that adapt tone based on caller emotions

- Healthcare: Empathetic coaching tools for mental wellness

- Entertainment: Dynamic character voices for games and audiobooks

- Education: Personalized language learning companions

The company plans to expand language support to include French, German, Italian, and Spanish later this year.

The Future of Emotional AI

Founded by former DeepMind researcher Alan Cowen in 2021, Hume AI specializes in emotion-centric artificial intelligence. EVI 3 represents a major milestone toward its vision of making voice interaction the primary interface between humans and machines.

While tech giants focus on general intelligence improvements, Hume AI prioritizes emotional resonance in human-AI communication. Its approach also lowers the barrier to voice customization: users can create distinctive AI personalities without technical expertise.

The introduction of EVI 3 signals a shift from purely functional voice assistants toward digital companions that aim to understand their users. As the technology evolves, it may fundamentally change how we interact with machines in our daily lives.

Key Points

- EVI 3 generates personalized voices and emotional responses faster than leading competitors

- The model demonstrates superior performance across seven key metrics including naturalness and interruption handling

- Advanced emotion detection allows for nuanced, human-like conversations

- Applications range from customer service to creative content production

- Hume AI plans multilingual expansion later this year