Hugging Face Launches SmolLM3: A Compact AI Powerhouse

July 9, 2025 - Hugging Face has officially released SmolLM3, its latest open-source language model, which combines long-context processing, multilingual support, and switchable reasoning modes in a compact 3-billion-parameter package.

Performance Beyond Its Size

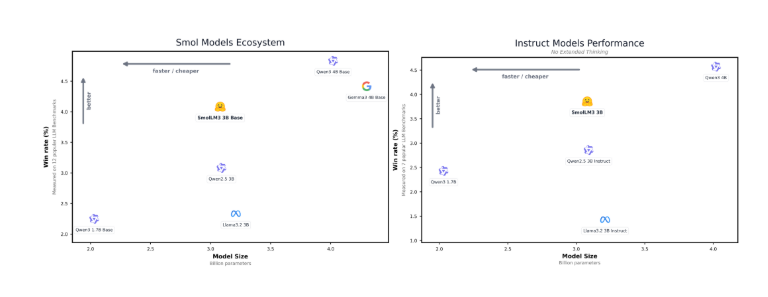

The new model outperforms similar-sized open-source alternatives such as Llama-3.2-3B and Qwen2.5-3B while supporting a 128K-token context window. This long context allows more coherent processing of long-form text across multiple languages, including English, French, Spanish, and German.

Innovative Dual-Mode Architecture

SmolLM3 introduces a novel dual reasoning system:

- Deep Thinking Mode: For complex analytical tasks requiring intensive computation

- Standard Mode: For faster responses when depth isn't critical

This flexible architecture enables users to optimize performance based on specific application requirements.
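As a rough illustration of how an application might choose between the two modes per request, the sketch below builds a chat-style message list with a mode flag in the system turn. The flag strings `/think` and `/no_think` and the `build_messages` helper are assumptions made for this example; the article does not say how the mode is selected, so check the model card for the actual mechanism.

```python
from typing import Dict, List

# Assumed flag strings; the article does not specify how a mode is selected.
MODE_FLAGS = {"deep": "/think", "standard": "/no_think"}


def build_messages(prompt: str, mode: str = "standard") -> List[Dict[str, str]]:
    """Return a chat-style message list with a reasoning-mode flag in the system turn."""
    if mode not in MODE_FLAGS:
        raise ValueError(f"unknown mode: {mode!r}")
    return [
        {"role": "system", "content": MODE_FLAGS[mode]},
        {"role": "user", "content": prompt},
    ]


if __name__ == "__main__":
    # Build one request per mode to compare the resulting message lists.
    for mode in ("deep", "standard"):
        print(mode, build_messages("Summarize this contract.", mode))
```

Keeping the switch in a single helper like this means an application can route latency-sensitive requests to the standard mode and reserve the deep mode for harder analytical tasks.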

Open Development Approach

In keeping with Hugging Face's commitment to open AI development, the company has released:

- Full architectural specifications

- Data mixing methodologies

- Detailed training processes

The model employs an advanced transformer decoder architecture, building upon SmolLM2's design while incorporating key Llama improvements. Technical enhancements include:

- Grouped-query attention (GQA)

- Document-level masking techniques

- Optimized long-context training protocols
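The first two techniques in the list above can be sketched in a few lines. This is a minimal illustration of the general ideas, not SmolLM3's implementation: the function names and the head counts in the example are hypothetical. Document-level masking keeps tokens from attending across document boundaries when several documents are packed into one training sequence, and grouped-query attention lets several query heads share one key/value head.

```python
from typing import List


def document_causal_mask(doc_ids: List[int]) -> List[List[bool]]:
    """Build a causal mask restricted to tokens of the same document.

    mask[i][j] is True when token i may attend to token j, i.e. when j is
    not in the future of i and both tokens belong to the same document.
    """
    n = len(doc_ids)
    return [
        [j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
        for i in range(n)
    ]


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the key/value head it shares under grouped-query attention."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    if not 0 <= q_head < n_q_heads:
        raise ValueError("q_head out of range")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


if __name__ == "__main__":
    # Two packed documents: tokens 0-2 belong to document 0, tokens 3-4 to document 1.
    mask = document_causal_mask([0, 0, 0, 1, 1])
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))

    # Hypothetical head counts: 8 query heads sharing 2 key/value heads.
    print([kv_head_for_query_head(h, 8, 2) for h in range(8)])
```

Sharing key/value heads shrinks the key/value cache, which matters most at long context lengths.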

Training Process & Specifications

The model was trained over 24 days using distributed computing with the following configuration:

| Setting | Value |
|---------|-------|
| Layers | 36 |
| Optimizer | AdamW |
| Parameter count | 3.08B |

The three-phase training regimen strategically combined:

- General capability building with web, math, and code data

- Enhanced quality focus with specialized math/code datasets

- Advanced sampling for reasoning optimization
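A staged regimen like the one above is often expressed as a per-phase data mixture from which training examples are sampled. The sketch below shows that idea only; the phase names, the mixture weights, and the `sample_sources` helper are hypothetical placeholders, since the article gives no proportions.

```python
import random
from typing import Dict, List

# Hypothetical mixture weights per phase; the article gives no proportions.
PHASES: Dict[str, Dict[str, float]] = {
    "phase_1": {"web": 0.80, "code": 0.10, "math": 0.10},
    "phase_2": {"web": 0.60, "code": 0.20, "math": 0.20},
    "phase_3": {"web": 0.50, "code": 0.25, "math": 0.25},
}


def sample_sources(weights: Dict[str, float], n: int, seed: int = 0) -> List[str]:
    """Draw n data-source names in proportion to the given mixture weights."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


if __name__ == "__main__":
    for phase, weights in PHASES.items():
        draws = sample_sources(weights, n=1000)
        counts = {name: draws.count(name) for name in weights}
        print(phase, counts)
```

Shifting weight from web text toward math and code in later phases is one way to express the "enhanced quality focus" described above.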

Availability & Future Potential

The base model and instruction-tuned variants are now available on Hugging Face's platform.

Industry analysts predict this release will accelerate development of efficient AI applications across sectors from education to enterprise solutions.

Key Points:

- Compact Power: 3B parameters with performance surpassing similar-sized open models such as Llama-3.2-3B and Qwen2.5-3B

- Extended Context: 128K token processing capacity

- Dual Modes: Switchable reasoning approaches for different needs

- Full Transparency: Open architecture promotes community innovation

- Multilingual Support: Covers multiple languages, including English, French, Spanish, and German