Hong Kong PolyU and OPPO Open-Source DLoRAL for Video HD Breakthrough

Hong Kong PolyU and OPPO Revolutionize Video Super-Resolution with DLoRAL

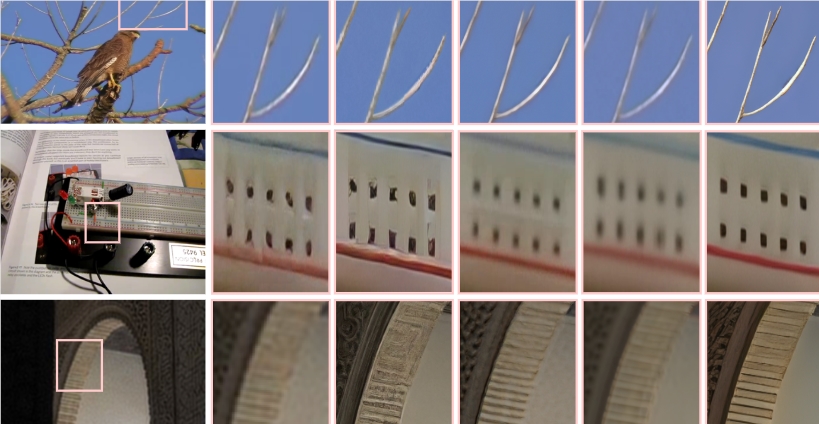

AI-driven image upscaling has become commonplace, but producing high-definition video that also stays consistent from frame to frame has remained a hard, largely unsolved problem. The Hong Kong Polytechnic University and OPPO Research Institute have jointly developed DLoRAL (Dual LoRA Learning). DLoRAL is an open-source framework that its authors present as a new benchmark for RealVSR (real-world video super-resolution).

Dual LoRA Architecture: A Technical Marvel

At its core, DLoRAL builds on a pre-trained diffusion model, Stable Diffusion V2.1. It adds two specialized LoRA (low-rank adaptation) modules:

- CLoRA (consistency LoRA): Improves temporal consistency across frames, reducing flicker and sudden jumps.

- DLoRA (detail LoRA): Enhances spatial detail, producing sharper, high-definition frames.

This dual-module design separates temporal optimization from spatial optimization. Both modules are lightweight adapters embedded in the diffusion model, so they add little computational overhead.
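The following sketch shows how two LoRA branches can share one frozen layer. It assumes the standard LoRA formulation, in which each branch adds a low-rank product B·A to a frozen weight W. It is plain Python rather than DLoRAL's code. The function names (`dual_lora_weight`, `lora_param_count`) and the tiny matrices are hypothetical.

```python
# Minimal sketch: one frozen weight plus two additive LoRA branches.
# Assumption: generic LoRA math W + alpha*(B @ A); not DLoRAL's actual code.
# All function names here are hypothetical.


def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add(x, y):
    """Element-wise sum of two equally shaped matrices."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


def scale(x, s):
    """Multiply every entry of a matrix by s."""
    return [[s * a for a in row] for row in x]


def dual_lora_weight(w, c_down, c_up, d_down, d_up, alpha=1.0):
    """Effective weight W + alpha*(B_c A_c) + alpha*(B_d A_d).

    w:      frozen base weight, shape (out, in)
    c_down: consistency branch A_c, shape (r, in); c_up: B_c, shape (out, r)
    d_down: detail branch A_d, shape (r, in);      d_up: B_d, shape (out, r)
    """
    delta_c = scale(matmul(c_up, c_down), alpha)  # low-rank consistency update
    delta_d = scale(matmul(d_up, d_down), alpha)  # low-rank detail update
    return add(add(w, delta_c), delta_d)


def lora_param_count(out_dim, in_dim, rank):
    """Return (trainable params in one LoRA branch, params in the full matrix)."""
    return rank * (in_dim + out_dim), out_dim * in_dim
```

Take W as the 2x2 identity and let each branch add a rank-1 update to one diagonal entry. In that case `dual_lora_weight` returns `[[2.0, 0.0], [0.0, 2.0]]`. `lora_param_count(1024, 1024, 4)` gives `(8192, 1048576)`, so a rank-4 branch trains under 1% of the parameters of the full matrix. Because each update is additive, it can be folded into W before inference.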

Two-Stage Training: Precision and Efficiency

The framework employs a novel two-stage training strategy:

- Consistency Stage: Trains CLoRA together with a Cross-Frame Retrieval (CFR) module to optimize coherence between frames.

- Enhancement Stage: Freezes CLoRA and CFR, then trains DLoRA with classifier score distillation (CSD) to produce crisper details.
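A toy example can illustrate the freezing logic of the two-stage schedule. This is a hypothetical sketch, not DLoRAL's training code. Each LoRA branch is reduced to a single scalar and CFR is omitted. Two made-up quadratic losses stand in for the consistency objective and the CSD-based detail objective.

```python
# Toy sketch of a two-stage schedule with parameter freezing.
# Assumptions: each LoRA branch is one scalar, CFR is omitted, and both
# quadratic losses are hypothetical stand-ins for the real objectives.


def consistency_grad(params):
    # Gradient of the stand-in consistency loss (c - 1)^2 w.r.t. c.
    return {"c_lora": 2 * (params["c_lora"] - 1.0)}


def detail_grad(params):
    # Gradient of the stand-in detail loss (c + d - 3)^2 w.r.t. both branches;
    # run_stage decides which entries are actually applied.
    g = 2 * (params["c_lora"] + params["d_lora"] - 3.0)
    return {"c_lora": g, "d_lora": g}


def run_stage(params, grad_fn, trainable, steps=200, lr=0.1):
    """Plain gradient descent that updates only the names in `trainable`."""
    for _ in range(steps):
        grads = grad_fn(params)
        for name in trainable:
            params[name] -= lr * grads[name]
    return params


params = {"c_lora": 0.0, "d_lora": 0.0}

# Stage 1 (consistency): only the consistency branch is trainable.
run_stage(params, consistency_grad, trainable=["c_lora"])
c_after_stage1 = params["c_lora"]

# Stage 2 (enhancement): consistency branch frozen, detail branch trained.
run_stage(params, detail_grad, trainable=["d_lora"])
```

After stage 1, `c_lora` converges to about 1.0. In stage 2 the detail gradient is computed for both branches but applied only to `d_lora`. As a result, `c_lora` stays exactly where stage 1 left it, while `d_lora` converges to about 2.0.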

At inference time, the authors report roughly 10x faster processing than traditional multi-step diffusion methods.

Open-Source Impact

DLoRAL was released on GitHub on June 24, 2025, with code, training data, and pre-trained models. The authors report superior performance on metrics such as PSNR and LPIPS. The model still struggles to restore fine text, a limitation attributed to the 8x spatial downsampling of Stable Diffusion's VAE.
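Simple arithmetic explains the text limitation. An 8x downsampling VAE maps each 8x8 pixel patch to roughly one latent position, so small glyphs get very few latent rows. The sketch below computes this. The 1080p frame size and 12-pixel glyph height are illustrative assumptions, and the helper names are hypothetical.

```python
# Back-of-the-envelope sketch of latent resolution under an 8x VAE.
# Assumptions: illustrative frame and glyph sizes; helper names are hypothetical.

VAE_DOWNSAMPLE = 8  # spatial downsampling factor of Stable Diffusion's VAE


def latent_shape(height_px, width_px, factor=VAE_DOWNSAMPLE):
    """Return (rows, cols) of the latent grid for a frame."""
    if height_px % factor or width_px % factor:
        raise ValueError("frame size must be a multiple of the downsampling factor")
    return height_px // factor, width_px // factor


def latent_rows_per_glyph(glyph_height_px, factor=VAE_DOWNSAMPLE):
    """Approximate number of latent rows covering one character."""
    return glyph_height_px / factor


print(latent_shape(1080, 1920))   # (135, 240)
print(latent_rows_per_glyph(12))  # 1.5
```

A 12-pixel-tall subtitle character therefore spans only about 1.5 latent rows, which leaves little room to encode individual strokes.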

Key Points

- Innovation: A dual LoRA architecture balances temporal consistency and spatial detail.

- Speed: Reported inference is about 10x faster than conventional multi-step methods.

- Accessibility: Fully open-sourced to accelerate academic and industrial adoption.

- Limitations: Small text restoration requires future refinement.