Google Unveils BlenderFusion for Advanced 3D Editing

Google Launches BlenderFusion: A Game-Changer for 3D Visual Editing

Google has unveiled BlenderFusion, a framework for 3D-grounded visual editing and generative compositing. It addresses the growing demand for precise control in image generation, particularly in complex scenes with multiple objects, where traditional methods such as generative adversarial networks (GANs) and diffusion models often fall short.

How BlenderFusion Works

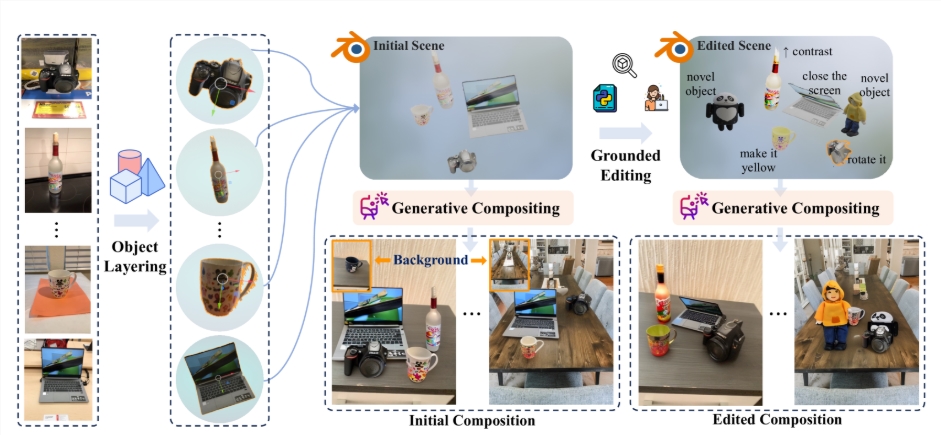

The framework operates through a streamlined three-stage workflow:

- Layering: BlenderFusion extracts editable 3D objects from 2D input images. SAM2 segments each object and DepthPro estimates per-pixel depth; combining the two lifts each object into a 3D point cloud that can be edited in the next stage.

- Editing: The lifted objects are imported into Blender, where users can move, rotate, and scale them and adjust their appearance and materials. This gives fine-grained control over scene composition.

- Compositing: A generative compositor merges the edited 3D scene with the background. Google adapted an existing diffusion model for this role so that edits blend coherently with the source scene.
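The geometric side of the Layering and Editing stages can be illustrated with a small sketch. It assumes a per-object segmentation mask (the kind of output SAM2 produces), a per-pixel depth map (the kind DepthPro estimates), and a simple pinhole camera with known intrinsics. Masked pixels are back-projected into a 3D point cloud, transformed, and projected back into the image. The names `Intrinsics`, `lift_to_point_cloud`, `edit_object`, and `project` are hypothetical, and the camera model is an assumption. This is not BlenderFusion's code; in the actual pipeline the editing happens in Blender.

```python
import math
from dataclasses import dataclass

# A 3D point in camera space: x right, y down, z forward (OpenCV-style convention).
Point3 = tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole-camera intrinsics, in pixels (an assumption, not BlenderFusion's API)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def lift_to_point_cloud(depth: list[list[float]], mask: list[list[bool]],
                        cam: Intrinsics) -> list[Point3]:
    """Back-project every masked pixel (u, v) with positive depth z into 3D."""
    points: list[Point3] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, inside) in enumerate(zip(depth_row, mask_row)):
            if inside and z > 0:
                points.append(((u - cam.cx) * z / cam.fx, (v - cam.cy) * z / cam.fy, z))
    return points


def edit_object(points: list[Point3], yaw_deg: float = 0.0, scale: float = 1.0,
                translation: Point3 = (0.0, 0.0, 0.0)) -> list[Point3]:
    """Scale and rotate the object about its centroid, then translate it.

    yaw_deg rotates about the camera's y axis, like turning an object on a turntable.
    """
    if not points:
        return []
    n = len(points)
    mx, my, mz = (sum(p[i] for p in points) / n for i in range(3))
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    edited: list[Point3] = []
    for x, y, z in points:
        # Offsets from the centroid, scaled uniformly.
        dx, dy, dz = (x - mx) * scale, (y - my) * scale, (z - mz) * scale
        # Rotation about y: x' = c*dx + s*dz, z' = -s*dx + c*dz; y is unchanged.
        edited.append((c * dx + s * dz + mx + tx,
                       dy + my + ty,
                       -s * dx + c * dz + mz + tz))
    return edited


def project(points: list[Point3], cam: Intrinsics) -> list[tuple[float, float]]:
    """Project 3D points back to pixel coordinates, skipping points behind the camera."""
    return [(cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy)
            for x, y, z in points if z > 0]


if __name__ == "__main__":
    # Toy 2x2 image in which two pixels belong to the object.
    cam = Intrinsics(fx=100.0, fy=100.0, cx=1.0, cy=1.0)
    depth = [[2.0, 2.0], [2.0, 4.0]]
    mask = [[True, False], [False, True]]
    cloud = lift_to_point_cloud(depth, mask, cam)
    moved = edit_object(cloud, yaw_deg=30.0, translation=(0.0, 0.0, 1.0))
    print(project(moved, cam))
```

Running the script prints the new pixel positions of the two object pixels after a 30° turn and a push away from the camera. The sketch ignores occlusion, lighting, and the background regions an edited object uncovers; in BlenderFusion, integrating the edit with the source scene is the job of the generative compositor.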

Implications for Creators

BlenderFusion aims to simplify complex visual editing for designers and creators. By letting users edit objects directly in 3D and leaving the final blending to the compositor, the workflow lowers the technical barriers usually associated with 3D editing and makes it accessible to a broader audience. Its handling of complex, multi-object scenes could benefit industries such as gaming, film production, and virtual reality.

The project is available at blenderfusion.github.io.

Key Points:

- 🌟 Integration of 3D tools: Combines Blender-based 3D editing with a diffusion-based compositor for efficient scene manipulation.

- 🛠️ Three-stage workflow: Layering, editing, and compositing streamline the creative process.

- 📈 Enhanced precision: 3D-grounded editing and an adapted diffusion compositor improve control over complex scenes.