Google Photos Introduces AI Image Verification Tool

Google Photos Rolls Out Feature to Detect AI-Generated Images

As artificial intelligence grows more sophisticated, distinguishing authentic photos from manipulated or AI-generated content has become harder. In response, Google Photos is introducing a feature called "How Was This Made" that tells users how an image was created and edited.

Addressing the Deepfake Dilemma

The rapid advancement of AI image generation tools has made it easy for manipulated photos and videos to spread misinformation. Deepfake technology, while impressive, poses significant risks of fraud and deception. Google's new feature aims to counter these risks by making the origin and editing history of digital media visible to users.

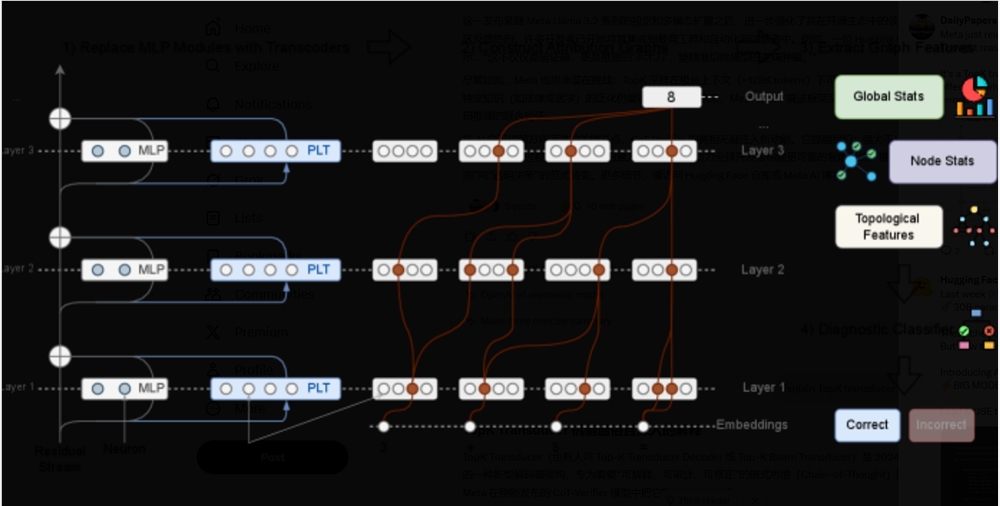

How the Feature Works

The tool, found in version 7.41 of the Google Photos APK, will display creation details in the media information section. It relies on Content Credentials, an emerging industry standard that embeds creation and editing history directly in image metadata. This allows users to see at a glance whether content was:

- Naturally captured by a camera

- Edited using software tools

- Completely generated by AI algorithms

The system will also flag media with missing or altered metadata, providing additional safeguards against manipulated content.
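The labeling logic described above can be sketched in a few lines of Python. This is only an illustration, not Google's implementation: the `credentials` dictionary and its keys (`signature_valid`, `source`, `edits`) are hypothetical stand-ins for already-parsed metadata and do not reflect the actual Content Credentials schema.

```python
from enum import Enum
from typing import Optional


class Origin(Enum):
    CAMERA = "Captured with a camera"
    EDITED = "Edited with software tools"
    AI_GENERATED = "Generated with AI"
    UNKNOWN = "Missing or altered metadata"


def classify_origin(credentials: Optional[dict]) -> Origin:
    """Map simplified, hypothetical provenance metadata to a display label."""
    # No metadata at all, or metadata that failed an integrity check,
    # is flagged rather than trusted.
    if not credentials or not credentials.get("signature_valid", False):
        return Origin.UNKNOWN

    source = credentials.get("source")
    if source == "ai":
        return Origin.AI_GENERATED
    if source == "camera":
        # A camera capture with recorded edit steps counts as edited.
        return Origin.EDITED if credentials.get("edits") else Origin.CAMERA

    # Any source value this sketch does not recognize is also flagged.
    return Origin.UNKNOWN


if __name__ == "__main__":
    photo = {"signature_valid": True, "source": "camera", "edits": ["crop"]}
    print(classify_origin(photo).value)
    print(classify_origin(None).value)
```

The key design choice is that the function fails closed: an image is labeled as camera-captured only when intact metadata says so, and everything else falls back to the "missing or altered" flag.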

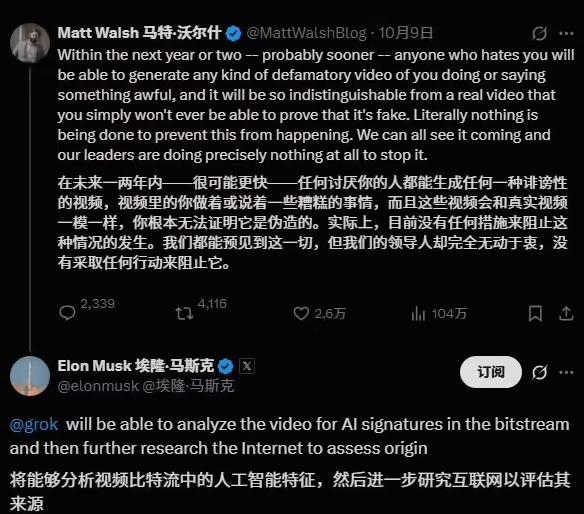

Industry-Wide Implications

This development comes as major tech companies grapple with the ethical implications of AI-generated media. Google's approach mirrors similar initiatives from Adobe, Nikon, and Leica, suggesting a growing industry consensus on the need for digital transparency standards.

The timing is particularly relevant as Google's own Magic Eraser and Reimagine tools demonstrate how easily images can be significantly altered with AI assistance. Without proper disclosure, such modifications could be used to mislead viewers.

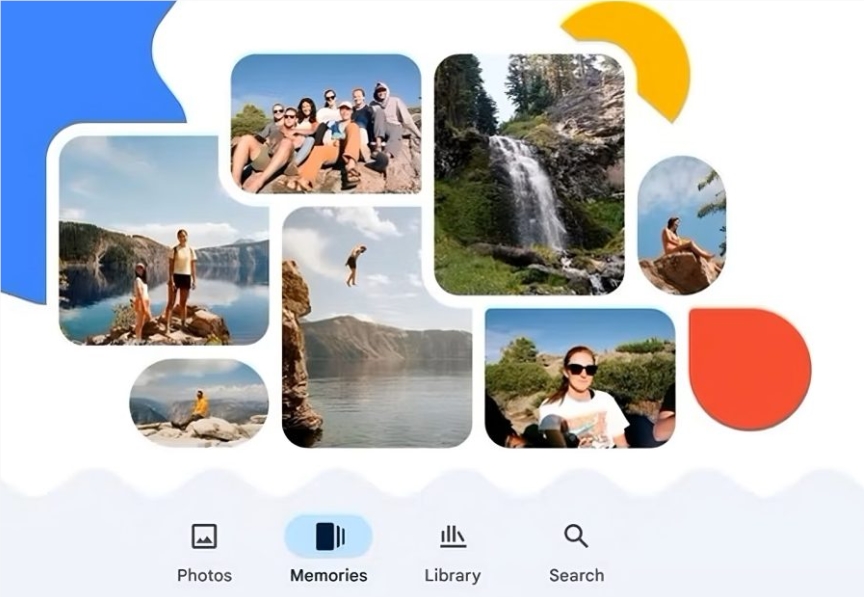

Building Trust in Digital Media

A Google spokesperson emphasized that the feature represents more than just technical innovation: "We're addressing a fundamental trust gap between users and AI technology. In today's media landscape, people deserve to know whether what they're seeing reflects reality or artificial creation."

The company hopes this transparency initiative will:

- Empower users to make informed judgments about visual content

- Discourage malicious use of image manipulation tools

- Establish best practices for responsible AI development

Future Challenges and Adoption

While the feature is promising, its success depends on widespread adoption across platforms and devices. Industry analysts note that unless the standard is adopted universally, determined bad actors may still circumvent it, for example by stripping or altering metadata on platforms that do not check it.

The feature is expected to roll out globally in the coming months as part of regular Google Photos updates.

Key Points:

- 🔍 New "How Was This Made" feature reveals image origins in Google Photos

- 🛡️ Uses Content Credentials metadata standard for editing history

- 🤖 Clearly labels AI-generated versus authentic content

- 🌐 Part of broader industry push for digital transparency

- ⚠️ Flags images with missing or suspicious metadata