Google Introduces LMEval to Standardize AI Model Evaluations

Google has unveiled LMEval, a groundbreaking open-source framework aimed at simplifying and standardizing the evaluation process for large language and multimodal AI models. This innovative tool promises to revolutionize how researchers and developers assess cutting-edge AI systems from various providers.

For years, comparing different AI models proved challenging due to inconsistent evaluation methods. Each company used proprietary APIs, unique data formats, and customized benchmark settings. This fragmentation made objective comparisons difficult and often required significant effort to adapt tests across platforms. LMEval eliminates these hurdles by establishing a unified evaluation standard.

The framework's versatility stands out—it supports not just text analysis but also extends to image interpretation and code generation assessments. Google emphasizes the system's flexibility: users can easily incorporate new input formats as needed. The platform handles diverse evaluation types ranging from true/false questions to complex free-text generation tasks.

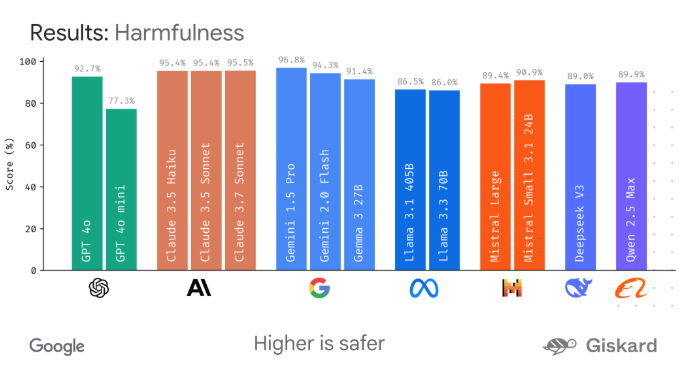

One particularly valuable feature is LMEval's ability to detect "evasive strategies"—instances where models deliberately provide vague responses to avoid controversial or risky content. This capability gives researchers deeper insight into model behavior beyond simple accuracy metrics.

Built on the LiteLLM framework, LMEval seamlessly integrates with major AI providers including Google's own systems, OpenAI, Anthropic, Ollama, and Hugging Face. The platform's architecture smooths over API differences between these services, allowing identical tests to run across multiple platforms without code modifications.

Efficiency takes center stage in LMEval's design. The system employs incremental evaluation—users can run new tests without repeating entire benchmark suites—and leverages multithreaded processing for faster computations. These features dramatically reduce both time requirements and computational costs during evaluations.

Complementing the core framework is LMEvalboard, Google's visualization tool for analyzing test results. This component generates intuitive radar charts that display model performance across various categories while allowing detailed examination of individual question responses. Side-by-side comparisons make differences between models immediately apparent.

The entire project is available as open-source software on GitHub, complete with example notebooks to help developers get started quickly.

Key Points

- Google's LMEval provides standardized evaluation for diverse AI models including GPT-4o and Gemini2.0Flash

- Supports multimodal assessments (text, images, code) with flexible input format additions

- Detects model evasion tactics through sophisticated response analysis

- Runs efficiently via incremental testing and parallel processing capabilities

- Includes visualization tools for comprehensive performance comparisons