Google DeepMind Unveils InfAlign Framework for Language Models

Google DeepMind has introduced InfAlign, a machine learning framework that aligns generative language models with the decoding procedure they will use at inference time. The framework targets a gap between training and practical application: models are typically aligned without regard to how their outputs will be sampled and selected once deployed.

Challenges in Generative Language Models

Generative language models often fall short of their best performance after training. One key issue lies in the inference phase, where models must produce reliable outputs. Conventional methods, such as reinforcement learning from human feedback (RLHF), focus on improving the model's overall success rate, usually measured as a win rate against a baseline. However, they do not account for the decoding strategies applied at deployment, including Best-of-N sampling and controlled decoding. This disconnect between the training objective and actual deployment can lower the quality of generated outputs.

Introducing InfAlign

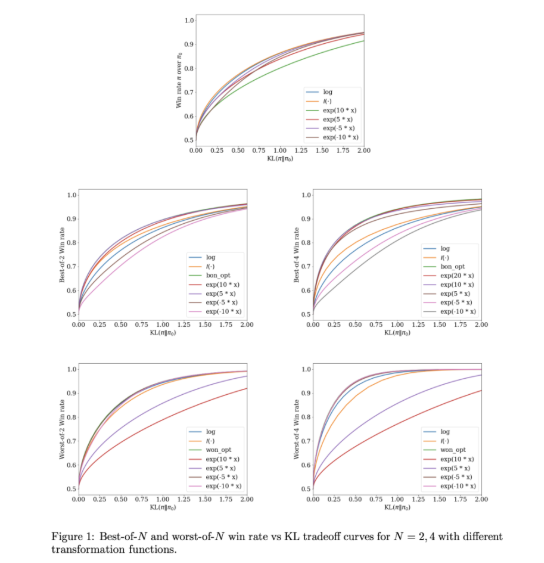

To tackle these challenges, Google DeepMind, in collaboration with Google Research, has developed InfAlign. The framework integrates inference strategies into the alignment process, aiming to bridge the gap between training and application. InfAlign modifies the reward function according to the inference strategy in use, through a calibrated reinforcement learning approach. This is particularly relevant for Best-of-N sampling, which generates multiple responses and selects the highest-scoring one, and Worst-of-N sampling, which selects the lowest-scoring one and is commonly used for safety evaluations. The goal is for aligned models to perform well both in controlled environments and in real-world scenarios.
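For readers unfamiliar with the two selection rules named above, here is a minimal Python sketch. The generator and reward function (`toy_generate`, `toy_reward`) are toy stand-ins invented for illustration and are not part of InfAlign; a real system would call a language model and a learned reward model instead.

```python
import random
from typing import Callable, List


def sample_n(generate: Callable[[], str], n: int) -> List[str]:
    """Draw n candidate responses from a generator."""
    return [generate() for _ in range(n)]


def best_of_n(candidates: List[str], reward: Callable[[str], float]) -> str:
    """Best-of-N: keep the candidate with the highest reward."""
    return max(candidates, key=reward)


def worst_of_n(candidates: List[str], reward: Callable[[str], float]) -> str:
    """Worst-of-N: keep the candidate with the lowest reward."""
    return min(candidates, key=reward)


# Hypothetical stand-ins for a language model and a reward model.
rng = random.Random(0)
responses = ["ok", "a longer answer", "the most detailed answer here"]


def toy_generate() -> str:
    return rng.choice(responses)


def toy_reward(text: str) -> float:
    # Assumption for illustration only: longer responses score higher.
    return float(len(text))


candidates = sample_n(toy_generate, 4)
print("best :", best_of_n(candidates, toy_reward))
print("worst:", worst_of_n(candidates, toy_reward))
```

Both rules share the same sampling step and differ only in which end of the reward ranking they keep, which is why a single reward adjustment scheme can be tailored to either.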

The CTRL Algorithm

At the heart of InfAlign lies the Calibrate-and-Transform Reinforcement Learning (CTRL) algorithm. The algorithm operates in three key steps:

- Calibrating reward scores

- Transforming these scores according to the chosen inference strategy

- Solving a KL-regularized optimization problem

By tailoring the reward transformation to the inference strategy, InfAlign aligns training goals with inference requirements. The approach is designed to raise the success rate at inference time while remaining computationally efficient. It is also intended to make language models more robust across decoding strategies, so that output quality stays consistent.
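The three steps can be sketched on a toy problem with a finite set of responses. This is an illustration under stated assumptions, not the authors' implementation: calibration is taken to be the empirical quantile of a reward among base-model rewards, the transform is an exponential chosen for illustration, and the last step uses the standard closed-form solution of KL-regularized reward maximization, in which the new policy is proportional to the base policy times exp(reward / beta). All function names, the parameters `t` and `beta`, and the numbers are hypothetical.

```python
import math
from bisect import bisect_right
from typing import Dict, List


def calibrate(raw: float, base_rewards: List[float]) -> float:
    """Step 1: map a raw reward to its empirical quantile in [0, 1]
    among rewards of responses from the base model."""
    ordered = sorted(base_rewards)
    return bisect_right(ordered, raw) / len(ordered)


def transform(calibrated: float, t: float) -> float:
    """Step 2: illustrative exponential transform, sign(t) * exp(t * c).
    It is increasing in c for any nonzero t; t > 0 stretches the top
    quantiles, t < 0 stretches the bottom ones."""
    return math.copysign(1.0, t) * math.exp(t * calibrated)


def kl_regularized_policy(
    base_probs: Dict[str, float], rewards: Dict[str, float], beta: float
) -> Dict[str, float]:
    """Step 3: closed-form maximizer of
    E_pi[reward] - beta * KL(pi || base) over a finite response set."""
    weights = {y: p * math.exp(rewards[y] / beta) for y, p in base_probs.items()}
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}


# Toy base policy and raw reward-model scores (made-up numbers).
base_probs = {"a": 0.5, "b": 0.3, "c": 0.2}
raw_rewards = {"a": -1.0, "b": 0.5, "c": 3.0}
base_reward_samples = list(raw_rewards.values())

transformed = {
    y: transform(calibrate(r, base_reward_samples), t=2.0)
    for y, r in raw_rewards.items()
}
policy = kl_regularized_policy(base_probs, transformed, beta=1.0)
print(policy)
```

In practice the third step would be carried out with a reinforcement learning procedure over a neural policy rather than a closed form; the toy version only shows how calibrated, transformed rewards shift probability toward higher-ranked responses.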

Experimental Validation

InfAlign's effectiveness has been validated through experiments on Anthropic's helpfulness and harmlessness datasets. Results show that InfAlign improves the inference-time win rate (the success rate measured after the decoding strategy is applied) by 8%-12% for Best-of-N sampling and by 4%-9% for Worst-of-N safety evaluations. These gains are attributed to the calibrated reward transformations, which address miscalibration in reward models and support consistent performance across inference scenarios.
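To make the metric concrete, the sketch below estimates a success rate after a selection rule has been applied to both the aligned model and the base model. The exact evaluation protocol is not described in this article, so the details are assumptions: each trial compares the selected response from one group of aligned-model samples against the selected response from one group of base-model samples, and ties count as half a win. The function name and the scores are hypothetical.

```python
from typing import Callable, Iterable, List, Sequence


def inference_time_win_rate(
    policy_groups: Sequence[List[float]],
    base_groups: Sequence[List[float]],
    pick: Callable[[Iterable[float]], float] = max,
) -> float:
    """Fraction of trials in which the aligned model's selected score
    beats the base model's selected score (ties count as 0.5).

    Each group holds the reward scores of N sampled responses.
    pick=max models Best-of-N; pick=min models Worst-of-N.
    """
    wins = 0.0
    for p_group, b_group in zip(policy_groups, base_groups):
        p_score, b_score = pick(p_group), pick(b_group)
        if p_score > b_score:
            wins += 1.0
        elif p_score == b_score:
            wins += 0.5
    return wins / len(policy_groups)


# Made-up reward scores for three prompts, N = 2 samples each.
policy_groups = [[0.2, 0.9], [0.4, 0.5], [0.1, 0.3]]
base_groups = [[0.6, 0.7], [0.5, 0.1], [0.8, 0.2]]

bon_rate = inference_time_win_rate(policy_groups, base_groups, pick=max)
won_rate = inference_time_win_rate(policy_groups, base_groups, pick=min)
print(bon_rate, won_rate)
```

The same samples can give different rates under the two rules, which illustrates why a model tuned for one decoding strategy is not automatically well suited to another.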

Conclusion

InfAlign marks a significant advancement in the alignment of generative language models. By integrating inference-aware strategies, the framework addresses critical disparities between training and deployment phases. Its solid theoretical underpinnings and empirical results underscore its potential to comprehensively enhance the alignment of AI systems.

For further information, see the InfAlign paper on arXiv.

Key Points

- InfAlign is a new framework developed by Google DeepMind aimed at enhancing the performance of language models during the inference phase.

- This framework aligns training objectives with inference needs by adjusting reward functions for inference strategies through calibrated reinforcement learning methods.

- Experimental results indicate that InfAlign improves the inference success rate by 8%-12% with Best-of-N sampling and by 4%-9% in Worst-of-N safety evaluations.