DeepSeek AI Model Makes Nature Cover, First LLM to Pass Peer Review

DeepSeek R1 AI Model Achieves Historic Milestone with Nature Publication

In a landmark moment for artificial intelligence research, DeepSeek's paper on its R1 large language model (LLM) has been featured on the cover of Nature, making R1 the first LLM whose research has passed the journal's rigorous peer-review process. The September 18 publication marks a significant validation of AI research methodology by mainstream science.

A New Standard for AI Research Transparency

The Nature editorial board emphasized that DeepSeek's approach offers a crucial framework for an industry often criticized for hype and unverified claims. By subjecting its work to independent academic scrutiny, DeepSeek has demonstrated how peer review can improve reproducibility and reduce potential social risks from premature deployment of unvetted AI technologies.

"In an era of rapid but sometimes questionable AI advancements," noted the editors, "this rigorous validation process should serve as a model for responsible development."

Breakthrough in Autonomous Reasoning

The published research details DeepSeek R1's innovative training methodology that differs fundamentally from conventional approaches:

- Eliminates dependency on human-annotated training data

- Uses reinforcement learning (RL) for autonomous skill development

- Raised accuracy on the AIME 2024 math competition benchmark to 71%, up from 15.6%

- Matches performance levels of leading models like OpenAI's systems

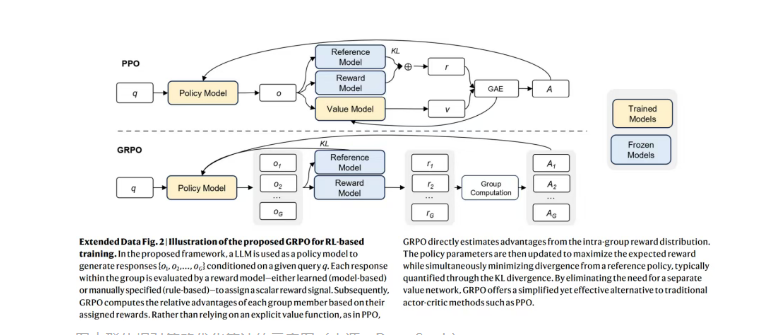

The model's success stems from its Group Relative Policy Optimization algorithm, which enables more sophisticated reasoning capabilities without direct human supervision.

Schematic diagram of the group relative policy optimization algorithm (source: DeepSeek)
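To make the group-relative idea concrete, the following is a minimal sketch of how advantages can be computed in Group Relative Policy Optimization: several answers are sampled for the same prompt, each is scored, and every score is normalized against the mean and standard deviation of its own group, so no separate learned value model is needed. The function name, the reward values, and the choice of population standard deviation are illustrative assumptions, not details taken from DeepSeek's code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the statistics of its own group.

    rewards: scores for several sampled answers to the SAME prompt.
    eps: small constant (an assumption) to avoid division by zero
         when every answer in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; a sample std is also plausible
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers (1.0 = correct, 0.0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)  # correct answers get positive values, wrong ones negative
```

Answers that score above their group's average receive a positive advantage and are reinforced, while below-average answers are discouraged. When all answers in a group score the same, every advantage is zero and that group contributes no learning signal.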

Rigorous Review Process Yields Improvements

The peer-review process involved eight domain experts who provided critical feedback over several months. This led to multiple revisions addressing:

- Technical implementation details

- Readability challenges

- Language mixing limitations

The team responded by developing a multi-stage training framework combining rejection sampling with supervised fine-tuning, significantly improving the model's writing capabilities.
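The rejection-sampling step can be pictured roughly as follows: sample several candidate responses per prompt, keep only those that pass a quality check, and use the survivors as supervised fine-tuning data. This is a simplified sketch under stated assumptions. `build_sft_dataset`, `toy_generate`, and `toy_accept` are hypothetical names, and the acceptance rule here (an exact-match check) is only a stand-in for whatever correctness and readability criteria the team actually applied.

```python
from itertools import cycle


def build_sft_dataset(prompts, generate, accept, num_candidates=4):
    """Collect (prompt, response) pairs whose responses pass the check.

    generate: callable returning one sampled response for a prompt.
    accept:   callable returning True if a response should be kept.
    """
    dataset = []
    for prompt in prompts:
        for _ in range(num_candidates):
            response = generate(prompt)
            if accept(prompt, response):  # reject everything else
                dataset.append({"prompt": prompt, "response": response})
    return dataset


# Toy stand-ins for a real model and a real quality checker (assumptions).
_toy_outputs = cycle(["4", "5", "four", "4"])


def toy_generate(prompt):
    return next(_toy_outputs)


def toy_accept(prompt, response):
    return response == "4"


sft_data = build_sft_dataset(["What is 2 + 2?"], toy_generate, toy_accept)
print(len(sft_data))  # number of candidates that survived filtering
```

The filtered pairs would then be fed to an ordinary supervised fine-tuning run, so the model learns only from its own outputs that met the chosen standard.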

Implications for Future AI Development

This publication establishes several important precedents:

- Sets benchmark for scientific rigor in LLM research

- Demonstrates viability of peer-reviewed AI development

- Provides template for responsible innovation practices

- Encourages transparency across the industry

The achievement comes as global regulators increasingly focus on establishing standards for trustworthy AI systems.

Key Points:

- First peer-reviewed LLM research published in Nature

- Novel RL-based training approach reduces reliance on human-annotated data while matching leading models

- Process improved model capabilities through academic scrutiny

- Establishes new standard for transparent AI development