DeepMind Unveils On-Device AI for Next-Gen Robotics

DeepMind's Gemini Robotics On-Device: A Leap in Autonomous AI

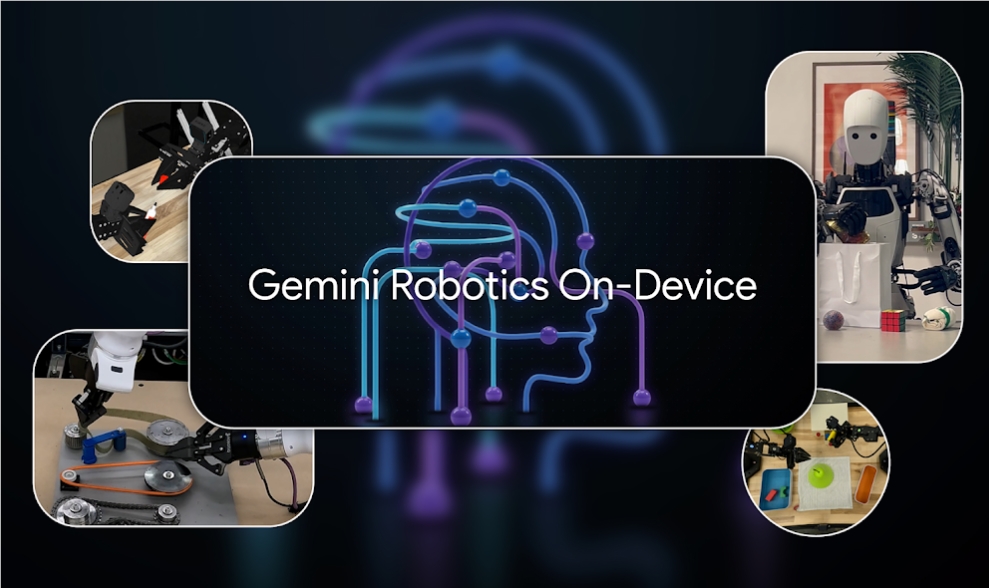

Google DeepMind has officially introduced Gemini Robotics On-Device, marking a significant advancement in robotic artificial intelligence. This next-generation model operates entirely on local hardware, eliminating dependence on cloud computing while delivering robust performance across industrial, warehouse, and domestic applications.

Cloud-Free Operation: Redefining Robotic Independence

The VLA (Vision-Language-Action) model builds on Google's Gemini 2.0 architecture, and its defining capability is that it runs fully offline. This addresses two limitations of cloud-dependent systems: latency, and reliability in environments where network connectivity is unstable.

"This compact yet powerful model runs directly on robot hardware," explained Carolina Parada, Senior Director at DeepMind. "It guarantees stable performance with minimal latency while operating completely offline - a game-changer for field applications."

According to DeepMind's benchmarks, the system performs comparably to its cloud-based counterpart while outperforming other on-device models. This makes it particularly valuable in places with limited or restricted connectivity, such as factories, warehouses, or remote locations.
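The latency argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not anything taken from DeepMind's materials: the function name `fits_control_budget` and all of the timing figures are hypothetical, chosen only to show how a network round trip can use up a robot's control period before an action arrives.

```python
def fits_control_budget(inference_ms: float, network_rtt_ms: float, control_hz: float) -> bool:
    """Return True if one action can be produced within a single control period."""
    if control_hz <= 0:
        raise ValueError("control_hz must be positive")
    period_ms = 1000.0 / control_hz  # time available per control step
    return inference_ms + network_rtt_ms <= period_ms


# Hypothetical numbers for illustration only; they are not measurements.
on_device = fits_control_budget(inference_ms=15.0, network_rtt_ms=0.0, control_hz=50.0)
cloud = fits_control_budget(inference_ms=15.0, network_rtt_ms=40.0, control_hz=50.0)
print(on_device, cloud)  # True False
```

With these assumed figures, a 50 Hz loop leaves 20 ms per step, so the same model fits the budget locally but misses it once a 40 ms round trip is added.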

Rapid Task Adaptation: Learning From Minimal Demonstrations

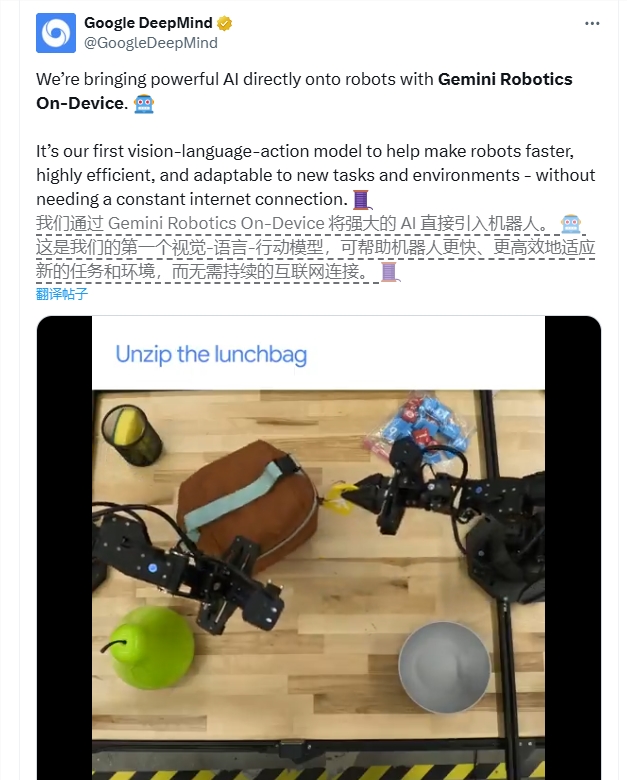

The model adapts to new tasks with as few as 50-100 demonstrations. Examples include:

- Precision garment folding

- Industrial assembly procedures

- Complex zipper manipulation

Originally trained for the ALOHA robot platform, Gemini Robotics On-Device has been adapted to other hardware, including the dual-arm Franka FR3 and Apptronik's Apollo humanoid robot. Operators can direct the robot with natural language instructions, which supports dual-arm coordination and operation in changing environments.

"Generative AI allows our robots to generalize from limited data samples," Parada noted. "This dramatically reduces deployment timelines in complex operational scenarios."

Developer Ecosystem Expansion: SDK Release Accelerates Innovation

Accompanying the launch is a comprehensive Software Development Kit, currently available through DeepMind's GitHub "Trusted Tester" program. The SDK enables:

- Model testing in the MuJoCo physics simulator

- Real-world environment fine-tuning

- Custom task training with minimal demonstrations

This is the first VLA model that DeepMind has made available for fine-tuning, which lowers the barrier to building specialized robotic applications. Early testing shows promising results in novel scenarios such as industrial conveyor operations and delicate object handling.
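To illustrate what training a custom task from a small set of demonstrations might involve, here is a minimal sketch of a demonstration dataset. It is not the SDK's data format, which the article does not describe: the `Step` and `Demonstration` classes, the `ACTION_DIM` value, and the file paths are all hypothetical. The only figure taken from the article is the lower bound of 50 demonstrations.

```python
from dataclasses import dataclass, field
from typing import List

ACTION_DIM = 14  # hypothetical action vector size, for illustration only


@dataclass
class Step:
    image_path: str      # camera frame recorded at this step (vision)
    action: List[float]  # commanded action at this step (action)


@dataclass
class Demonstration:
    instruction: str  # natural language task description (language)
    steps: List[Step] = field(default_factory=list)


def has_enough_demonstrations(demos: List[Demonstration], minimum: int = 50) -> bool:
    """Check the dataset against the article's lower bound of 50 demonstrations."""
    return len(demos) >= minimum


def actions_are_consistent(demos: List[Demonstration], dim: int = ACTION_DIM) -> bool:
    """Check that every recorded action has the expected number of values."""
    return all(len(step.action) == dim for demo in demos for step in demo.steps)


# Build a placeholder dataset: 50 demonstrations of two steps each.
demos = [
    Demonstration(
        instruction="fold the shirt",
        steps=[Step(f"demo{i}/frame{t}.png", [0.0] * ACTION_DIM) for t in range(2)],
    )
    for i in range(50)
]
print(has_enough_demonstrations(demos), actions_are_consistent(demos))  # True True
```

Each demonstration pairs one instruction with a sequence of image and action steps, mirroring the three parts of a Vision-Language-Action model.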

Safety and Industry Implications

The development team emphasizes robust safety protocols created in collaboration with policy experts. The launch intensifies competition in general-purpose robotic AI, positioning Google against initiatives such as Nvidia's GR00T.

The technology's combination of offline operation and rapid learning could revolutionize automation economics across sectors from logistics to domestic services.

Key Points:

- Fully local VLA model with performance comparable to its cloud-based counterpart

- Adapts to new tasks with 50-100 demonstrations

- Cross-platform compatibility demonstrated

- SDK enables customized applications

- Safety protocols developed in collaboration with policy experts