DeepEyesV2: How This Compact AI Outsmarts Bigger Models

DeepEyesV2: The Small AI That Thinks Big

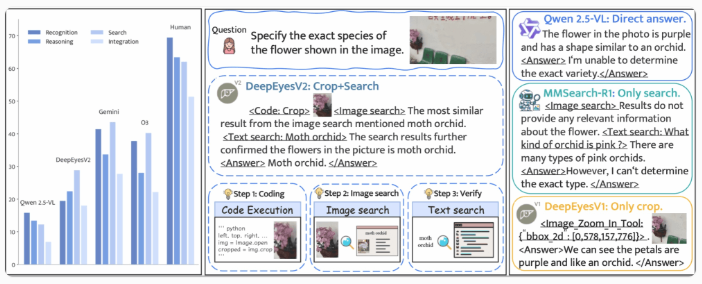

Move over, heavyweight models - there's a new contender in town that proves size isn't everything. Chinese researchers have developed DeepEyesV2, a multimodal AI model that calls external tools to outperform larger competitors.

Smarter, Not Harder

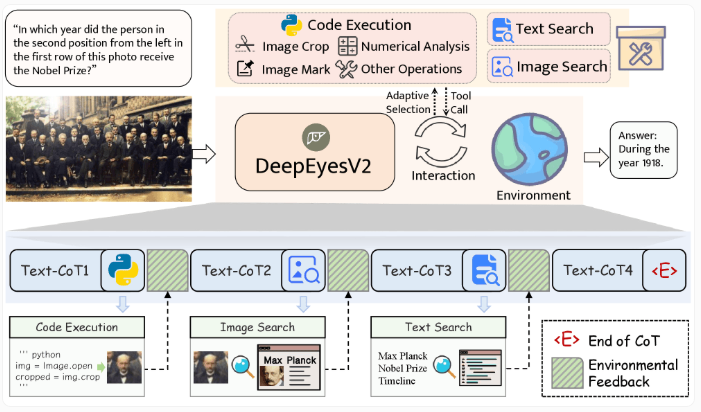

Unlike traditional models that rely solely on pre-trained knowledge, DeepEyesV2 acts more like a resourceful human researcher. When faced with an image analysis task, it might:

- Write Python code to process visual data

- Search for similar images online

- Look up contextual information missing from the picture itself
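The three behaviors above amount to a tool-routing loop: the model names a tool, the tool runs, and the result is fed back as an observation. Here is a minimal sketch of that idea in Python. It is not DeepEyesV2's actual interface: the tool names (`run_code`, `image_search`, `text_search`), the `ToolCall` type, and the `answer_with_tools` helper are all hypothetical, and the tools return placeholder strings instead of executing code or querying the web.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ToolCall:
    """One tool request emitted by the model (hypothetical structure)."""
    name: str      # which tool the model asked for
    argument: str  # a code snippet or a search query


# Hypothetical stand-ins for real tools; each returns a placeholder string.
def run_code(snippet: str) -> str:
    return f"[code output for: {snippet}]"


def image_search(query: str) -> str:
    return f"[similar images for: {query}]"


def text_search(query: str) -> str:
    return f"[web results for: {query}]"


TOOLS: Dict[str, Callable[[str], str]] = {
    "run_code": run_code,
    "image_search": image_search,
    "text_search": text_search,
}


def answer_with_tools(plan: List[ToolCall]) -> List[str]:
    """Run each requested tool in order and collect its observation."""
    observations = []
    for call in plan:
        tool = TOOLS.get(call.name)
        if tool is None:
            # Report unknown tools back to the model rather than crashing.
            observations.append(f"[unknown tool: {call.name}]")
            continue
        observations.append(tool(call.argument))
    return observations


if __name__ == "__main__":
    plan = [
        ToolCall("run_code", "crop(image, box)"),
        ToolCall("text_search", "landmark name"),
    ]
    for observation in answer_with_tools(plan):
        print(observation)
```

In a real system the plan would come from the model one step at a time, with each observation appended to its context before it decides on the next call. The fixed list here just keeps the sketch short.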

The breakthrough came after early struggles. "Initially, our model kept writing buggy code or skipping tools altogether," explains the research team. Their solution? A two-stage training approach that first teaches tool usage fundamentals before refining them through reinforcement learning.

Benchmark Busting Performance

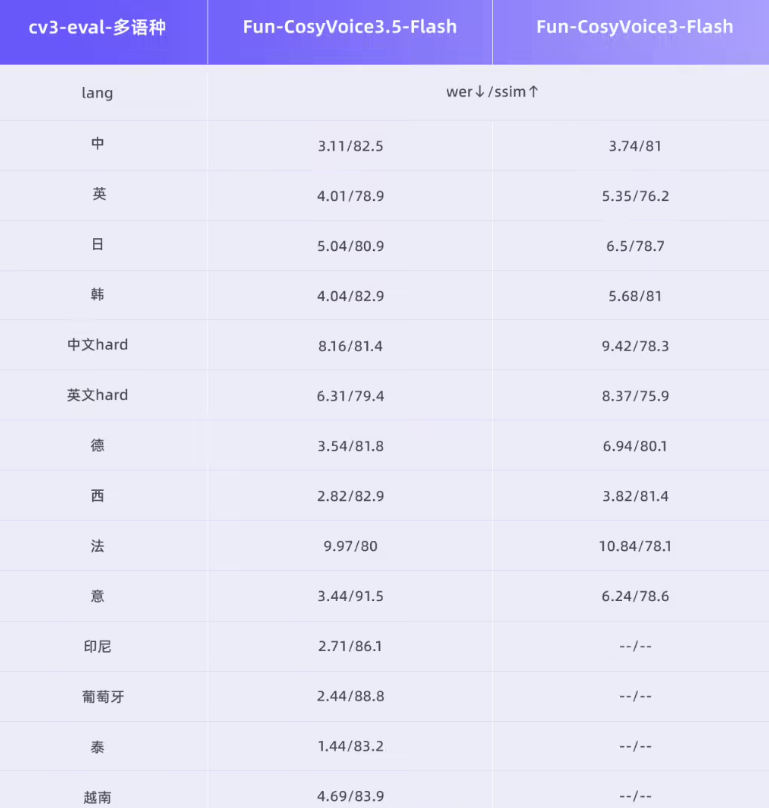

The numbers speak volumes:

- 52.7% accuracy in mathematical reasoning (versus 70% for humans)

- 63.7% success rate in search-driven tasks

- Outperforms proprietary models costing millions to develop

What makes these results remarkable isn't just the percentages - it's how they're achieved. While competitors throw computational power at problems, DeepEyesV2 demonstrates that thoughtful tool selection can compensate for a smaller model size.

Available Now for Developers

The research team has open-sourced DeepEyesV2 under the Apache License 2.0, making it freely available to developers.

The complete technical details are available in their research paper.

Key Points:

- 🔍 Tool mastery beats raw power - Smaller models can compete by intelligently leveraging external resources

- 💡 Two-phase training - Combines foundational learning with behavioral refinement

- 📊 Proven performance - Consistently outperforms larger models across multiple benchmarks