ChatGPT Gets Emotional: OpenAI Adds Custom 'Warmth' Controls

ChatGPT's Emotional Makeover: Now With Adjustable Warmth

Remember when ChatGPT responses felt either stiffly professional or uncomfortably eager? OpenAI's latest update aims to fix that with emotional customization controls that let you set how warm, enthusiastic, or emoji-happy your AI conversations should be.

From Personality Presets to Emotional Fine-Tuning

The November update introduced personality modes like "Professional" and "Quirky," but users wanted more nuance. Now imagine keeping your accountant-AI precise while adding just enough friendliness to avoid feeling like you're talking to a spreadsheet. That's precisely what these new sliders deliver.

"We heard loud and clear that one-size emotional responses don't fit all," says OpenAI product lead Jamie Chen. "Now you can have an academic researcher who occasionally cracks jokes or a creative writing partner who knows when to dial back the exclamation points."

The Goldilocks Problem: Finding AI's Emotional Sweet Spot

This update didn't come from nowhere. It's OpenAI's response to months of whiplash in user feedback:

- February 2025: Users revolted against GPT-5's "emotionally flat" responses

- June 2025: An update swung too far, creating what critics called "sycophant mode"

- September 2025: GPT-5.1 attempted balance but still left many wanting customization

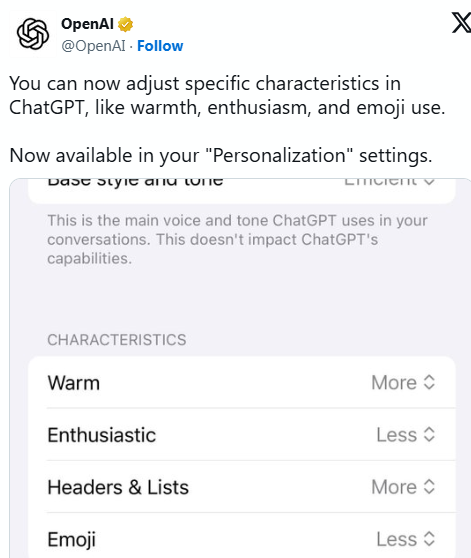

The new controls appear in Settings > Personalization as three intuitive sliders:

- Warmth: Adjusts friendliness and supportive language

- Energy: Controls enthusiasm and verbal expressiveness

- Visual Flair: Manages emoji and formatting frequency
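To make the three sliders concrete, here is a minimal sketch of how such settings might be modeled and turned into a plain-language style instruction. It is purely illustrative and not OpenAI's implementation or API: the `ToneSettings` class, the `describe_tone` function, the 0 to 10 scale, and the low/moderate/high cutoffs are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToneSettings:
    """Hypothetical container for the three sliders (assumed scale: 0 to 10)."""
    warmth: int = 5
    energy: int = 5
    visual_flair: int = 5

    def __post_init__(self) -> None:
        # Reject values outside the assumed slider range.
        for name in ("warmth", "energy", "visual_flair"):
            value = getattr(self, name)
            if not 0 <= value <= 10:
                raise ValueError(f"{name} must be between 0 and 10, got {value}")


def _level(value: int) -> str:
    """Bucket a slider value into a coarse label (cutoffs are assumptions)."""
    if value <= 3:
        return "low"
    if value <= 6:
        return "moderate"
    return "high"


def describe_tone(settings: ToneSettings) -> str:
    """Render the slider values as a plain-language style instruction."""
    return (
        f"Use {_level(settings.warmth)} warmth, "
        f"{_level(settings.energy)} energy, "
        f"and {_level(settings.visual_flair)} emoji and formatting."
    )
```

Under these assumptions, the precise-but-friendly accountant described earlier might be `ToneSettings(warmth=6, energy=2, visual_flair=0)`, which `describe_tone` renders as "Use moderate warmth, low energy, and low emoji and formatting."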

Experts Sound Cautious Notes Behind the Celebration

While users cheer the added control, psychologists and AI ethicists raise important questions:

"There's a dangerous allure to creating AI companions that always respond exactly how we want," warns Dr. Elena Rodriguez of MIT's Human-AI Interaction Lab. "We're essentially building emotional echo chambers—great for customer service bots, potentially problematic for mental health."

The settings include subtle warnings about overuse, in particular a recommendation against maximum warmth for extended conversations. It seems even OpenAI recognizes we might love a praise-heavy AI a little too much.

The question remains: in giving us control over AI emotions, are we humanizing technology, or mechanizing human connection?

Key Points:

- New sliders allow precise adjustment of ChatGPT's emotional tone

- Update responds to past criticisms of both robotic and overly eager responses

- Experts caution about potential psychological impacts of customizable AI emotions

- Settings include warnings about overuse, including a recommendation against maximum warmth in extended conversations