ByteDance Unveils ProtoReasoning to Boost AI Logic Skills

Bytedance's ProtoReasoning Framework Advances AI Logical Capabilities

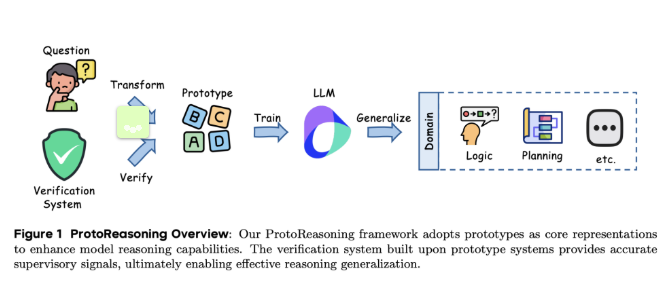

ByteDance, in collaboration with Shanghai Jiao Tong University, has introduced ProtoReasoning, a framework designed to strengthen the logical reasoning abilities of large language models (LLMs). The approach uses structured prototype representations written in formal languages such as Prolog and PDDL to improve problem solving across domains.

Breaking New Ground in AI Reasoning

The research team notes that current LLMs show impressive flexibility across domains ranging from mathematics to creative writing, yet the mechanisms behind this adaptability remain poorly understood. ProtoReasoning rests on the hypothesis that these models rely on abstract reasoning prototypes: shared reasoning patterns that transfer across different problem types.

"Our work suggests that large language models might be learning fundamental reasoning patterns that apply broadly," explained the research team. "By explicitly structuring these patterns through formal representations, we can significantly enhance their performance."

Framework Architecture and Implementation

The ProtoReasoning system comprises two core components:

- Prototype Builder: converts natural-language queries into formal representations (Prolog or PDDL)

- Validation System: verifies solution correctness using domain-specific validators
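
To make the builder/validator split concrete, here is a minimal Python sketch for a single family-relations template. It is not the paper's implementation, which the article does not describe: `FACT_PATTERN`, `GRANDPARENT_RULE`, `Prototype`, `build_prototype`, and `validate` are all hypothetical names, and the validator re-evaluates the rule in Python only so the example runs without an installed Prolog system.

```python
import re
from dataclasses import dataclass

# Hypothetical template: only sentences like "Alice is the parent of Bob." are parsed.
FACT_PATTERN = re.compile(r"(\w+) is the parent of (\w+)\.")

# Hypothetical rule appended to every generated Prolog program.
GRANDPARENT_RULE = "grandparent(X, Z) :- parent(X, Y), parent(Y, Z)."


@dataclass
class Prototype:
    facts: list[tuple[str, str]]  # (parent, child) pairs, lower-cased
    program: str                  # Prolog source an external checker could load
    query: str                    # Prolog goal the model is asked to solve


def build_prototype(text: str, person: str) -> Prototype:
    """Toy prototype builder: English statements -> Prolog facts plus a goal."""
    # Lower-casing keeps names as Prolog atoms (capitalized names would be variables).
    facts = [(a.lower(), b.lower()) for a, b in FACT_PATTERN.findall(text)]
    clauses = [f"parent({a}, {b})." for a, b in facts] + [GRANDPARENT_RULE]
    return Prototype(facts, "\n".join(clauses), f"grandparent({person.lower()}, X)")


def validate(proto: Prototype, person: str, answer: set[str]) -> bool:
    """Toy validator: evaluate the grandparent rule in Python and compare.

    A real pipeline would run proto.program and proto.query in a Prolog
    system such as SWI-Prolog instead of re-implementing the rule here.
    """
    p = person.lower()
    children = {child for parent, child in proto.facts if parent == p}
    grandchildren = {child for parent, child in proto.facts if parent in children}
    return grandchildren == {name.lower() for name in answer}


if __name__ == "__main__":
    text = "Alice is the parent of Bob. Bob is the parent of Carol."
    proto = build_prototype(text, "Alice")
    print(proto.program)
    print("?-", proto.query)
    print(validate(proto, "Alice", {"Carol"}))  # True
```

The point of the split is that the validator never trusts the model's answer: it recomputes the result from the formal representation, which is what makes automatically generated training data checkable.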

For logic-programming tasks, the researchers built a four-step pipeline that generates diverse problems and verifies their answers with the SWI-Prolog interpreter. Planning tasks are expressed in PDDL (Planning Domain Definition Language), and their solutions are checked with the VAL plan validator.
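
The core idea behind plan validation can be sketched in a few lines. The Python below is a simplified stand-in for what a checker such as VAL does, restricted to grounded STRIPS-style actions (no types, parameters, numeric fluents, or conditional effects); `Action`, `make_action`, `validate_plan`, and the robot-and-ball domain are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A grounded STRIPS-style action (no types, parameters, or numeric effects)."""
    name: str
    pre: frozenset[str]
    add: frozenset[str]
    delete: frozenset[str]


def make_action(name, pre, add, delete):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def validate_plan(init, goal, actions, plan):
    """Simulate `plan` from the initial state and return (ok, message)."""
    state = set(init)
    for step, name in enumerate(plan, start=1):
        if name not in actions:
            return False, f"step {step}: unknown action {name}"
        act = actions[name]
        missing = act.pre - state
        if missing:
            return False, f"step {step}: {name} missing {sorted(missing)}"
        # STRIPS/PDDL semantics: remove delete effects, then apply add effects.
        state = (state - act.delete) | act.add
    unmet = set(goal) - state
    if unmet:
        return False, f"goal not reached: {sorted(unmet)}"
    return True, "plan valid"


if __name__ == "__main__":
    actions = {
        "move_a_b": make_action("move_a_b", {"at_a"}, {"at_b"}, {"at_a"}),
        "pick_ball_b": make_action(
            "pick_ball_b",
            {"at_b", "ball_at_b", "hand_free"},
            {"holding_ball"},
            {"ball_at_b", "hand_free"},
        ),
    }
    init = {"at_a", "ball_at_b", "hand_free"}
    goal = {"holding_ball"}
    print(validate_plan(init, goal, actions, ["move_a_b", "pick_ball_b"]))  # (True, 'plan valid')
    # Fails at step 1: the robot is not yet at location b.
    print(validate_plan(init, goal, actions, ["pick_ball_b"]))
```

Returning the failing step alongside the verdict mirrors how plan checkers report errors, which makes it easy to tell an invalid action sequence apart from a valid sequence that simply misses the goal.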

Performance Breakthroughs

Experiments used a Mixture-of-Experts (MoE) model with 150 billion total parameters, of which 15 billion are active per token, trained on curated Prolog and PDDL samples. Results showed:
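
The gap between total and active parameters comes from how Mixture-of-Experts models work: a router sends each token to only a few experts, so roughly 15/150 = 10% of the weights take part in any single forward pass. The article gives no details about this model's router, expert count, or top-k setting, so the Python below is a generic top-k gating sketch with made-up sizes and scalar "experts"; it is not ByteDance's architecture.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the routed experts' outputs; unrouted experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


def active_fraction(total_params, active_params):
    """Share of parameters used per token, e.g. 15B active out of 150B total."""
    return active_params / total_params


if __name__ == "__main__":
    # Four toy "experts" that just scale their scalar input.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.1, 2.0, -1.0, 1.0]  # made-up router scores for one token
    print(top_k_route(logits, k=2))  # experts 1 and 3 are selected
    print(moe_forward(1.0, experts, logits, k=2))
    print(active_fraction(150e9, 15e9))  # 0.1
```

This variant applies the softmax only over the selected experts; other MoE designs normalize over all experts before selection. Either way, compute per token scales with the active parameters rather than the total.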

- 23% improvement in logical reasoning benchmarks

- 18% gain in planning task accuracy

- Comparable performance to natural language training in some logic domains

The framework particularly excelled in maintaining reasoning consistency across varied problem types, suggesting successful transfer of abstract reasoning patterns.

Future Research Directions

Although the results are promising, the researchers acknowledge that the concept of reasoning prototypes needs deeper theoretical exploration. Planned next steps include:

- Mathematical formalization of prototype concepts

- Validation using open-source models and datasets

- Expansion to additional formal representation systems

The team has made their research paper publicly available on arXiv to encourage broader academic collaboration.

Key Points:

- 🚀 ProtoReasoning framework enhances LLM logic through Prolog/PDDL prototypes

- 🧠 Shows significant improvements in cross-domain reasoning tasks

- 🔍 Future work will focus on theoretical foundations and open validation