ByteDance's POLARIS Boosts Small AI Models to Rival Large Ones

ByteDance's POLARIS: A Breakthrough in Small Model Performance

ByteDance's Seed team, working with researchers from the University of Hong Kong and Fudan University, has developed POLARIS, a reinforcement learning (RL) training method that substantially improves the reasoning ability of small AI models. The team has open-sourced the complete project, including the training method, data, code, and experimental models.

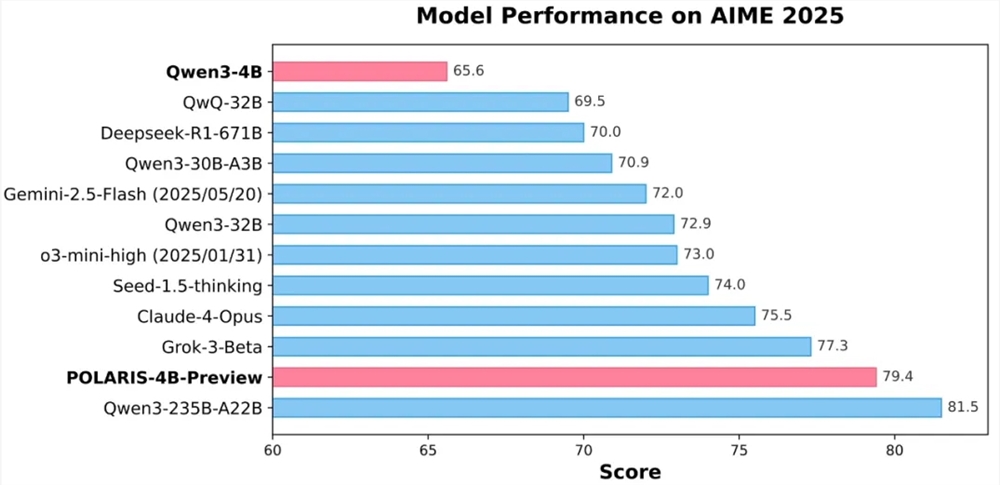

Closing the Gap Between Small and Large Models

The research shows that POLARIS-trained models can perform comparably to much larger counterparts. Most notably, a model trained with POLARIS on top of the 4-billion-parameter Qwen3-4B reached 79.4% accuracy on AIME25 and 81.2% on AIME24, competition math problem sets drawn from the American Invitational Mathematics Examination. These scores surpass some closed-source models with far more parameters.

Technical Innovations Behind POLARIS

The breakthrough stems from several key innovations:

- Customized Training Strategy: Training data and hyperparameters are tailored to the specific model being trained rather than reused unchanged across models.

- Dynamic Data Adjustment: The pool of training samples is updated during training based on the model's performance, and problems the model already solves reliably are removed so the remaining data stays challenging.

- Temperature Control: The researchers tuned the sampling temperature to balance accuracy against the diversity of generated outputs.

- Length Extrapolation: By adjusting the rotary position embedding (RoPE), models can handle sequences longer than those seen during training.

- Multi-stage RL Training: Training starts with a shorter context window, which is gradually extended in later stages as the model stabilizes.
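Two of the techniques above, dynamic data adjustment and RoPE-based length extrapolation, can be illustrated with a minimal sketch. This is not POLARIS's code. The `Problem` record, the `drop_solved_problems` and `rope_angles` helpers, and the `max_pass_rate` threshold are all hypothetical. For length extrapolation, the sketch uses plain linear position interpolation as a simple stand-in; the exact RoPE modification POLARIS applies may differ.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    # Hypothetical record: a problem ID plus the outcome of each sampled rollout
    problem_id: str
    rollout_correct: list[bool]

    @property
    def pass_rate(self) -> float:
        # Fraction of rollouts that produced a correct answer
        return sum(self.rollout_correct) / len(self.rollout_correct)


def drop_solved_problems(pool: list[Problem], max_pass_rate: float = 1.0) -> list[Problem]:
    """Keep only problems whose pass rate is below max_pass_rate.

    max_pass_rate is a hypothetical threshold: the default of 1.0 removes
    only problems the model answered correctly on every rollout.
    """
    return [p for p in pool if p.pass_rate < max_pass_rate]


def rope_angles(position: int, head_dim: int, base: float = 10000.0,
                scale: float = 1.0) -> list[float]:
    """Rotation angles for one token position under standard RoPE.

    With scale > 1, the position is divided by `scale` (linear position
    interpolation), so a sequence `scale` times longer than the training
    length maps back into the angle range the model saw during training.
    """
    scaled_pos = position / scale
    # Standard RoPE frequencies: base^(-2i/d) for i = 0 .. d/2 - 1
    return [scaled_pos * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


pool = [
    Problem("easy", [True, True, True, True]),
    Problem("medium", [True, False, True, False]),
    Problem("hard", [False, False, False, False]),
]
print([p.problem_id for p in drop_solved_problems(pool)])  # ['medium', 'hard']
```

In this sketch, re-running `drop_solved_problems` after each round of rollouts keeps shrinking the pool toward problems the model still gets wrong at least some of the time. With `scale=2.0`, position 8192 receives the same angles that position 4096 receives without scaling.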

Practical Advantages

The lightweight nature of POLARIS-trained models offers significant practical benefits:

- Can be deployed on consumer-grade graphics cards

- Lower computational requirements reduce costs and energy consumption

- Opens AI capabilities to organizations without access to supercomputing resources
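A rough back-of-the-envelope calculation shows why a 4-billion-parameter model fits on consumer hardware. The sketch below assumes about 4 billion parameters and counts only the weights. The KV cache and activations need additional memory, especially for long reasoning outputs. The int8 and int4 rows assume a quantized deployment, which the source does not mention.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


# Assumed: ~4e9 parameters; int8/int4 rows assume quantization (not from the source)
for label, nbytes in [("bf16", 2), ("int8", 1), ("int4", 0.5)]:
    print(f"{label}: {weight_memory_gib(4e9, nbytes):.1f} GiB")
# bf16: 7.5 GiB
# int8: 3.7 GiB
# int4: 1.9 GiB
```

At bf16 precision, the weights alone come to roughly 7.5 GiB, which fits within the memory of many consumer graphics cards.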

The research team has made all components publicly available on GitHub and Hugging Face.

Key Points

- ByteDance's POLARIS method lets small AI models rival much larger ones in mathematical reasoning

- A POLARIS-trained model based on the 4B-parameter Qwen3-4B outperformed some much larger closed-source models on the AIME24 and AIME25 math benchmarks

- Innovative techniques include dynamic data adjustment and length extrapolation

- Lightweight design enables deployment on consumer hardware

- Complete project open-sourced for community use and development