ByteDance's BAGEL: Open-Source AI Model Breaks New Ground in Multimodal Tasks

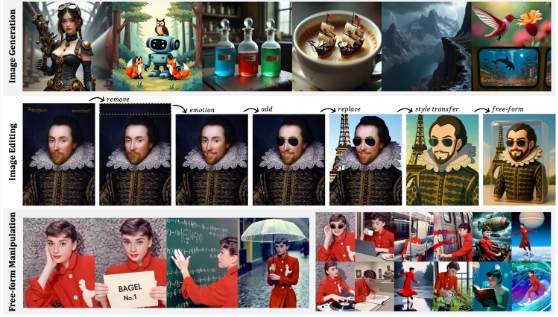

ByteDance has released BAGEL, an open-source multimodal foundation model that handles both text and images. With 7 billion active parameters (14 billion in total), it performs strongly across understanding, generation, and editing tasks.

According to ByteDance's reported benchmarks, BAGEL outperforms leading open-source vision-language models such as Qwen2.5-VL and InternVL-2.5 on multimodal understanding. Its text-to-image quality is competitive with specialist generators such as Stable Diffusion 3, and it delivers stronger results than leading open-source models in complex image-editing scenarios.

Architectural Innovation

At its core, BAGEL uses a Mixture-of-Transformer-Experts (MoT) design intended to maximize what the model can learn from diverse multimodal data. Visual input passes through two separate encoders: one captures pixel-level detail, the other extracts semantic features. The model is trained with a "next group of token prediction" objective, in which it learns to predict the next group of language or visual tokens as a compression target.
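To make the MoT idea more concrete, here is a minimal sketch of one layer in plain Python. It assumes that every token attends over the whole sequence through a shared attention step and is then passed to one of two experts chosen by a tag. The tag names (`understanding`, `generation`), the toy affine experts, and the function names are all illustrative assumptions, not BAGEL's actual implementation.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Token = Tuple[str, Vector]  # (hypothetical routing tag, embedding)


def shared_attention(vectors: List[Vector]) -> List[Vector]:
    """Single-head dot-product self-attention over ALL tokens in the sequence."""
    dim = len(vectors[0])
    mixed = []
    for query in vectors:
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        mixed.append([
            sum(w / total * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ])
    return mixed


def make_expert(scale: float, bias: float) -> Callable[[Vector], Vector]:
    """Toy stand-in for an expert's own parameters (affine map followed by ReLU)."""
    return lambda x: [max(0.0, scale * xi + bias) for xi in x]


def mot_layer(
    tokens: List[Token],
    experts: Dict[str, Callable[[Vector], Vector]],
) -> List[Token]:
    """Mix information across all tokens, then route each token to its expert."""
    mixed = shared_attention([vec for _, vec in tokens])
    return [(tag, experts[tag](vec)) for (tag, _), vec in zip(tokens, mixed)]


# Two experts with different (made-up) parameters.
experts = {
    "understanding": make_expert(1.0, 0.0),
    "generation": make_expert(2.0, 0.5),
}
tokens = [
    ("understanding", [1.0, 0.0]),
    ("generation", [0.0, 1.0]),
    ("understanding", [0.5, 0.5]),
]
output = mot_layer(tokens, experts)
for tag, vec in output:
    print(tag, [round(x, 3) for x in vec])
```

The point of the sketch is the split of responsibilities: attention lets every token see every other token, while the per-tag experts keep separate parameters, so capacity can be specialized without breaking the single shared sequence.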

Training and Capabilities

BAGEL was pretrained on trillions of multimodal tokens drawn from text, image, video, and web data. That scale supports capabilities beyond basic understanding and generation:

- Free-form image editing with contextual awareness

- Future frame prediction in video sequences

- Sophisticated 3D object manipulation

- Virtual world navigation simulations

Performance improves steadily as training progresses. Understanding and generation skills appear early, while advanced editing capabilities emerge only later in training.

The researchers also found that combining features from a variational autoencoder (VAE) and a vision transformer (ViT) markedly improves intelligent editing performance. This finding underscores the importance of visual-semantic context in complex multimodal reasoning tasks.
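As a rough illustration of what "combining" the two feature types can mean, the sketch below assembles the context an editing step might condition on, with and without the semantic (ViT) tokens. The function name, tag names, token counts, and ordering are assumptions made for illustration; the article does not specify how BAGEL lays out its sequence.

```python
from typing import List, Optional, Tuple

Token = Tuple[str, int]  # (source tag, position within that source)


def build_edit_context(
    prompt_len: int,
    vae_len: int,
    vit_len: Optional[int] = None,
) -> List[Token]:
    """Assemble a hypothetical token sequence for one image-editing step."""
    context: List[Token] = [("text", i) for i in range(prompt_len)]
    if vit_len is not None:
        # Semantic tokens: a summary of what the image depicts.
        context += [("vit", i) for i in range(vit_len)]
    # Pixel-level latents: a summary of what the image looks like.
    context += [("vae", i) for i in range(vae_len)]
    return context


pixel_only = build_edit_context(prompt_len=4, vae_len=6)
combined = build_edit_context(prompt_len=4, vae_len=6, vit_len=3)
print(len(pixel_only), len(combined))
```

In an ablation of this kind, the `pixel_only` context gives the model appearance information alone, while the `combined` context adds semantic tokens describing the same image, which is the setting the finding above associates with better editing.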

The model is now available on Hugging Face, inviting developers to explore its potential applications across creative and analytical domains.

Key Points

- BAGEL is an open-source multimodal foundation model with 7B active parameters (14B total)

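- Outperforms leading open-source vision-language models on understanding benchmarks, is competitive with Stable Diffusion 3 in text-to-image quality, and leads in advanced editing tasks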

- Mixture-of-Transformer-Experts (MoT) architecture processes visual and textual data within a single model

- Shows emergent capabilities such as 3D manipulation as training progresses

- Combining VAE and ViT features improves intelligent editing, pointing to the importance of visual-semantic context