BAAI Releases Video-XL-2: A Breakthrough in Ultra-Long Video Analysis

The Beijing Academy of Artificial Intelligence (BAAI), in collaboration with Shanghai Jiao Tong University, has released Video-XL-2, an open-source model for analyzing and understanding ultra-long video content. It targets one of the hardest problems in video AI: processing lengthy videos efficiently without sacrificing accuracy.

Technical Architecture

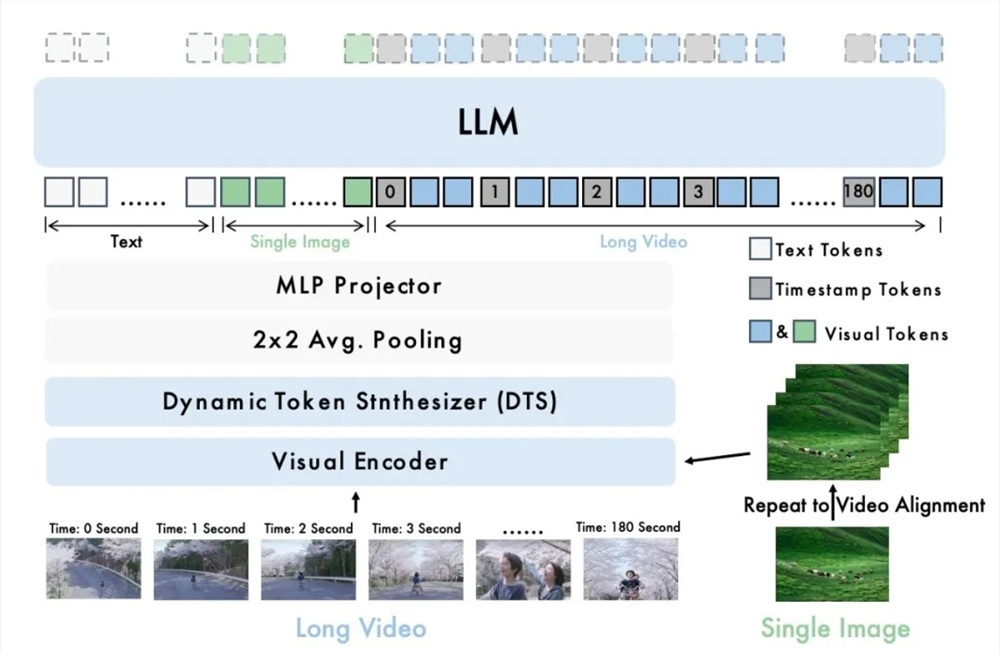

At its core, Video-XL-2 combines three components:

- A visual encoder (SigLIP-SO400M) that processes video frames

- A Dynamic Token Synthesis module for feature compression and temporal analysis

- The Qwen2.5-Instruct language model for final reasoning and task completion

Together, these components convert visual features into representations that the language model can process alongside text, so a single model can reason over both the video and the user's question.
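The data flow through these three components can be sketched in plain Python. This is a toy under loud assumptions. The function names are hypothetical. The "encoder" simply normalizes hand-made feature vectors. Average pooling over fixed temporal windows stands in for Dynamic Token Synthesis, whose actual design is not described here. Placing visual tokens before text tokens is also an assumed ordering. The sketch only illustrates the shape of the pipeline: many frame features go in, fewer visual tokens come out, and those tokens are projected to the language model's embedding width.

```python
from typing import List

Vector = List[float]


def encode_frames(frames: List[Vector]) -> List[Vector]:
    """Hypothetical stand-in for the visual encoder: L2-normalize each frame's features."""
    encoded = []
    for frame in frames:
        norm = sum(x * x for x in frame) ** 0.5 or 1.0  # avoid dividing by zero
        encoded.append([x / norm for x in frame])
    return encoded


def synthesize_tokens(features: List[Vector], window: int) -> List[Vector]:
    """Placeholder for Dynamic Token Synthesis (NOT its real design):
    average-pool every `window` consecutive frame features into one visual token."""
    tokens = []
    for start in range(0, len(features), window):
        group = features[start:start + window]
        tokens.append([sum(col) / len(group) for col in zip(*group)])
    return tokens


def project(tokens: List[Vector], weight: List[Vector]) -> List[Vector]:
    """Linear map into the language model's embedding width (weight: visual_dim x llm_dim)."""
    return [[sum(t_i * w_ij for t_i, w_ij in zip(t, col)) for col in zip(*weight)]
            for t in tokens]


def build_llm_input(frames, window, weight, text_embeddings):
    """Visual tokens first, then text tokens (an assumed ordering)."""
    visual = project(synthesize_tokens(encode_frames(frames), window), weight)
    return visual + text_embeddings


# 8 toy frames with 4-dim features, pooled in windows of 4 -> 2 visual tokens.
frames = [[float(i), 1.0, 0.0, 0.0] for i in range(8)]
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
text = [[0.5, 0.5, 0.5]]  # one toy text-token embedding
sequence = build_llm_input(frames, window=4, weight=weight, text_embeddings=text)
print(len(sequence), len(sequence[0]))  # 3 3
```

The compression step is what keeps long videos tractable: with a window of 4, the language model sees a quarter as many visual tokens as there are frames.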

Performance and Efficiency

What sets Video-XL-2 apart is its efficiency:

- Processes 10,000-frame videos on a single GPU

- Completes 2048-frame prefilling in just 12 seconds

- Shows linear scalability as video length increases
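The reported figures can be related with simple arithmetic. The following is an idealized back-of-envelope estimate. It assumes prefilling time grows strictly in proportion to frame count, starting from the reported 2048 frames in 12 seconds. The function names are hypothetical, and the 10,000-frame number is an extrapolation, not a reported measurement.

```python
REF_FRAMES = 2048   # reported reference point: 2048 frames...
REF_SECONDS = 12.0  # ...prefilled in about 12 seconds


def prefill_throughput() -> float:
    """Frames prefilled per second at the reported reference point."""
    return REF_FRAMES / REF_SECONDS


def linear_prefill_estimate(frames: int) -> float:
    """Estimated prefill time in seconds, ASSUMING time scales strictly linearly with frames."""
    return REF_SECONDS * frames / REF_FRAMES


print(f"{prefill_throughput():.1f} frames/s")                              # 170.7 frames/s
print(f"{linear_prefill_estimate(10_000):.1f} s for 10,000 frames (idealized)")  # 58.6 s ...
```

Real prefilling time also depends on hardware and implementation details, so the result is only an order-of-magnitude check.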

The model achieves this through two key innovations:

- Chunk-based Prefilling: Divides videos into manageable segments for parallel processing

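- Bi-granularity KV Decoding: Allocates computing resources by segment importance, keeping the full (dense) KV cache for the segments most relevant to the task and a downsampled (sparse) KV cache for the rest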
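Both ideas can be illustrated with a toy sketch. Every name here is hypothetical. Each frame's feature vector stands in for its transformer KV entries. Chunks are processed one after another for simplicity. The relevance score, a dot product between a query vector and each chunk's mean feature, is an assumed stand-in for however the real model decides which segments matter. Stride-based downsampling is likewise an assumed way to make the "sparse" KV.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class ChunkKV:
    start: int             # index of the chunk's first frame
    entries: List[Vector]  # toy KV entries, one per frame


def chunked_prefill(frames: List[Vector], chunk_size: int) -> List[ChunkKV]:
    """Split the video into fixed-size chunks and build a toy KV cache per chunk."""
    return [ChunkKV(start=s, entries=frames[s:s + chunk_size])
            for s in range(0, len(frames), chunk_size)]


def relevance(query: Vector, chunk: ChunkKV) -> float:
    """Hypothetical relevance score: dot product of the query with the chunk's mean feature."""
    n = len(chunk.entries)
    mean = [sum(e[d] for e in chunk.entries) / n for d in range(len(query))]
    return sum(q * m for q, m in zip(query, mean))


def bi_granularity_kv(chunks: List[ChunkKV], query: Vector,
                      top_k: int, stride: int) -> List[List[Vector]]:
    """Dense KV (every entry) for the top_k most relevant chunks;
    sparse KV (every `stride`-th entry) for all remaining chunks."""
    order = sorted(range(len(chunks)),
                   key=lambda i: relevance(query, chunks[i]), reverse=True)
    dense = set(order[:top_k])
    return [c.entries if i in dense else c.entries[::stride]
            for i, c in enumerate(chunks)]


# 12 frames in 3 chunks; only the middle chunk matches the query direction.
frames = [[1.0, 0.0]] * 4 + [[0.0, 1.0]] * 4 + [[1.0, 0.0]] * 4
chunks = chunked_prefill(frames, chunk_size=4)
kv = bi_granularity_kv(chunks, query=[0.0, 1.0], top_k=1, stride=2)
print([len(k) for k in kv])  # [2, 4, 2]
```

The design trade-off is visible in the output: the relevant chunk keeps all four entries, while the others shrink by half, so decoding attends over 8 entries instead of 12.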

Benchmark Dominance

In benchmark evaluations, Video-XL-2 reported the following results:

- Outperformed all lightweight competitors on MLVU, VideoMME, and LVBench benchmarks

- Matched or exceeded the performance of much larger 72B-parameter models

- Set new records on the Charades-STA temporal grounding task

The model's practical applications span multiple industries, from film analysis to security monitoring. For example, it could be used to summarize feature films or to detect anomalies in hours of surveillance footage.

Availability and Future Impact

The research team has made Video-XL-2 fully accessible to the public:

- Project Homepage: https://unabletousegit.github.io/video-xl2.github.io/

- Model Link: https://huggingface.co/BAAI/Video-XL-2

- Repository: https://github.com/VectorSpaceLab/Video-XL

As video content continues its explosive growth across platforms, tools like Video-XL-2 will become increasingly vital for extracting meaningful insights from this visual data deluge.

Key Points

- Video-XL-2 processes ultra-long videos of up to 10,000 frames efficiently on a single GPU

- The model combines visual encoding with advanced temporal analysis and language understanding

- Matches or exceeds much larger models while using significantly fewer resources

- Open-source availability accelerates development in video AI applications