Apple Open-Sources FastVLM and MobileCLIP2, Boosting AI on iPhones

Apple has quietly released two cutting-edge vision-language models (VLMs), FastVLM and MobileCLIP2, on the Hugging Face platform. These models are optimized for Apple Silicon devices and promise significant advancements in edge AI capabilities.

FastVLM: A Breakthrough in Speed and Efficiency

FastVLM is designed for high-resolution image processing and can run on Apple's MLX framework. Compared with LLaVA-OneVision at the same 0.5B parameter scale, it cuts time to first token (TTFT) by a factor of 85 and uses a visual encoder that is 3.4 times smaller, while matching that model's performance on key benchmarks.
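
TTFT is the delay between submitting a prompt (for a VLM, an image plus text) and receiving the first output token, and an "N-fold" improvement is simply the baseline time divided by the new time. The sketch below shows one way to measure TTFT for any streaming token generator. `fake_model` is a hypothetical stand-in that sleeps to mimic encoding and prefill; it is not FastVLM's API.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_ttft(generate: Callable[[], Iterable[str]]) -> Tuple[float, str]:
    """Return (seconds until the first token arrives, the first token)."""
    start = time.perf_counter()
    stream: Iterator[str] = iter(generate())
    first = next(stream)  # blocks until the model emits its first token
    return time.perf_counter() - start, first


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is: baseline time / candidate time."""
    return baseline_s / candidate_s


def fake_model() -> Iterator[str]:
    # Hypothetical model: the sleep stands in for image encoding + prompt prefill.
    time.sleep(0.05)
    yield "A"
    yield " cat"


ttft, token = measure_ttft(fake_model)
print(f"first token {token!r} after {ttft:.3f} s")
```

Only the wait for the first token is timed, because that is the latency a user notices before any answer appears.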

The model's FastViT-HD hybrid visual encoder combines convolutional layers with Transformer modules and produces 16 times fewer visual tokens than traditional ViT encoders. Fewer visual tokens leave the language model less input to process before it can start answering, which makes FastVLM well suited to mobile devices such as iPhones. It runs entirely on the device without cloud dependency, in line with Apple's privacy-first approach.
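
A quick calculation shows why downsampling matters so much. For a square image, the number of visual tokens is (resolution / stride)², so quadrupling the total downsampling stride divides the token count by 16. The strides used below (16 for a plain ViT, 64 for a hybrid encoder) and the 1024-pixel resolution are illustrative assumptions, not FastViT-HD's published configuration.

```python
def num_visual_tokens(resolution: int, stride: int) -> int:
    """Tokens produced for a square image when each token covers a stride x stride region."""
    if resolution % stride != 0:
        raise ValueError("resolution must be divisible by stride")
    side = resolution // stride
    return side * side


# Hypothetical strides for illustration: a plain ViT with 16x16 patches versus
# a hybrid encoder whose convolutional stages downsample by 64 overall.
RES = 1024
vit_tokens = num_visual_tokens(RES, 16)     # 64 * 64 = 4096
hybrid_tokens = num_visual_tokens(RES, 64)  # 16 * 16 = 256
print(vit_tokens, hybrid_tokens, vit_tokens // hybrid_tokens)  # 4096 256 16
```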

MobileCLIP2: Lightweight Multimodal Powerhouse

MobileCLIP2, a lightweight variant of the CLIP architecture, focuses on efficient image-text feature alignment. It retains CLIP's zero-shot learning capabilities while optimizing for edge devices. Paired with FastVLM, it enables real-time multimodal tasks like image search and smart assistant interactions.
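
CLIP-style zero-shot classification embeds the image and a text prompt for each candidate label into a shared space, then picks the label whose text embedding is most similar to the image embedding. The sketch below assumes the embeddings have already been produced by the image and text encoders; the toy 3-D vectors and the temperature of 0.01 are illustrative assumptions, not MobileCLIP2 outputs or settings.

```python
import math
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb: Sequence[float],
                    label_embs: Dict[str, Sequence[float]],
                    temperature: float = 0.01) -> Dict[str, float]:
    """Softmax over cosine similarities between the image and each label's text embedding."""
    img = normalize(image_emb)
    logits = {}
    for label, emb in label_embs.items():
        txt = normalize(emb)
        logits[label] = sum(a * b for a, b in zip(img, txt)) / temperature
    m = max(logits.values())  # subtract the max logit for numerical stability
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


# Toy embeddings standing in for real encoder outputs (hypothetical values).
image = [0.9, 0.1, 0.0]
labels = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
probs = zero_shot_probs(image, labels)
best = max(probs, key=probs.get)
print(best)  # a photo of a dog
```

Image search uses the same similarity score with the roles swapped: embed the text query once, then rank stored image embeddings by their cosine similarity to it.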

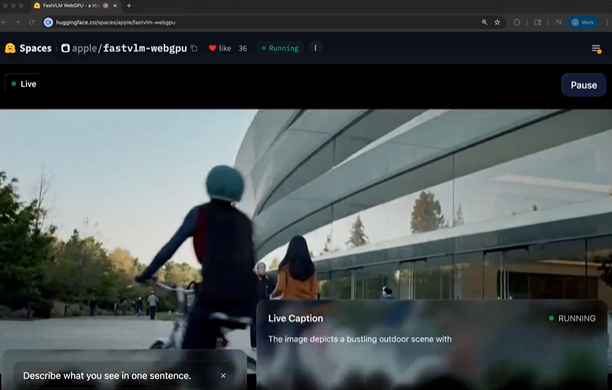

Real-Time Video Analysis in Browsers

A standout feature is FastVLM's ability to describe video scenes in real time inside a browser, using WebGPU. It can analyze video content and generate text descriptions with very low latency, which opens doors for AR glasses, accessibility tools, and more.

Apple's AI Ecosystem Ambitions

The open-sourcing of these models hints at Apple's broader strategy:

- Building on-device agents that carry out tasks (e.g., analyzing screen content, collecting data).

- Reducing cloud reliance while enhancing privacy.

- Expanding its edge AI ecosystem for wearables and other hardware.

Developer Resources

Both models are open-sourced on Hugging Face. FastVLM is accompanied by iOS/macOS demo apps and a technical paper on arXiv.

Key Points:

- 85x faster time to first token (TTFT) with FastVLM than LLaVA-OneVision at the same 0.5B scale.

- Local processing keeps data on the device, supporting privacy.

- Real-time video analysis in browsers via WebGPU.

- Open-source release empowers developers for edge AI apps.

- Part of Apple's push toward device-side AI ecosystems.