Anthropic Unveils Token Counting API for Enhanced Claude Models

In the rapidly evolving field of artificial intelligence (AI), using language models efficiently matters to developers and data scientists. To address this, Anthropic has introduced a Token Counting API that gives developers more control over token usage across its Claude language models, with the aim of making interactions more efficient and improving the user experience.

The Importance of Token Management

Tokens are the basic units a language model reads and generates: depending on the text, a token may be a whole word, part of a word, or a punctuation mark. Managing them well matters because token usage directly affects cost, output quality, and the overall user experience. By keeping track of token usage, developers can reduce API costs and make sure prompts leave enough room for complete responses.

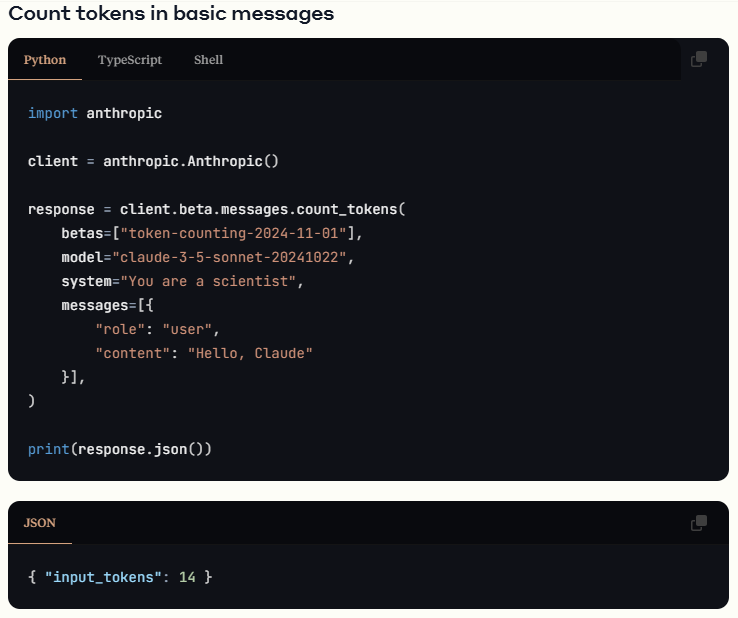

The newly launched Token Counting API lets developers count the tokens in a message before sending it to a Claude model, without generating a response. It accepts the same kind of input as a regular message request (such as the system prompt and the conversation messages) and returns the number of input tokens. As a result, developers can estimate token usage in advance and optimize their prompts before making generation calls.
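Because counting is a separate request that returns a number instead of a generated message, it can be illustrated with nothing but the standard library. The sketch below assumes the endpoint `https://api.anthropic.com/v1/messages/count_tokens`, the `anthropic-version: 2023-06-01` header, and a JSON response containing an `input_tokens` field, as described in Anthropic's API reference at the time of writing; the helper names `build_count_tokens_request` and `count_tokens` are hypothetical, and the model ID is only an example.

```python
import json
import urllib.request

# Assumed endpoint and API version header; verify against Anthropic's API reference.
COUNT_TOKENS_URL = "https://api.anthropic.com/v1/messages/count_tokens"
API_VERSION = "2023-06-01"


def build_count_tokens_request(api_key: str, model: str, messages: list) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for the prompt's input-token count."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        COUNT_TOKENS_URL,
        data=body,
        method="POST",
        headers={
            "x-api-key": api_key,
            "anthropic-version": API_VERSION,
            "content-type": "application/json",
        },
    )


def count_tokens(api_key: str, model: str, messages: list) -> int:
    """Send the request and read the `input_tokens` field from the JSON response."""
    request = build_count_tokens_request(api_key, model, messages)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["input_tokens"]


# Example call (needs network access and a valid key; the model ID is only an example):
# import os
# n = count_tokens(
#     os.environ["ANTHROPIC_API_KEY"],
#     "claude-3-5-sonnet-20241022",
#     [{"role": "user", "content": "Summarize this paragraph in one sentence."}],
# )
```

Separating request construction from sending keeps the payload easy to inspect or log without spending a network round trip.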

Features and Benefits of the Token Counting API

The Token Counting API currently supports several Claude models, including Claude 3.5 Sonnet, Claude 3.5 Haiku, Claude 3 Haiku, and Claude 3 Opus. Developers can obtain token counts with a few lines of code in popular languages such as Python and TypeScript.
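In Python, the official `anthropic` SDK wraps the counting endpoint. The sketch below is a minimal example assuming a recent SDK version in which `client.messages.count_tokens(...)` returns an object with an `input_tokens` attribute; in some SDK versions, particularly from the feature's beta period, the method may sit under `client.beta.messages` instead, so check the documentation for the version you use. The helpers `build_count_kwargs` and `count_prompt_tokens` are hypothetical, and the default model ID is only an example.

```python
from typing import Any, Optional

# Example model ID (an assumption); see Anthropic's model list for current identifiers.
DEFAULT_MODEL = "claude-3-5-sonnet-20241022"


def build_count_kwargs(prompt: str, system: Optional[str] = None, model: str = DEFAULT_MODEL) -> dict:
    """Assemble keyword arguments for counting the tokens of a single user turn."""
    kwargs: dict = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if system is not None:
        # The system prompt also consumes input tokens, so count it if it will be sent.
        kwargs["system"] = system
    return kwargs


def count_prompt_tokens(client: Any, prompt: str, system: Optional[str] = None,
                        model: str = DEFAULT_MODEL) -> int:
    """Return the input-token count reported by the client's messages.count_tokens method."""
    result = client.messages.count_tokens(**build_count_kwargs(prompt, system, model))
    return result.input_tokens


# Usage (requires `pip install anthropic`, network access and ANTHROPIC_API_KEY):
# import anthropic
# client = anthropic.Anthropic()
# print(count_prompt_tokens(client, "Translate 'hello' into French.", system="Be concise."))
```

Taking the client as a parameter keeps the helper easy to test with a stand-in object and avoids creating a new client on every call.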

Key features include:

- Accurate Token Estimation: The API gives developers reliable estimates of token counts, so they can optimize their inputs and stay within model limits.

- Enhanced Token Optimization: Knowing a prompt's size in advance helps developers leave enough room for the model's output, which reduces truncated responses in complex applications and improves response quality.

- Cost Control: Because developers can measure prompts before sending them, they can keep API spending under control, which is particularly useful for startups and projects with tight budgets.

The Token Counting API can help developers build more efficient customer support chatbots, more precise document summarization tools, and interactive learning platforms. By giving developers accurate insight into token usage, Anthropic gives them more control over how they use its models, making it easier to refine prompt content and reduce development costs.
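The limit and cost checks described above can be sketched as a pre-flight step that runs after counting and before generation. This is a hypothetical helper, not part of Anthropic's API: the `ModelBudget` type and the `fits_context`, `worst_case_cost`, and `preflight` functions are illustrative, and the example figures (a 200,000-token context window and $3 / $15 per million input / output tokens for Claude 3.5 Sonnet) should be checked against Anthropic's current model and pricing pages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelBudget:
    """Limits and prices for one model; fill these in from Anthropic's official docs."""
    context_window: int            # tokens available for prompt plus response
    input_price_per_mtok: float    # USD per million input tokens
    output_price_per_mtok: float   # USD per million output tokens


# Illustrative figures for Claude 3.5 Sonnet; verify against the current pricing page.
EXAMPLE_BUDGET = ModelBudget(context_window=200_000, input_price_per_mtok=3.0, output_price_per_mtok=15.0)


def fits_context(input_tokens: int, max_tokens: int, budget: ModelBudget) -> bool:
    """Conservative check: the prompt plus the full output allowance fits the window."""
    return input_tokens + max_tokens <= budget.context_window


def worst_case_cost(input_tokens: int, max_tokens: int, budget: ModelBudget) -> float:
    """Upper-bound cost in USD, assuming the response uses every allowed output token."""
    return (input_tokens * budget.input_price_per_mtok
            + max_tokens * budget.output_price_per_mtok) / 1_000_000


def preflight(input_tokens: int, max_tokens: int, budget: ModelBudget, max_usd: float) -> bool:
    """Approve a request only if it fits the context window and the per-request budget."""
    return fits_context(input_tokens, max_tokens, budget) and worst_case_cost(
        input_tokens, max_tokens, budget) <= max_usd
```

Here `input_tokens` would be the number returned by the token-counting call; when `preflight` returns `False`, the prompt can be trimmed and counted again before the generation request is sent. Treating `max_tokens` as the output size is deliberately pessimistic, since many responses are shorter.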

Conclusion

The Token Counting API is a meaningful addition to the tools available to developers working with language models. By simplifying token management, Anthropic aims to help developers save time and resources in a fast-moving field.

For official details, see Anthropic's documentation.

Key Points

- The Token Counting API helps developers manage token usage accurately, improving development efficiency.

- Tracking token usage helps control API costs, making the API well suited to cost-sensitive projects.

- The API supports multiple Claude models, allowing flexible use across various application scenarios.