Anthropic's Claude Neptune AI Enters Testing, Rivaling GPT-5 and Gemini

Artificial intelligence company Anthropic is preparing to launch its latest model, Claude Neptune, which has entered internal security testing. According to the AI news outlet TestingCatalog, the model is positioned to compete with OpenAI’s GPT-5 and Google’s Gemini Ultra in a rapidly evolving AI market.

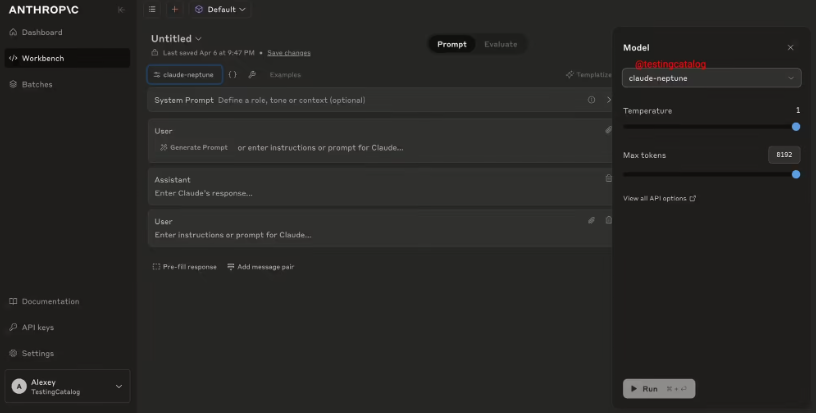

Claude Neptune’s safety evaluations are being run in Anthropic Workbench and center on red-team exercises that probe the model’s resistance to jailbreak attacks. The tests, scheduled to conclude on May 18, focus primarily on the model’s constitutional classifiers, a cornerstone of Anthropic’s security framework. Early results reportedly suggest that Claude Neptune is more sensitive to security threats and has stronger defenses than its predecessors.

Industry analysts expect an official release in late May or early June. Beyond competing with established models, Claude Neptune is expected to introduce advanced multimodal and agent capabilities, which could shift competitive dynamics in the market. Its design emphasizes user safety and privacy, responding to growing concerns about vulnerabilities in AI systems.

Anthropic’s approach reflects a broader industry trend toward secure, ethical AI development. As demand for sophisticated AI solutions grows, Claude Neptune could set new standards for reliability in complex applications, from healthcare diagnostics to financial analysis.

Will this be the model that finally balances cutting-edge performance with robust safeguards? The tech world is watching closely.

Key Points

- Claude Neptune is in final security testing, targeting jailbreak resistance and safety protocols.

- The model could debut in late May or early June, competing directly with GPT-5 and Gemini Ultra.

- Enhanced multimodal and agent capabilities may give Anthropic a competitive edge.

- Privacy-centric design addresses critical industry concerns about AI risks.