Anthropic Open-Sources AI Transparency Tool to Decode Model Decisions

Anthropic, the artificial intelligence research company, has open-sourced its "Circuit Tracing" tool, a step toward making AI systems more transparent. Announced on May 29, the tool gives researchers a way to visualize and analyze how large language models (LLMs) arrive at their outputs.

Visualizing the AI Thought Process

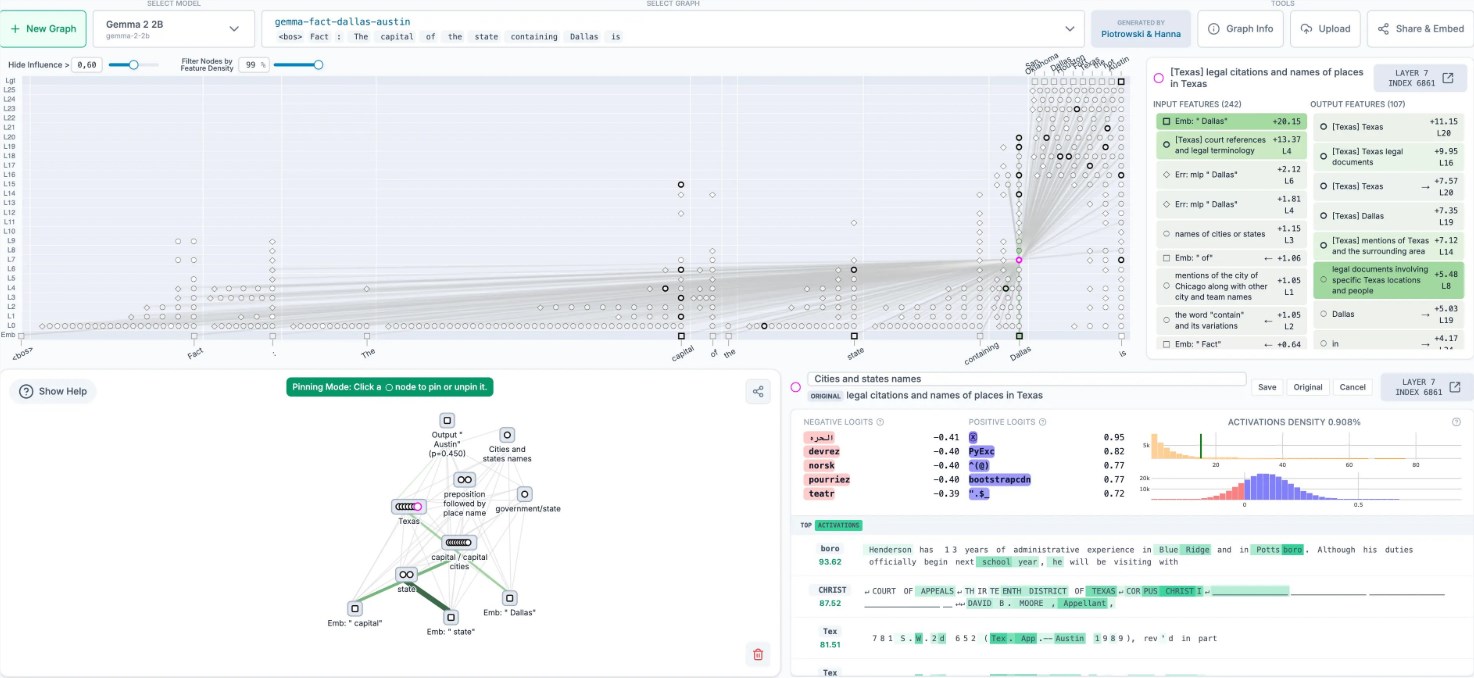

The Circuit Tracing tool creates attribution graphs that map how information flows through a model from input to output for a given prompt. These graphs show which internal features contributed most to a response, offering a partial view of the "thought process" behind the model's output.
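
To make the idea of an attribution graph concrete, here is a minimal sketch in plain Python. It does not use the Circuit Tracing library or its API. The node names, edge weights, and the rule of scoring a path by the product of its edge weights are all assumptions chosen for illustration, and the graph is assumed to contain no cycles.

```python
from collections import defaultdict

# Hypothetical toy graph: each edge weight stands in for an attribution score.
EDGES = [
    ("token:Dallas", "feature:Texas", 0.8),
    ("token:capital", "feature:say-a-capital", 0.9),
    ("feature:Texas", "logit:Austin", 0.7),
    ("feature:say-a-capital", "logit:Austin", 0.6),
    ("token:Dallas", "logit:Austin", 0.1),
]


def build_graph(edges):
    """Turn (source, target, weight) triples into an adjacency list."""
    graph = defaultdict(list)
    for src, dst, weight in edges:
        graph[src].append((dst, weight))
    return graph


def ranked_paths(graph, sources, target):
    """List every path from a source to the target, strongest first.

    A path's score is the product of its edge weights (an illustrative
    simplification, not the tool's actual scoring rule).
    """
    results = []

    def walk(node, path, score):
        if node == target:
            results.append((score, path))
            return
        for nxt, weight in graph.get(node, []):
            walk(nxt, path + [nxt], score * weight)

    for source in sources:
        walk(source, [source], 1.0)
    return sorted(results, key=lambda item: item[0], reverse=True)


if __name__ == "__main__":
    graph = build_graph(EDGES)
    for score, path in ranked_paths(graph, ["token:Dallas", "token:capital"], "logit:Austin"):
        print(f"{score:.2f}  " + " -> ".join(path))
```

In this toy graph, the two paths that pass through an intermediate feature score far higher than the direct token-to-output edge. An attribution graph is meant to surface that kind of structure.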

"This gives us a microscope to examine neural activity in ways we couldn't before," explained an Anthropic researcher. The tool identifies critical decision points where specific inputs trigger particular outputs, helping developers understand why models sometimes produce unexpected or biased results.

Interactive Analysis with Neuronpedia

To make these findings accessible, the release includes an interactive frontend hosted on Neuronpedia. Researchers can now:

- Adjust input parameters in real time

- Track how changes affect model outputs

- Test hypotheses about model behavior

The interface allows even non-experts to explore complex neural networks through intuitive visualizations. Detailed guides help users navigate the system and interpret results accurately.
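
The hypothesis-testing workflow described above can be sketched as a simple intervention experiment: change one internal feature, rerun the model, and compare the outputs. The example below is a toy linear model in plain Python, not Neuronpedia or the Circuit Tracing library. Every feature name, activation, and weight is hypothetical.

```python
# Hypothetical feature activations for one prompt.
ACTIVATIONS = {"Texas": 2.0, "say-a-capital": 1.5, "sports": 0.2}

# Hypothetical weights from each feature to each candidate output token.
WEIGHTS = {
    "Austin": {"Texas": 1.0, "say-a-capital": 0.8, "sports": 0.0},
    "Dallas": {"Texas": 0.9, "say-a-capital": -0.5, "sports": 0.3},
}


def logits(activations):
    """Score each output token as a weighted sum of feature activations."""
    return {
        token: sum(weight * activations[feature] for feature, weight in row.items())
        for token, row in WEIGHTS.items()
    }


def intervene(activations, feature, value):
    """Return a copy of the activations with one feature overridden."""
    patched = dict(activations)
    patched[feature] = value
    return patched


def effect_of(feature, value=0.0):
    """Change in each output score when `feature` is set to `value`."""
    base = logits(ACTIVATIONS)
    patched = logits(intervene(ACTIVATIONS, feature, value))
    return {token: patched[token] - base[token] for token in base}


if __name__ == "__main__":
    # Hypothesis: the "say-a-capital" feature favors "Austin" over "Dallas".
    for token, delta in effect_of("say-a-capital").items():
        print(f"{token}: {delta:+.2f}")
```

In this toy setup, switching off the "say-a-capital" feature lowers the score for "Austin" and raises the score for "Dallas", which supports the hypothesis. Real models are not linear in this way, so the sketch shows only the shape of the experiment.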

Breaking Open the Black Box

AI transparency has become increasingly crucial as language models are deployed in sensitive areas like healthcare, finance, and legal systems. Anthropic's open-source approach enables broader collaboration on explainability research while addressing growing concerns about:

- Potential biases in model outputs

- Hallucinations or false information generation

- Ethical implications of opaque decision-making

The project was developed by participants in the Anthropic Fellows program in collaboration with Decode Research, showing how research partnerships can advance responsible AI development.

What This Means for AI's Future

Circuit Tracing could prove significant for building trustworthy AI systems. As models become more transparent:

- Developers can optimize performance more effectively

- Organizations can implement better safeguards against errors

- Regulators gain tools to assess system reliability

The technology may also influence ongoing debates about AI governance by providing concrete data about how models actually function rather than relying on theoretical frameworks.

Key Points

- Anthropic's Circuit Tracing tool visually maps decision pathways in large language models

- Interactive Neuronpedia interface allows real-time experimentation with model parameters

- Open-source release enables broader research into AI explainability and safety

- Technology addresses critical concerns about bias, hallucinations, and ethical deployment

- Could establish new standards for transparency in increasingly powerful AI systems