Aliyun's ThinkSound AI Revolutionizes Video Sound Effects

Alibaba Open-Sources Groundbreaking AI Audio Tool

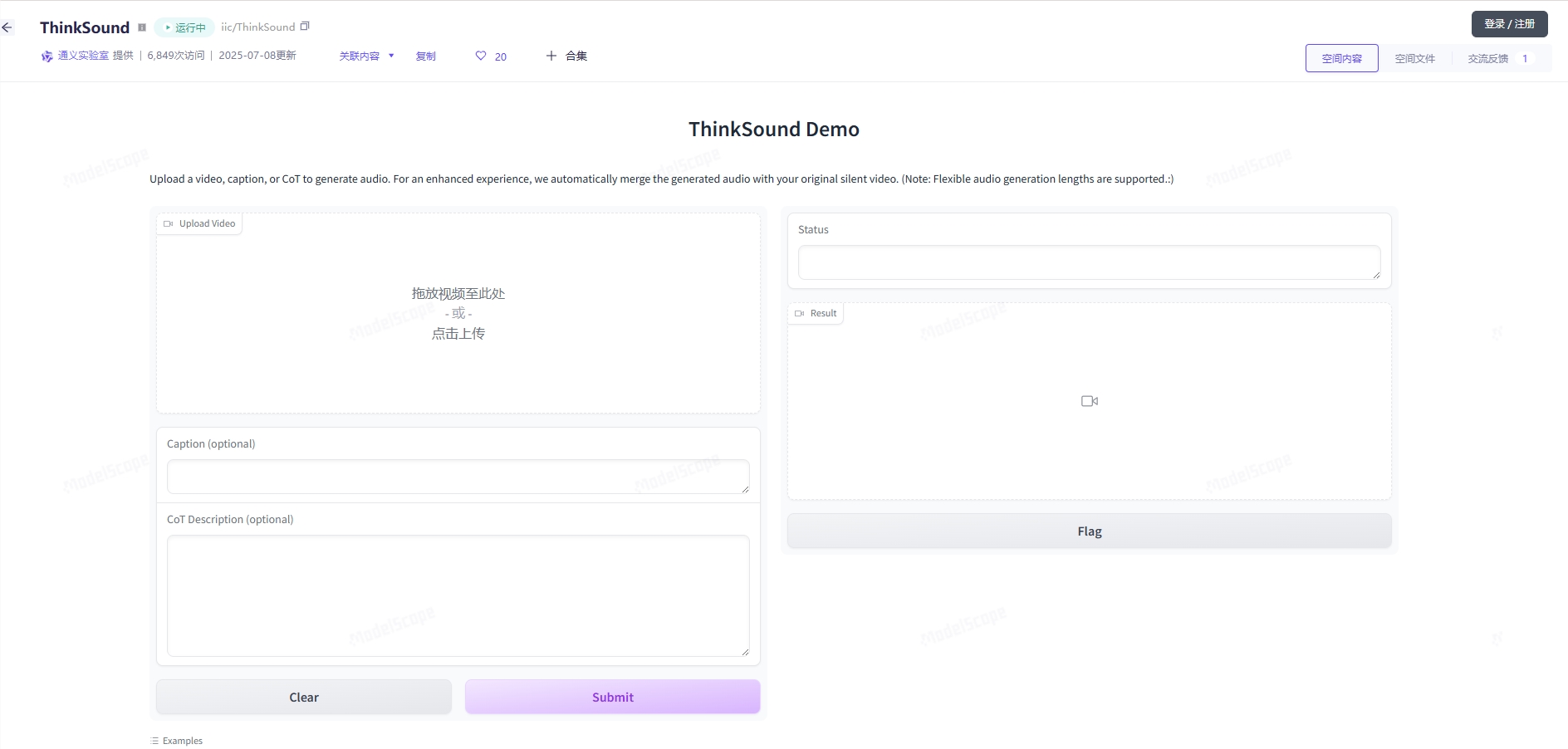

In a significant move for the creative industries, Alibaba's Tongyi Lab has open-sourced ThinkSound, its first audio generation model capable of automatically producing high-quality sound effects for videos. Released in July 2025, this multimodal AI represents a major leap forward in automated content creation.

The AI Sound Designer

ThinkSound functions as a virtual sound designer, using Chain-of-Thought (CoT) reasoning to analyze a video's scenes, actions, and emotional tone. It then generates matching audio elements, including:

- Environmental sounds (wind, water, urban noise)

- Character dialogues

- Object interaction effects

- Background music cues

The model accepts several kinds of input: video files, text descriptions, or existing audio clips, used either separately or in combination. Users can then refine the output with natural language instructions.
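The flexible input scheme can be pictured as a request object in which any mix of video, text, and audio is allowed as long as at least one is present, with follow-up instructions layered on top. The sketch below is purely illustrative: `SoundRequest` and its fields and methods are hypothetical names chosen for this example, not ThinkSound's actual API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SoundRequest:
    """Hypothetical request: any combination of video, text and audio conditioning."""
    video_path: Optional[str] = None    # a clip to generate sound for
    text_prompt: Optional[str] = None   # e.g. "footsteps on gravel"
    audio_path: Optional[str] = None    # an existing clip to extend or edit
    refinements: list[str] = field(default_factory=list)  # follow-up instructions

    def __post_init__(self) -> None:
        # Inputs may be combined freely, but at least one must be supplied.
        if not (self.video_path or self.text_prompt or self.audio_path):
            raise ValueError("at least one of video, text or audio is required")

    def modalities(self) -> list[str]:
        """Names of the supplied modalities, in a fixed order."""
        pairs = [("video", self.video_path), ("text", self.text_prompt), ("audio", self.audio_path)]
        return [name for name, value in pairs if value]

    def refine(self, instruction: str) -> "SoundRequest":
        """Record a natural-language refinement such as 'make the rain heavier'."""
        self.refinements.append(instruction)
        return self
```

For example, `SoundRequest(video_path="clip.mp4", text_prompt="rain on a tin roof").modalities()` returns `["video", "text"]`, while `SoundRequest()` raises `ValueError` because no input was given.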

Technical Breakthroughs

ThinkSound's architecture combines three core AI disciplines:

- Computer vision for frame-by-frame video analysis

- Natural language processing for text-based instructions

- Audio generation for high-fidelity sound production

The system demonstrates exceptional synchronization capabilities across various video formats (MP4, MOV, AVI, MKV) and resolutions up to 4K. Benchmark tests show superior performance in audio-visual alignment compared to existing solutions.
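A pipeline that feeds clips into a model with these stated limits might check them before submission. The helper below is a hypothetical pre-flight check, not part of ThinkSound: it assumes "4K" means UHD 3840×2160 (accepting portrait orientation too) and looks only at the file extension, not the actual codec inside the container.

```python
from pathlib import Path

# Containers named in the article; checking the extension is only a heuristic.
SUPPORTED_CONTAINERS = {".mp4", ".mov", ".avi", ".mkv"}
# Assumption: "4K" is taken as UHD 3840x2160 (DCI 4K would be 4096x2160).
MAX_LONG_SIDE, MAX_SHORT_SIDE = 3840, 2160


def check_clip(path: str, width: int, height: int) -> list[str]:
    """Return a list of problems; an empty list means the clip passes."""
    problems = []
    suffix = Path(path).suffix.lower()
    if suffix not in SUPPORTED_CONTAINERS:
        problems.append(f"unsupported container: {suffix or '(none)'}")
    # Compare long and short sides so portrait clips are judged the same way.
    long_side, short_side = max(width, height), min(width, height)
    if long_side > MAX_LONG_SIDE or short_side > MAX_SHORT_SIDE:
        problems.append(f"resolution {width}x{height} exceeds 4K UHD")
    return problems
```

For instance, `check_clip("scene.MOV", 3840, 2160)` returns an empty list, while `check_clip("scene.webm", 1920, 1080)` reports the unsupported container.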

Open Source Accessibility

Alibaba has made ThinkSound's model weights and inference scripts publicly available through:

- Hugging Face

- ModelScope

- GitHub

This follows Alibaba's pattern of open-source contributions including the Qwen language model and Wan2.1 video generator (3.3M+ downloads combined). The move significantly lowers barriers for:

- Independent filmmakers

- Game developers

- Academic researchers

- Small creative studios

The package includes interactive editing features allowing precise sound effect adjustments via click-and-drag interfaces or voice commands.

Industry Applications

ThinkSound transforms workflows across multiple sectors:

Film & Television

- Automated post-production sound design

- Dialogue generation with lip-sync accuracy

- Rapid soundtrack prototyping

Gaming

- Dynamic environmental audio generation

- Character voice synthesis

- Real-time sound effect creation

Education & Media

- Accessible content creation tools

- Multilingual narration generation

- Interactive learning materials development

Early adopters report dramatic reductions in production timelines while maintaining professional-grade audio quality.

Future Developments

The release positions Alibaba as a leader in multimodal AI alongside its existing innovations in video (Wan2.1) and speech generation (Qwen-TTS). Future updates may include:

- Enhanced emotional expression algorithms

- Personalized voice synthesis

- Real-time generation capabilities

Industry analysts predict widespread adoption as third-party developers build specialized applications on the open-source platform.

---

Key Points:

- ✓ First open-source multimodal audio generation model from Alibaba

- ✓ Creates synchronized sound effects from video and text inputs

- ✓ Supports professional formats up to 4K resolution

- ✓ Available on major developer platforms including GitHub

- ✓ Transforms content production in film, gaming, and education