Alibaba Open-Sources MoE-Based Video Generation Model

Alibaba Breaks New Ground with Open-Source Video AI

Alibaba Cloud has open-sourced its Tongyi Wanxiang Wan 2.2 video generation model. The release comprises three models:

- Text-to-video (Wan 2.2-T2V-A14B)

- Image-to-video (Wan 2.2-I2V-A14B)

- Unified video generation (Wan 2.2-TI2V-5B)

Revolutionary MoE Architecture

The defining feature of Wan 2.2 is its Mixture of Experts (MoE) architecture, which Alibaba describes as a first for video generation models. The design targets the computational cost of video synthesis by activating only part of the model at each step:

- Total parameters: 27 billion

- Active parameters: 14 billion

- Computational savings: ~50% compared to traditional architectures
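As a quick sanity check on the figures above, the savings can be estimated from the parameter counts alone. This is a back-of-the-envelope sketch that assumes per-step compute scales linearly with the number of active parameters. That is a simplification, not a measured result.

```python
# Rough estimate: compute per denoising step is ASSUMED to be
# proportional to the number of active parameters.
TOTAL_PARAMS_B = 27   # total parameters in billions (from the article)
ACTIVE_PARAMS_B = 14  # active parameters in billions (from the article)

active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
estimated_savings = 1 - active_fraction

print(f"Active fraction: {active_fraction:.1%}")
print(f"Estimated savings vs. a dense 27B model: {estimated_savings:.1%}")
```

Under that assumption the estimate comes out at roughly 48%, consistent with the quoted figure of about 50%.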

The experts are specialized by stage of the denoising process:

- High-noise experts: Handle overall video composition

- Low-noise experts: Focus on detailed refinement

This division of labor enables superior performance in complex motion generation, character interactions, and aesthetic quality.
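The division of labor described above can be sketched as a simple routing rule: at each denoising step, exactly one expert runs, chosen by the current noise level. The code below is a toy illustration only. The function names, the `boundary` value of 0.5, and the linear noise schedule are all hypothetical and are not taken from the Wan 2.2 code base.

```python
from typing import Callable, List, Tuple

Latent = List[float]

# Hypothetical stand-ins for the two expert networks. Real experts would be
# large diffusion models; these only scale the values so the loop is runnable.
def high_noise_expert(latent: Latent, noise_level: float) -> Latent:
    """Coarse pass, standing in for overall composition."""
    return [x * 0.5 for x in latent]

def low_noise_expert(latent: Latent, noise_level: float) -> Latent:
    """Fine pass, standing in for detail refinement."""
    return [x * 0.9 for x in latent]

def select_expert(noise_level: float, boundary: float = 0.5) -> Callable[[Latent, float], Latent]:
    """Route to the high-noise expert early on and the low-noise expert later.

    `boundary` is an assumed switching threshold, chosen for illustration.
    """
    return high_noise_expert if noise_level >= boundary else low_noise_expert

def denoise(latent: Latent, num_steps: int = 10, boundary: float = 0.5) -> Tuple[Latent, List[str]]:
    """Run a toy denoising loop and record which expert handled each step."""
    used: List[str] = []
    for step in range(num_steps):
        noise_level = 1.0 - step / num_steps  # assumed linear schedule, 1.0 down to 0.1
        expert = select_expert(noise_level, boundary)
        latent = expert(latent, noise_level)  # only ONE expert runs per step
        used.append(expert.__name__)
    return latent, used

final_latent, experts_used = denoise([1.0, -2.0, 0.5])
print(experts_used)
```

Because only one expert runs per step, the per-step cost tracks the active parameter count rather than the total, which is where the efficiency gain comes from.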

Cinematic Quality Control System

Wan 2.2 introduces a pioneering film aesthetics control system that brings professional-grade cinematic effects to AI-generated videos. Users can achieve specific visual styles through keyword combinations:

| Style Elements | Example Keywords | Resulting Effect |
|---|---|---|

The system demonstrates particular strength in rendering subtle details like micro-expressions and lighting transitions.
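To make the idea of keyword combinations concrete, here is a minimal sketch of how a prompt might be assembled from style keywords. The `build_prompt` helper, the category names, and the example keywords are all hypothetical illustrations. They are not drawn from the Wan 2.2 documentation, and the model does not require this format.

```python
from typing import Dict

# Hypothetical style categories, applied in a fixed order for reproducibility.
STYLE_ORDER = ("lighting", "color", "composition")

def build_prompt(subject: str, style_keywords: Dict[str, str]) -> str:
    """Join a subject description with any style keywords that were supplied."""
    parts = [subject]
    for category in STYLE_ORDER:
        keyword = style_keywords.get(category)
        if keyword:  # skip categories the user left out
            parts.append(keyword)
    return ", ".join(parts)

# Illustrative keywords only; not an official keyword list.
prompt = build_prompt(
    "a fisherman mending nets at the harbor",
    {"lighting": "soft rim light", "color": "warm tones", "composition": "center composition"},
)
print(prompt)
```

Keeping the style keywords separate from the subject makes it easy to vary one element at a time and compare the resulting clips.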

Accessible High-Performance Model

The open-source package includes a compact 5B parameter unified model designed for practical deployment:

- Supports both text-to-video and image-to-video functions

- Uses high-compression 3D VAE architecture (4×16×16 compression ratio)

- Generates 720p videos at 24fps

- Runs on consumer GPUs with 22GB of VRAM

This makes Wan 2.2 one of the most accessible high-quality video generation models currently available.
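The effect of the 4×16×16 compression ratio can be illustrated with a little arithmetic. The sketch below assumes a 1280×720 frame size for "720p" and a 5-second clip, and it uses plain ceiling division. The real VAE may pad inputs or treat the first frame differently, so these figures are indicative only.

```python
import math
from typing import Tuple

def latent_grid(num_frames: int, height: int, width: int,
                ratio: Tuple[int, int, int] = (4, 16, 16)) -> Tuple[int, int, int]:
    """Return the (time, height, width) size of the latent grid.

    `ratio` is the (temporal, vertical, horizontal) compression from the article.
    Ceiling division is an assumption about how partial blocks are handled.
    """
    t, h, w = ratio
    return (math.ceil(num_frames / t), math.ceil(height / h), math.ceil(width / w))

FPS = 24                 # from the article
CLIP_SECONDS = 5         # assumed clip length, for illustration
HEIGHT, WIDTH = 720, 1280  # assumed 720p frame size

frames = FPS * CLIP_SECONDS
grid = latent_grid(frames, HEIGHT, WIDTH)

pixel_positions = frames * HEIGHT * WIDTH
latent_positions = grid[0] * grid[1] * grid[2]

print(grid)                                 # (30, 45, 80)
print(pixel_positions // latent_positions)  # 1024
```

The reduction factor of 1024 (4 × 16 × 16) counts spatio-temporal positions only and ignores channel counts. A smaller latent grid means fewer positions for the generation model to process, which helps keep memory use within reach of a consumer GPU.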

Availability and Impact

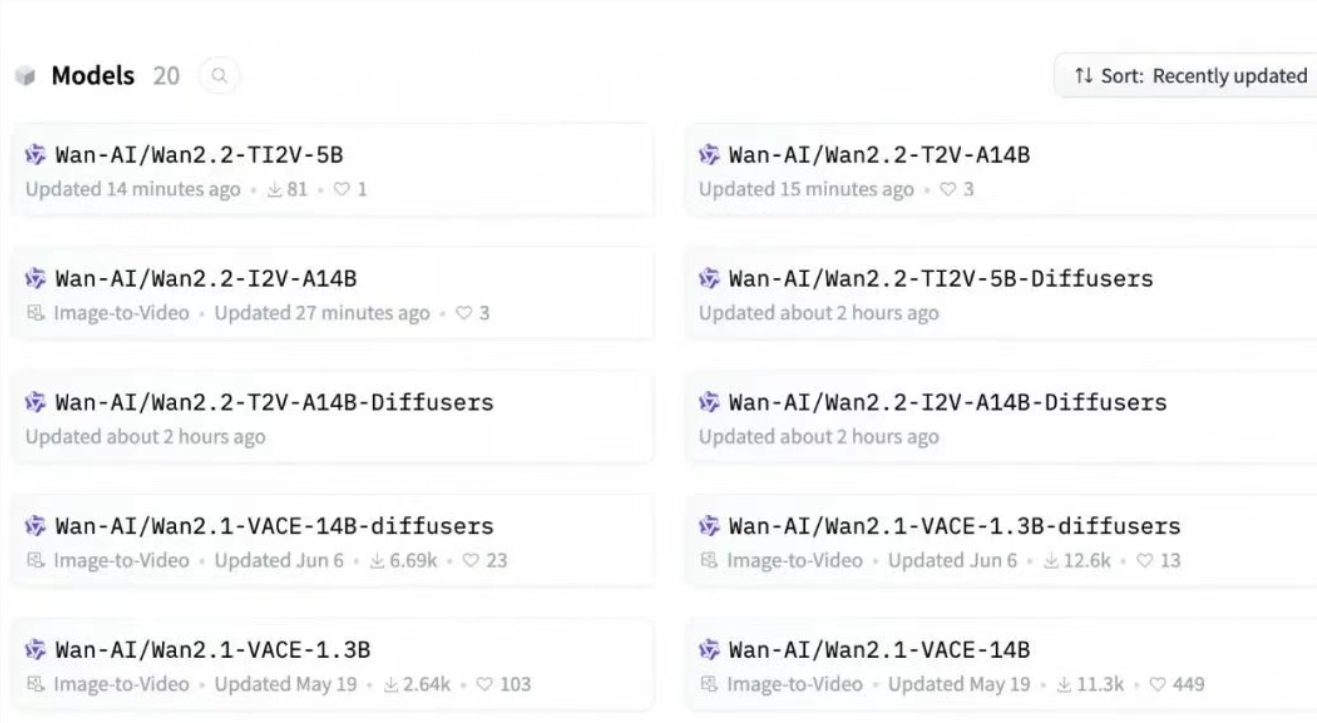

The models are accessible through multiple channels:

- Code repositories: GitHub, Hugging Face, ModelScope community

- Cloud API: Alibaba Cloud Bailian

- Direct experience: Tongyi Wanxiang website and app

Since February, Tongyi Wanxiang's open-source models have been downloaded more than 5 million times.

Key Points:

- First implementation of MoE architecture in video generation models

- About 50% computational savings, with 14 billion of 27 billion parameters active

- Professional-grade cinematic control system

- Consumer-GPU deployable unified model

- Available through multiple open-source platforms