Anthropic's Claude Sonnet 4 Expands to 1M Tokens


Artificial intelligence startup Anthropic has expanded the context window of its Claude Sonnet 4 model to 1 million tokens, a fivefold increase over the previous limit of 200,000 tokens. Developers can now process far larger inputs in a single request, such as an entire codebase of more than 75,000 lines of code.

Availability and Access

Long-context support is now in public beta on the Anthropic API and Amazon Bedrock, with Google Cloud Vertex AI expected to add it soon. On the Anthropic API, access is currently limited to customers with Tier 4 or custom rate limits. Anthropic plans to extend access to more developers in the coming weeks.

New Pricing Structure

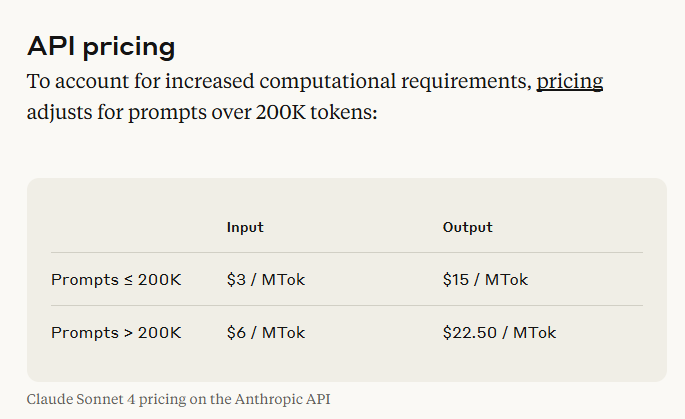

To account for the higher computational cost of longer prompts, Anthropic has introduced tiered pricing based on prompt length:

- For prompts of up to 200,000 tokens: $3 per million input tokens and $15 per million output tokens.

- For prompts exceeding 200,000 tokens: $6 per million input tokens and $22.50 per million output tokens.

Developers can further reduce costs with prompt caching and batch processing. Batch processing can be used with the 1M context window and offers a 50% discount.
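To make the tiers concrete, here is a minimal Python sketch of how a request's cost could be estimated from the figures above. The function and constant names are hypothetical, and the sketch assumes that the higher rate applies to the entire request once the prompt exceeds 200,000 tokens and that the batch discount is a flat 50% on the total. It ignores caching entirely, so treat it as a rough estimator rather than Anthropic's billing logic.

```python
from dataclasses import dataclass

# Threshold and prices are taken from the article; all names are illustrative.
LONG_CONTEXT_THRESHOLD = 200_000  # prompt tokens


@dataclass(frozen=True)
class Rates:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens


STANDARD = Rates(input_per_mtok=3.00, output_per_mtok=15.00)
LONG_CONTEXT = Rates(input_per_mtok=6.00, output_per_mtok=22.50)


def estimate_cost(input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """Estimate the USD cost of one request.

    Assumption: once the prompt exceeds the threshold, the long-context
    rates apply to every input and output token in the request.
    """
    rates = LONG_CONTEXT if input_tokens > LONG_CONTEXT_THRESHOLD else STANDARD
    cost = (
        input_tokens * rates.input_per_mtok
        + output_tokens * rates.output_per_mtok
    ) / 1_000_000
    if batch:
        cost *= 0.5  # assumed flat 50% batch discount
    return cost


if __name__ == "__main__":
    print(f"{estimate_cost(100_000, 2_000):.2f}")              # 0.33
    print(f"{estimate_cost(500_000, 4_000):.2f}")              # 3.09
    print(f"{estimate_cost(500_000, 4_000, batch=True):.3f}")  # 1.545
```

Under these assumptions, a 500,000-token prompt with 4,000 output tokens costs about $3.09, or roughly $1.55 when submitted as a batch.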

OpenAI's Stance on Long Context

In a recent Reddit AMA, OpenAI executives discussed the potential for long-context support in their models. CEO Sam Altman noted that while there isn't strong user demand yet, OpenAI would consider adding the feature if interest grows. Team member Michelle Pokrass revealed that GPT-5 was initially planned to support up to 1 million tokens but faced GPU limitations.

Competitive Landscape

Anthropic's move positions it as a direct competitor to Google Gemini in the long-context arena, potentially pressuring OpenAI to revisit its product roadmap.

Key Points:

- 🆕 Claude Sonnet 4 now supports a 1-million-token context window, allowing much larger inputs in a single request.

- 💰 New pricing tiers introduced for prompts above and below 200,000 tokens, with cost-saving options like batch processing.

- 🤖 OpenAI is monitoring demand for long-context support and may adjust its roadmap in response.